Add NAS Visualization Documentation (#2257)

* nas ui docs
* add link in overview
* update
* Update Visualization.md

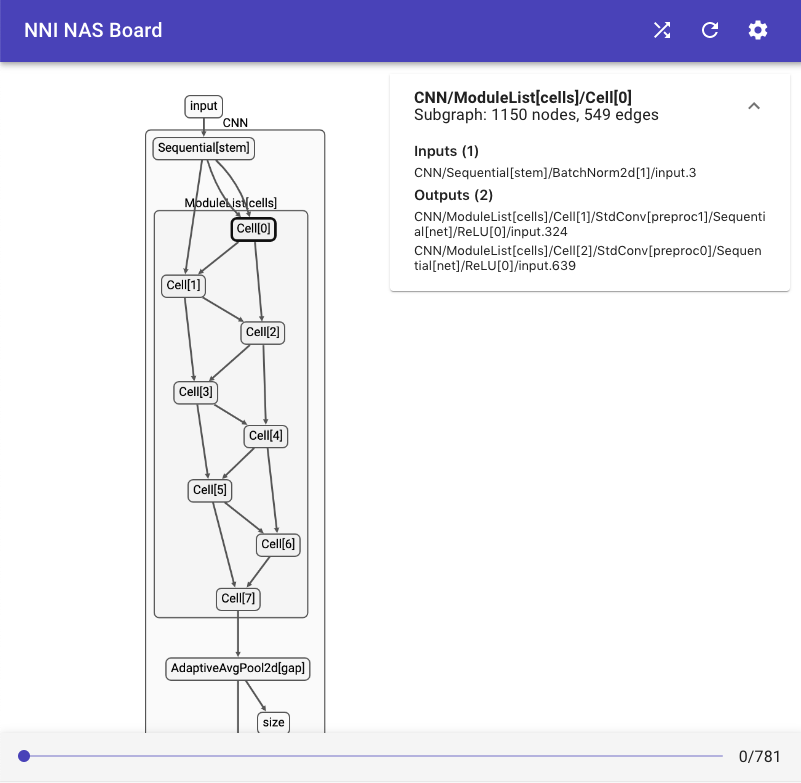

docs/img/nasui-1.png (new file, mode 0 → 100644, 80.8 KB)

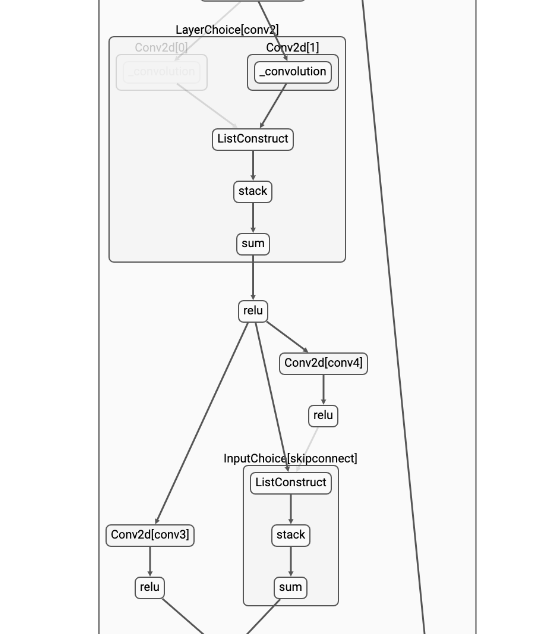

docs/img/nasui-2.png (new file, mode 0 → 100644, 50.2 KB)