Request for Integrating the new NAS algorithm: Cream (#2705)

docs/en_US/NAS/Cream.md

0 → 100644

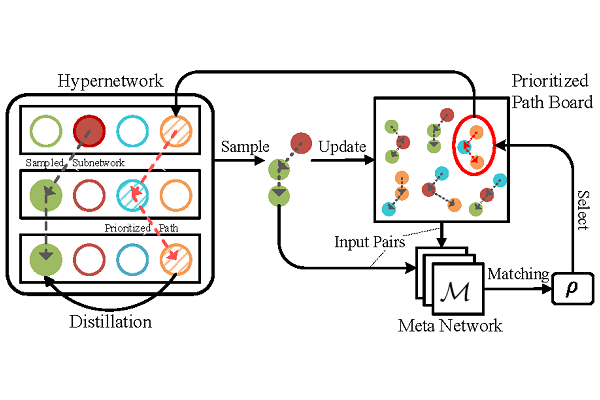

docs/img/cream.png

0 → 100644

63.6 KB

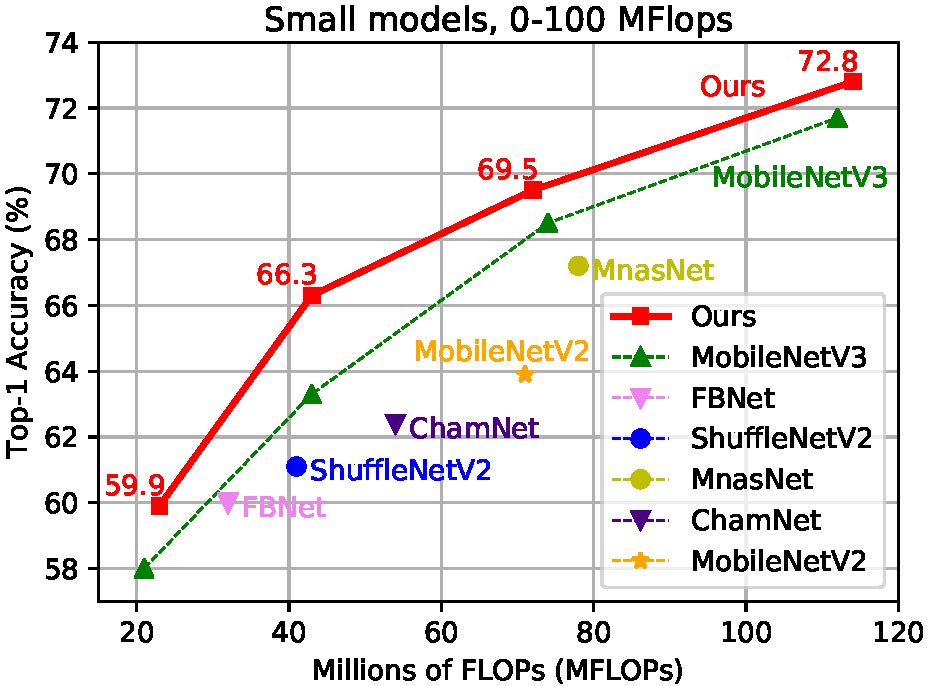

docs/img/cream_flops100.jpg

0 → 100644

103 KB

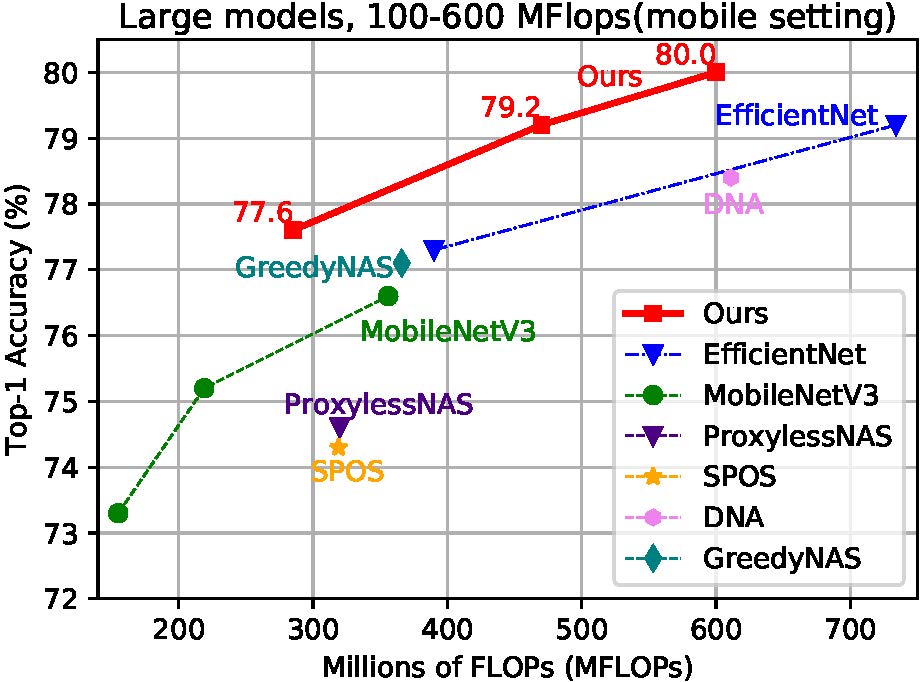

docs/img/cream_flops600.jpg

0 → 100644

104 KB

examples/__init__.py

0 → 100644

examples/nas/__init__.py

0 → 100644

examples/nas/cream/Cream.md

0 → 100644