Doc refactor (#258)

* doc refactor
* image name refactor

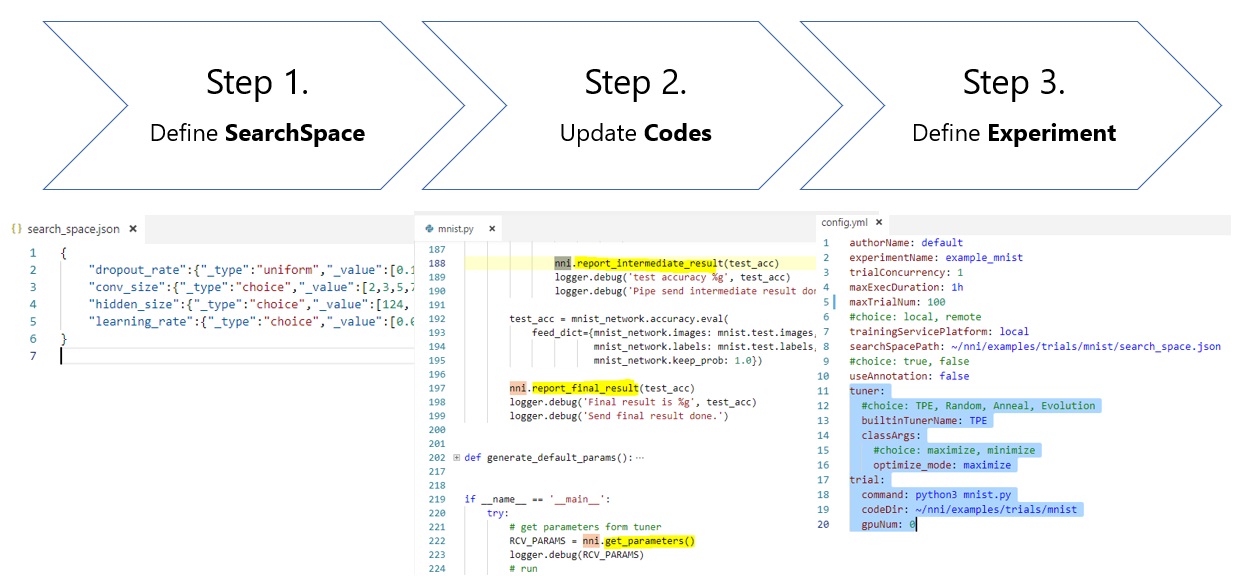

docs/3_steps.jpg

deleted

100644 → 0

159 KB

docs/AnnotationSpec.md

0 → 100644

docs/InstallNNI_Ubuntu.md

0 → 100644

docs/Overview.md

0 → 100644

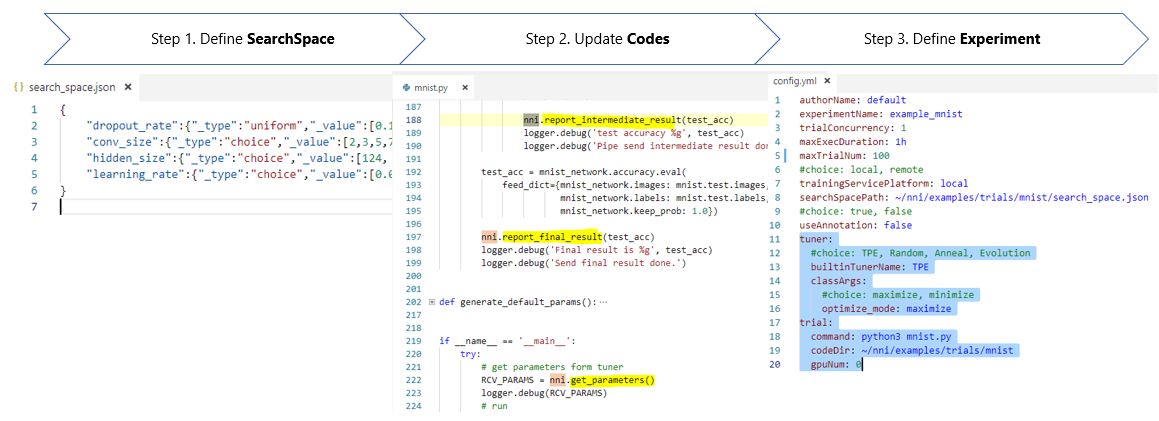

docs/img/3_steps.jpg

0 → 100644

77.7 KB