Merge pull request #21 from microsoft/master

pull code

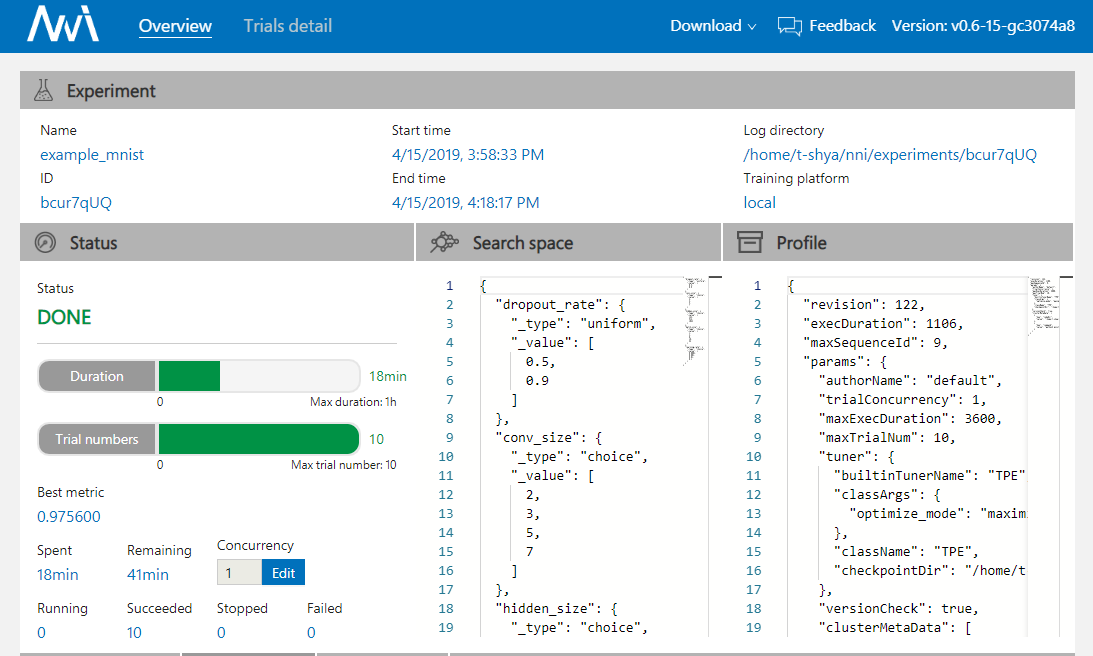

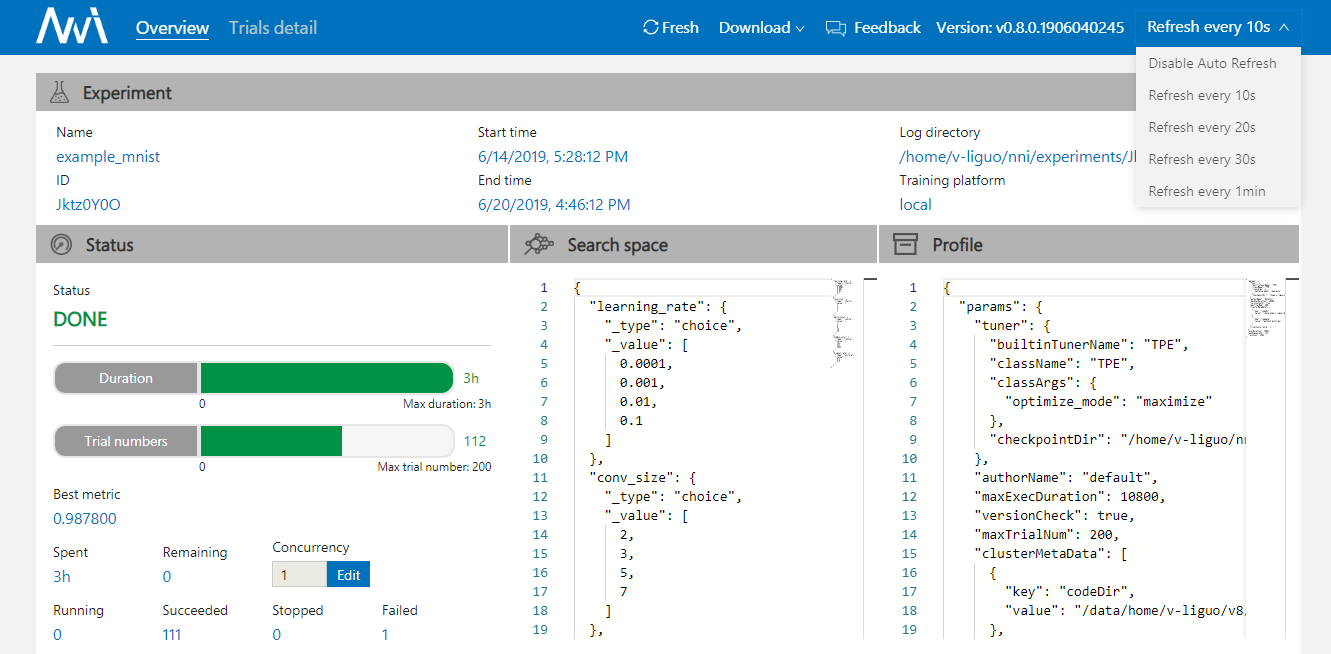

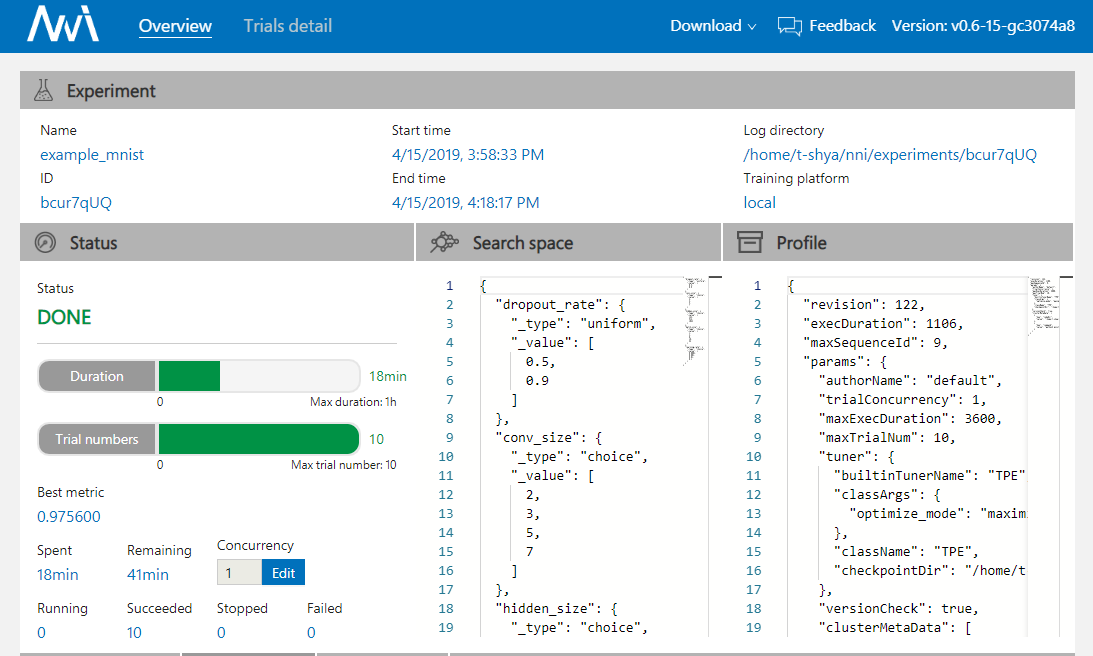

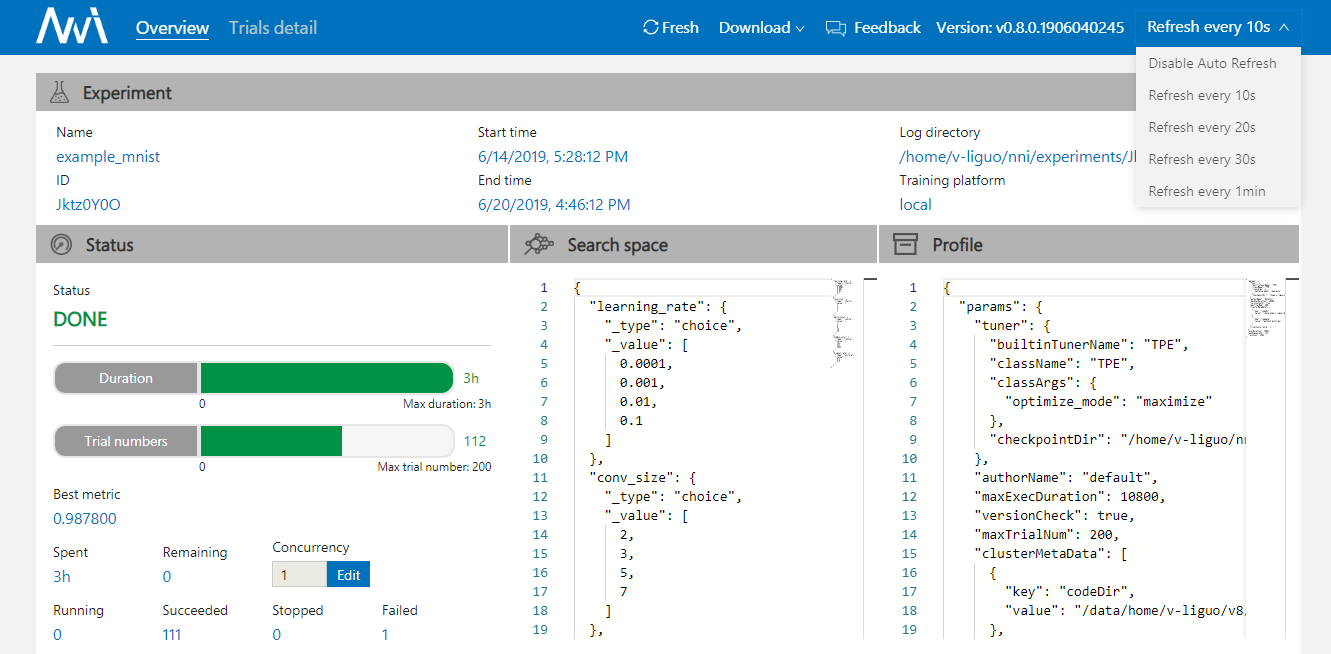
