Merge branch 'fix-api-doc' into 'master'

Update docstrings

See merge request open-mmlab/mmdet.3d!138

Showing

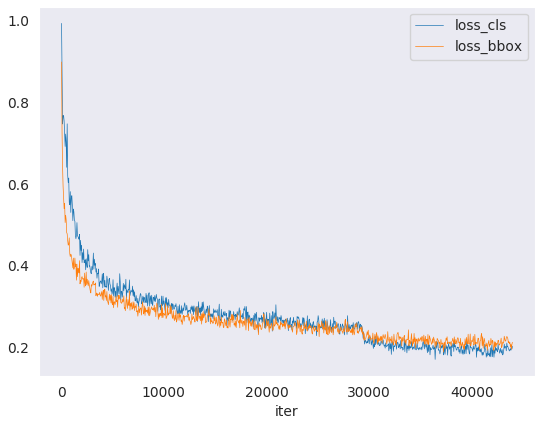

resources/loss_curve.png

0 → 100644

36.6 KB

resources/mmdet3d-logo.png

0 → 100644

32 KB
