[Enhance] Dataset browse for multiple dataset types (#467)

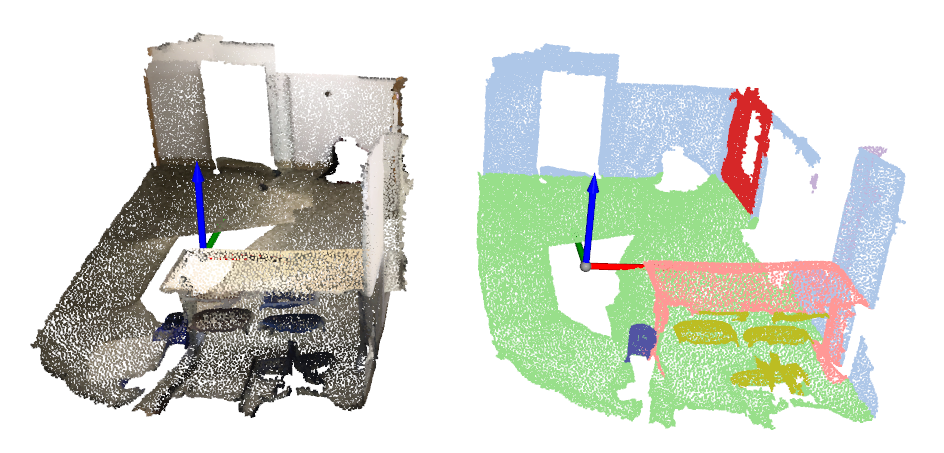

* fix small bug in get_loading_pipeline()
* adopt eval_pipeline & support seg visualization
* support multi-modality vis
* fix small bugs
* add multi-modality args & support S3DIS vis
* update docs with imgs
* fix typos
