Merge pull request #165 from laekov/parallel-doc

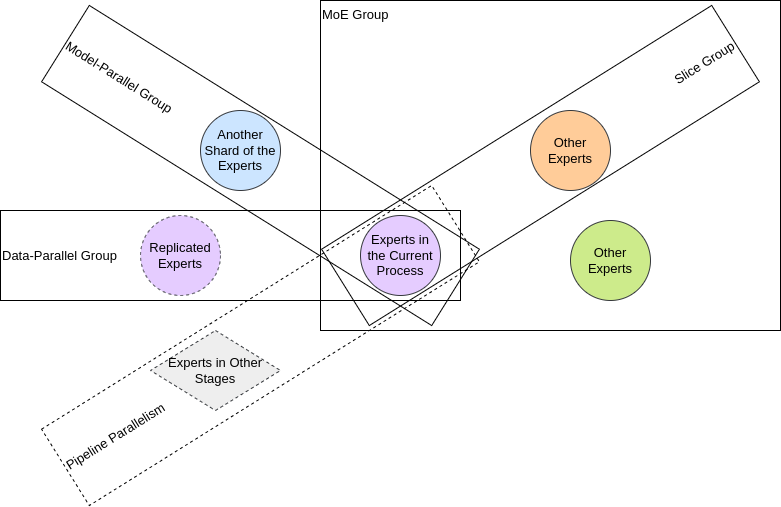

Document for process groups

doc/parallelism/README.md

0 → 100644

File moved

68.7 KB