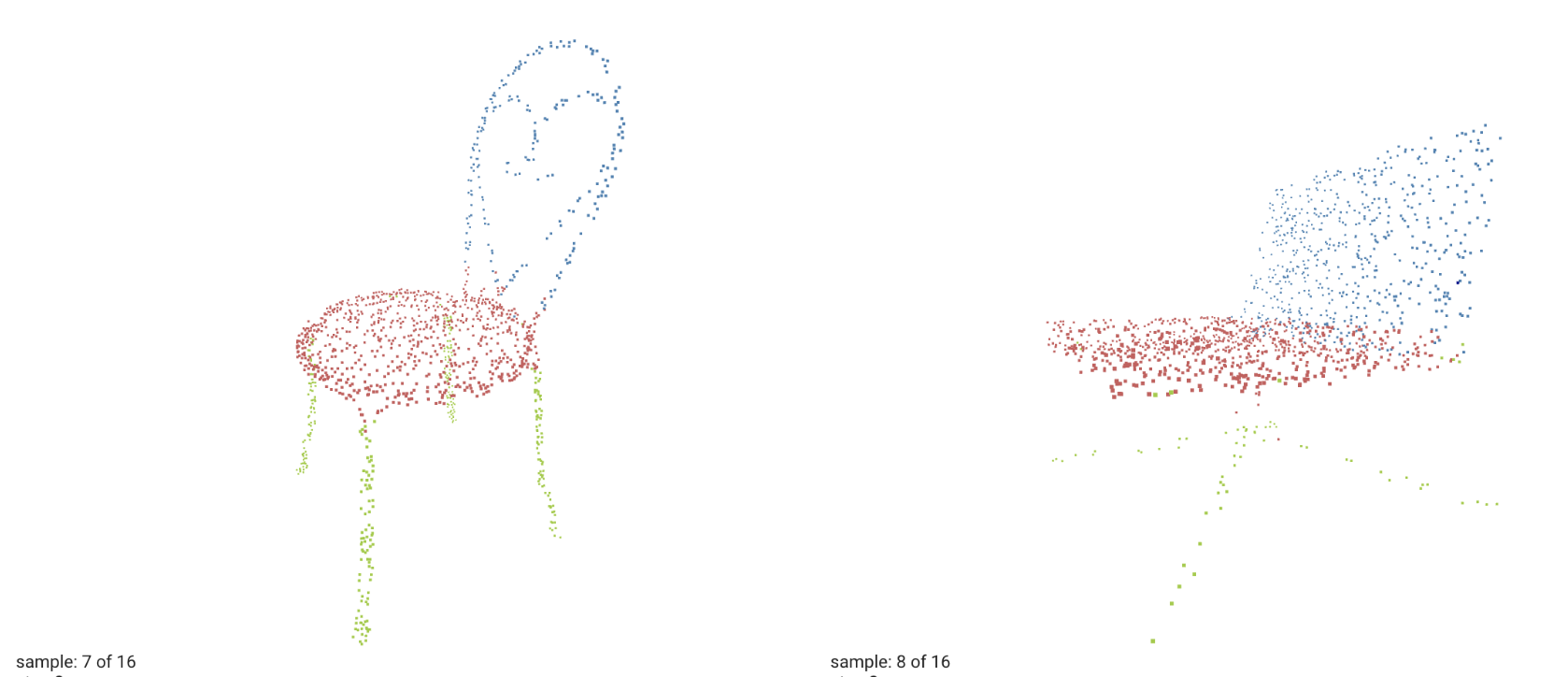

[Example] update PointNet and PointNet++ examples for Part Segmentation (#2547)

* [Model] update PointNet example for Part Segmentation

* Fixed issues with PointNet examples

* update the README

* Added image

* Fixed README and TensorBoard arguments

* Clean up

* Add timing

* Update README.md

* Update README.md

Fixed a typo

Co-authored-by: Tong He <hetong007@gmail.com>
