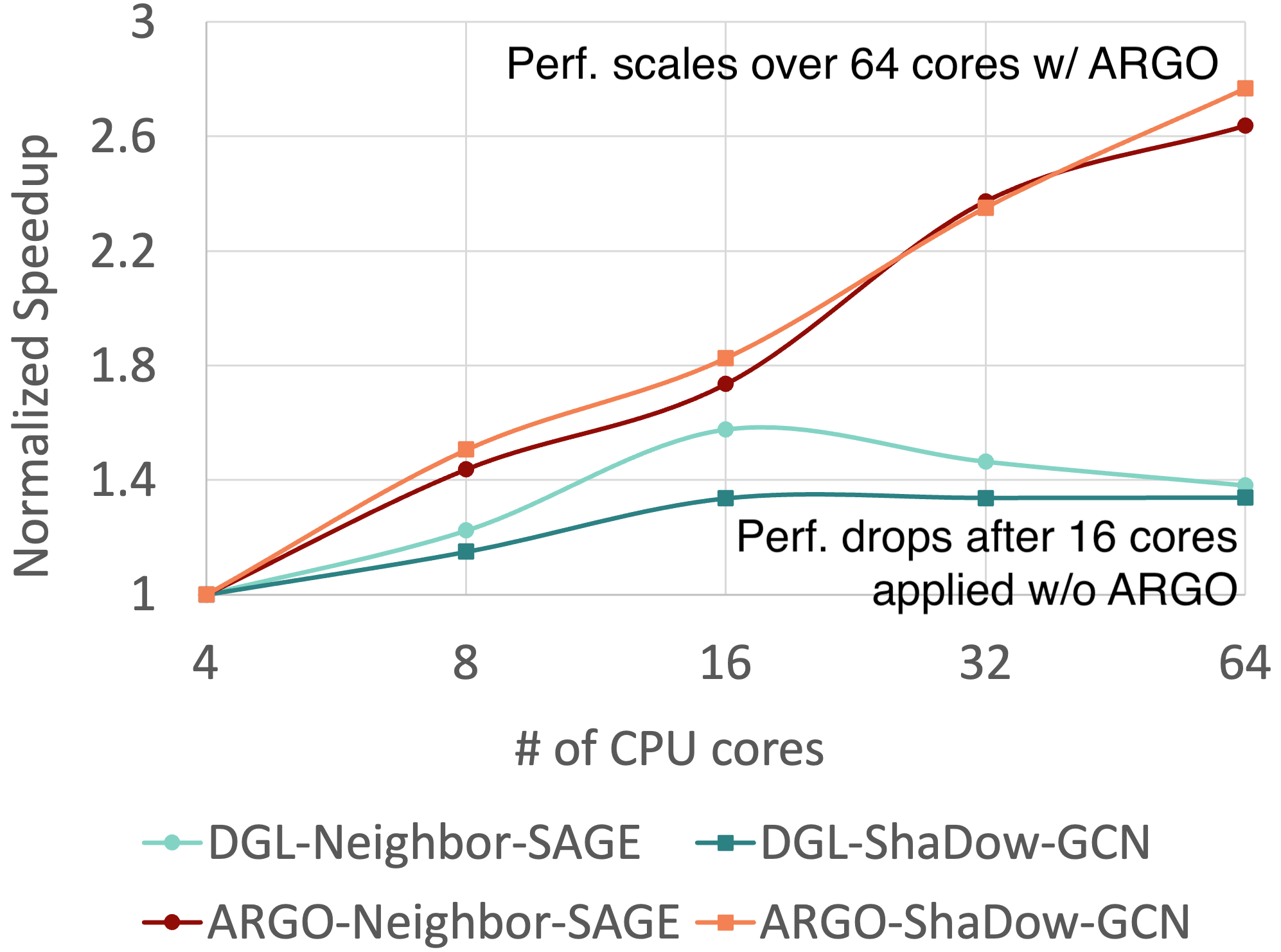

[Feature] ARGO: an easy-to-use runtime to improve GNN training performance on multi-core processors (#7003)

Co-authored-by: Andrzej Kotłowski <Andrzej.Kotlowski@intel.com>
Co-authored-by: Hongzhi (Steve), Chen <chenhongzhi.nkcs@gmail.com>
