triton-v1

Showing

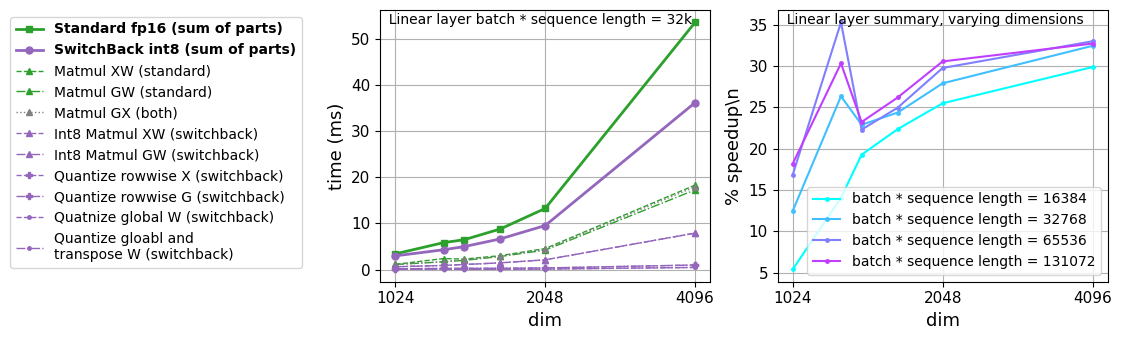

tests/triton_tests/plot1.pdf

0 → 100644

File added

tests/triton_tests/plot1.png

0 → 100644

119 KB

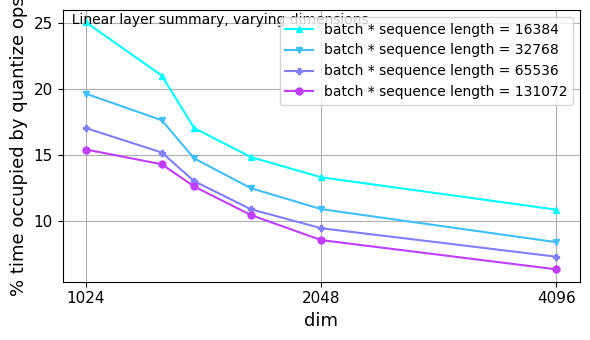

tests/triton_tests/plot2.pdf

0 → 100644

File added

tests/triton_tests/plot2.png

0 → 100644

50.8 KB

tests/triton_tests/plot2.py

0 → 100644

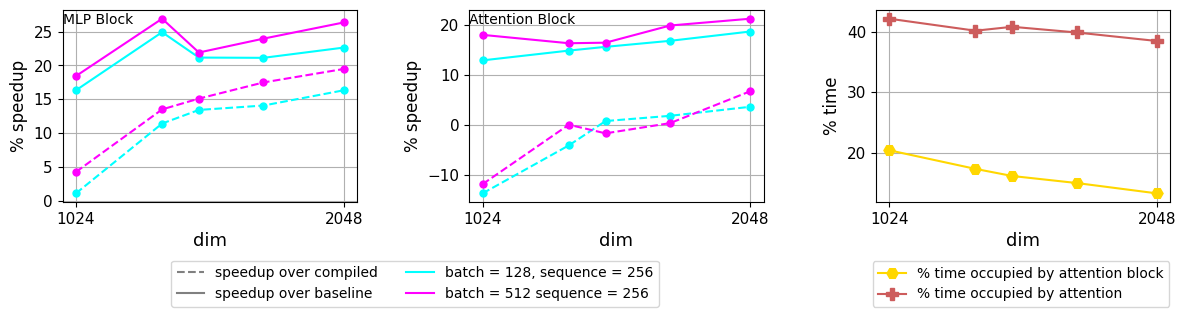

tests/triton_tests/plot3.pdf

0 → 100644

File added

tests/triton_tests/plot3.png

0 → 100644

57 KB

tests/triton_tests/plot3.py

0 → 100644