first commit

parents

Showing

examples/chat_demo.ipynb

0 → 100644

examples/llava_demo.ipynb

0 → 100644

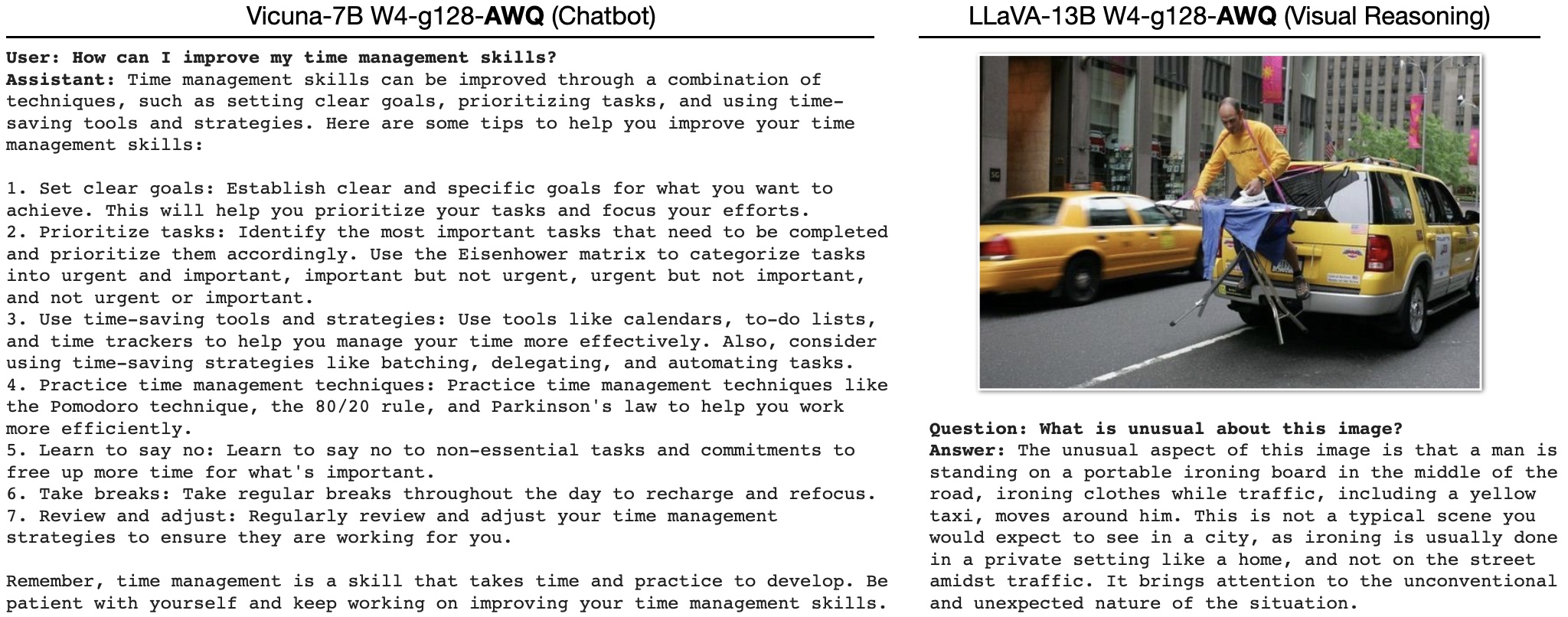

figures/example_vis.jpg

0 → 100644

592 KB

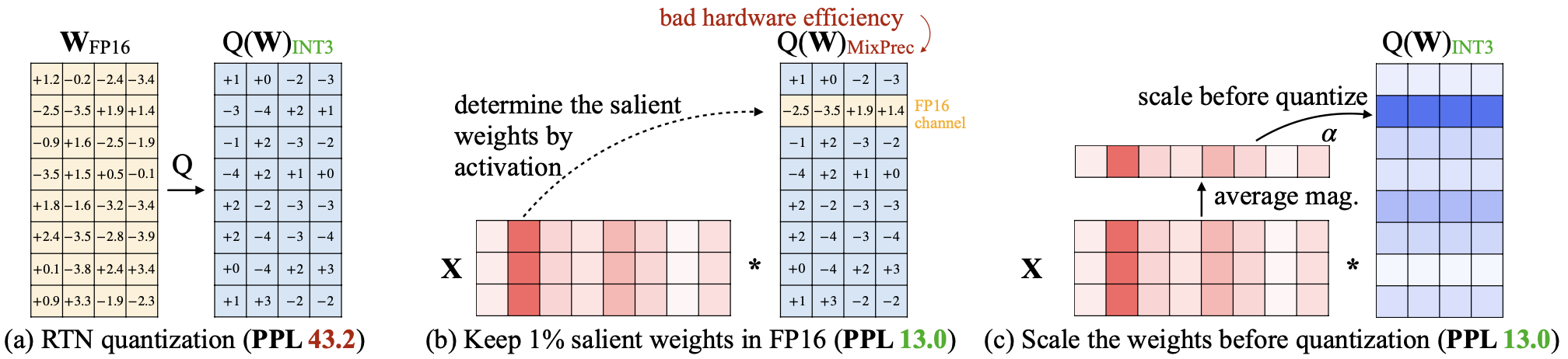

figures/overview.png

0 → 100644

139 KB

pyproject.toml

0 → 100644

scripts/llama_example.sh

0 → 100644

scripts/opt_example.sh

0 → 100644