0402 update


.pre-commit-config.yaml

New file (mode 100644)

Dockerfile

New file (mode 100644)

Dockerfile.nightly

New file (mode 100644)

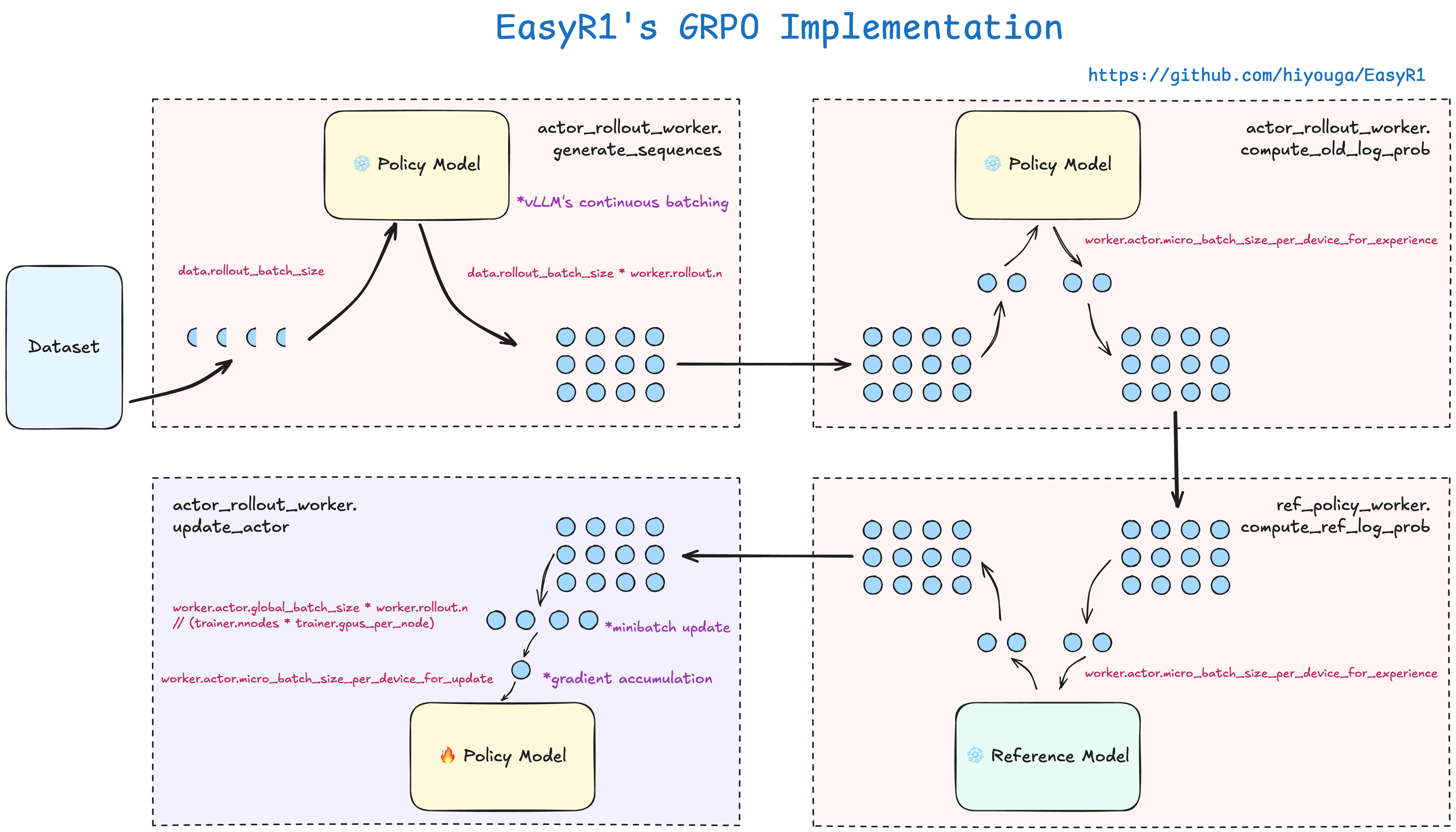

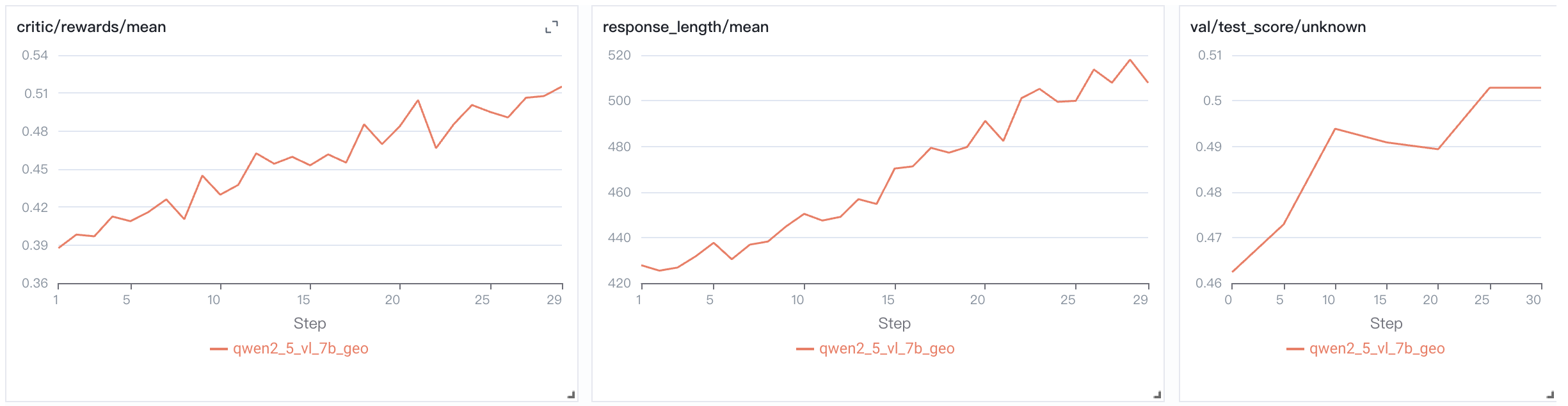

assets/easyr1_grpo.png

New file (mode 100644), 845 KB


examples/config.yaml

New file (mode 100644)