Merge pull request #5 from PaddlePaddle/develop

merge paddleocr

doc/doc_en/whl_en.md (new file, mode 0 → 100644)

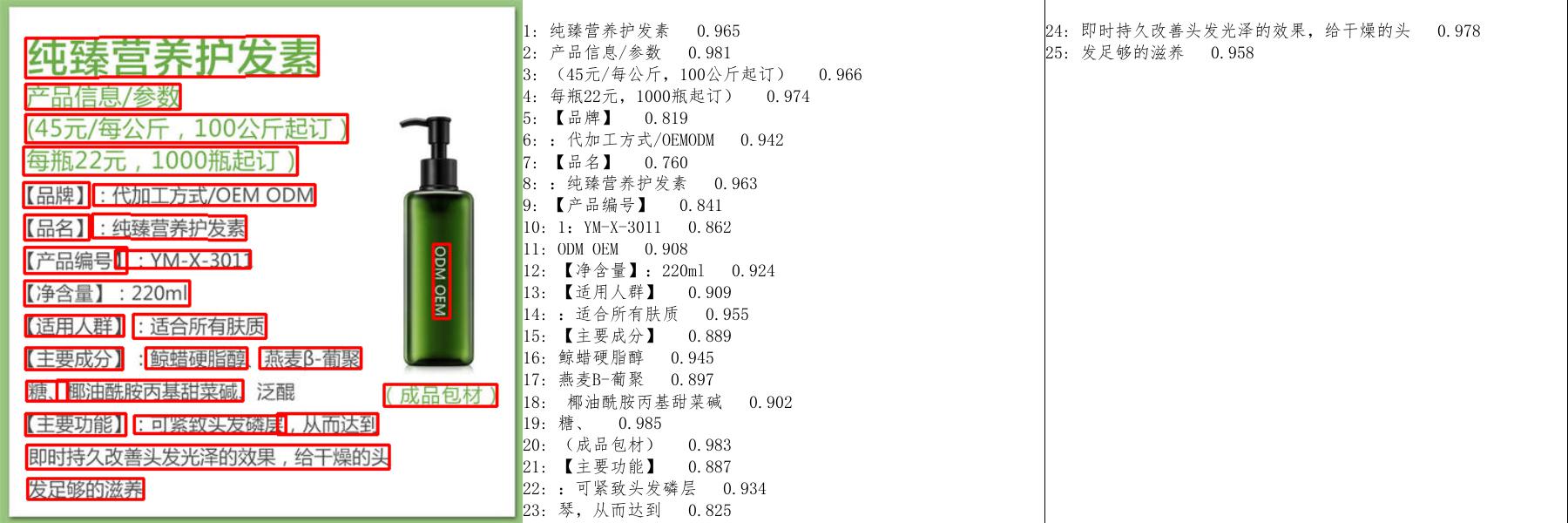

doc/imgs_en/img623.jpg (new file, mode 0 → 100755)

paddleocr.py (new file, mode 0 → 100644)

setup.py (new file, mode 0 → 100644)