merge dygraph

configs/table/table_mv3.yml

0 → 100755

doc/table/1.png

0 → 100644

263 KB

55.4 KB

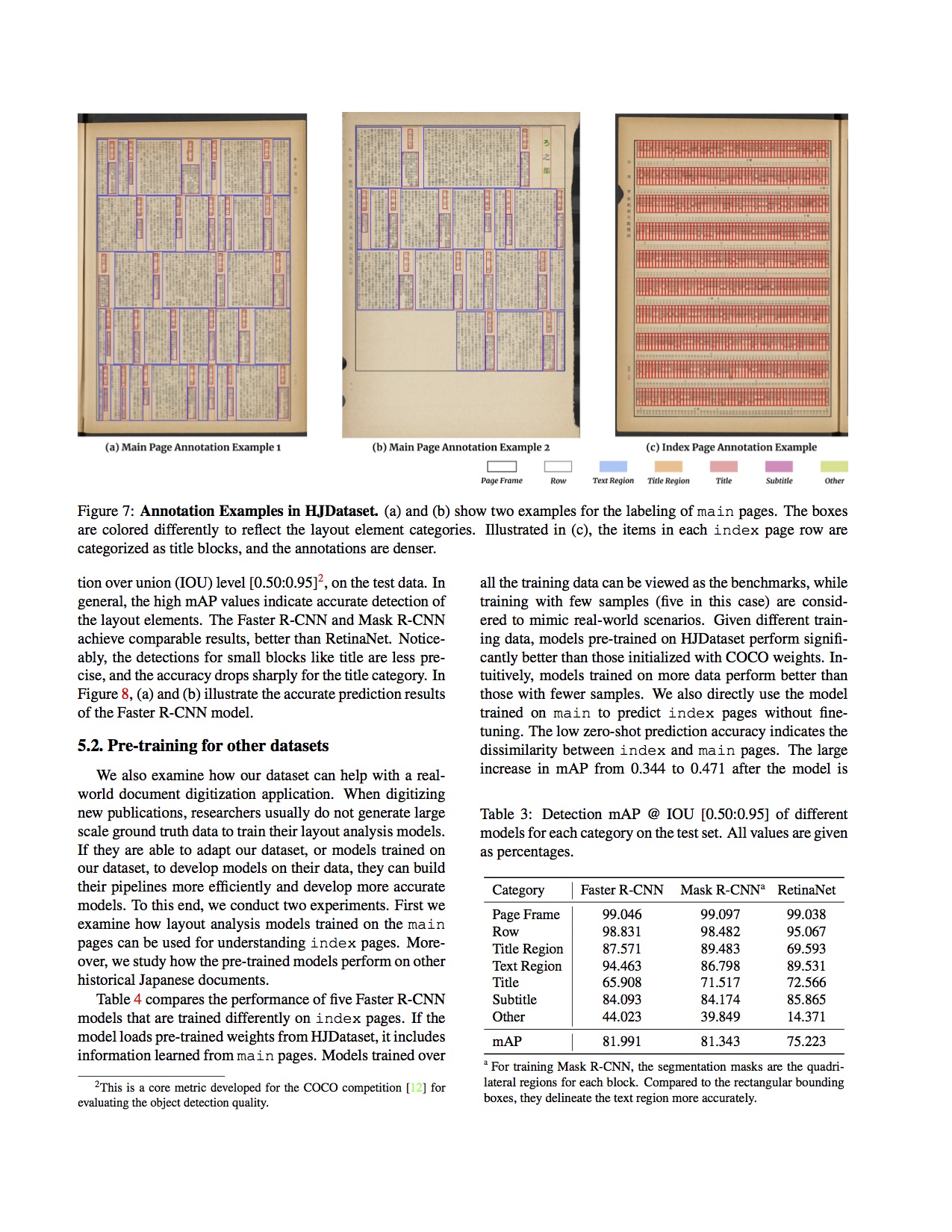

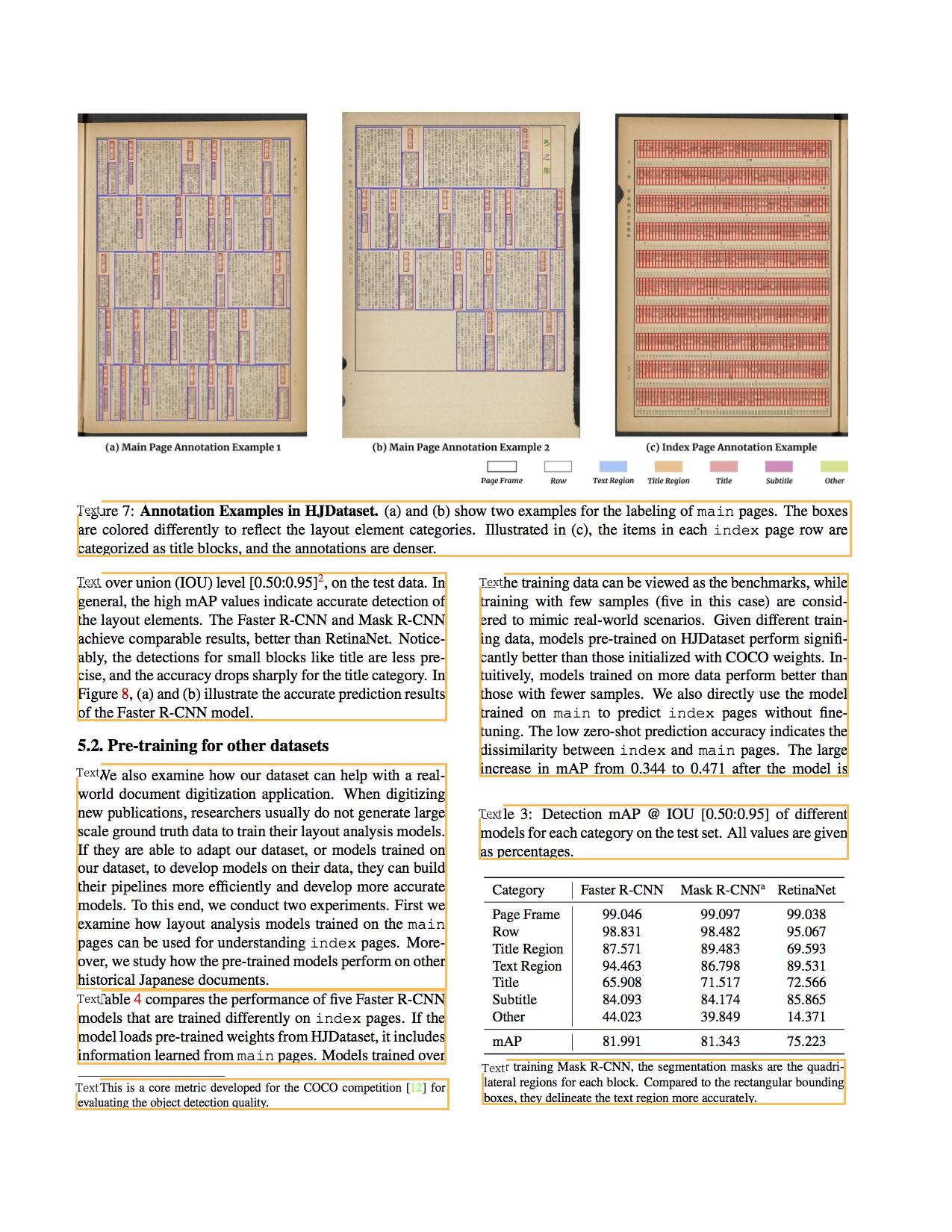

doc/table/paper-image.jpg

0 → 100644

671 KB

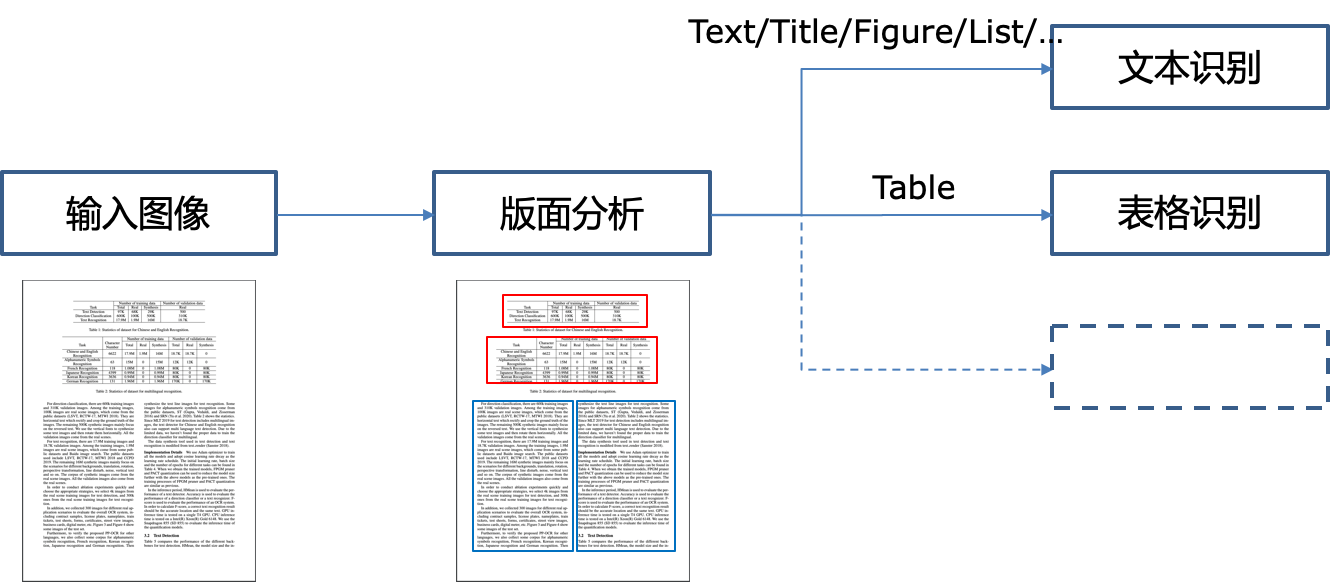

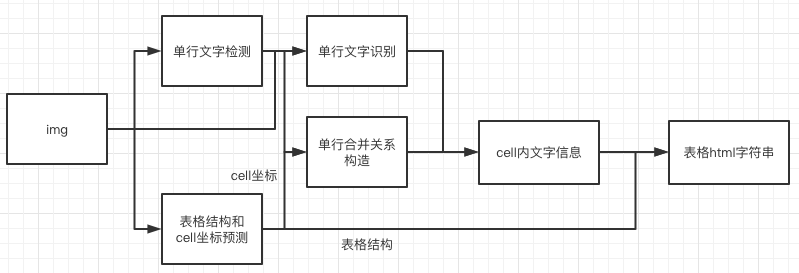

doc/table/pipeline.png

0 → 100644

116 KB

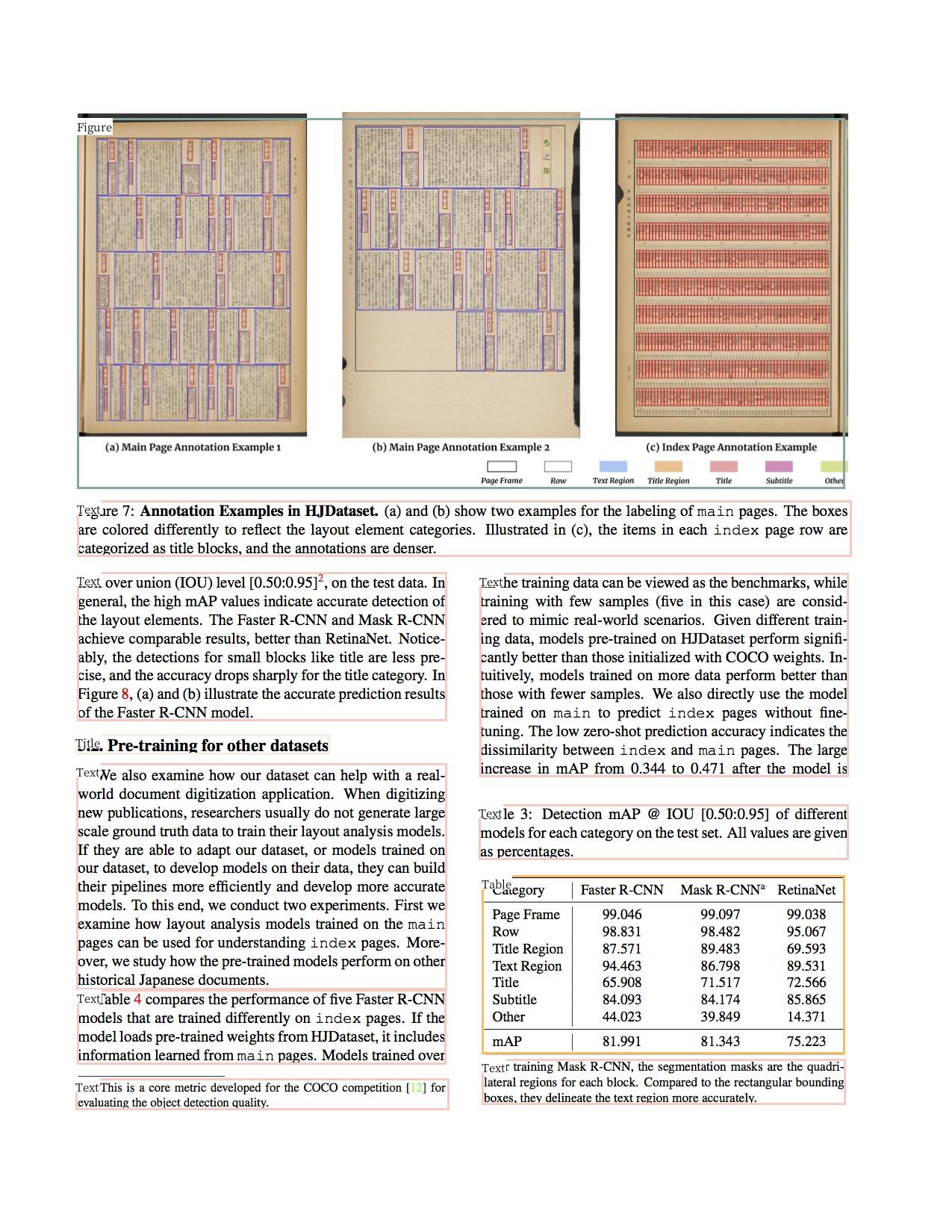

doc/table/result_all.jpg

0 → 100644

386 KB

doc/table/result_text.jpg

0 → 100644

388 KB

26.1 KB