Merge branch 'develop' of https://github.com/PaddlePaddle/PaddleOCR into fixocr

doc/doc_ch/quickstart.md

0 → 100644

doc/doc_ch/reference.md

0 → 100644

doc/doc_ch/serving.md

0 → 100644

doc/doc_ch/visualization.md

0 → 100644

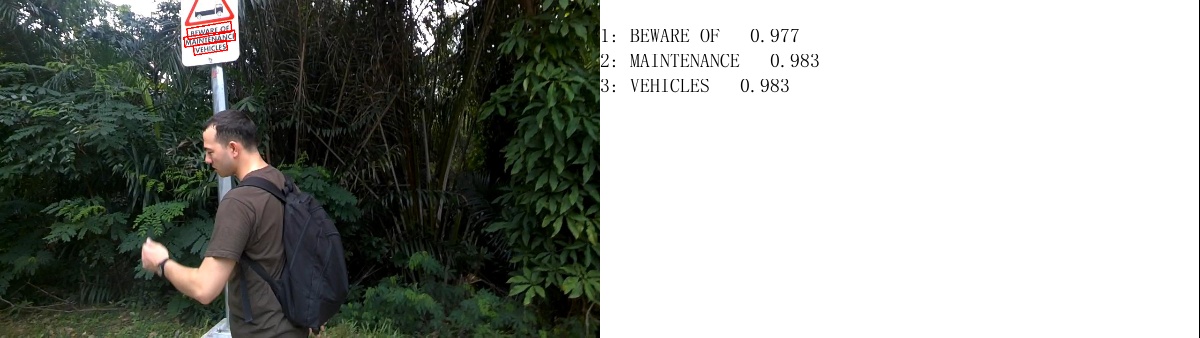

doc/imgs_en/img_12.jpg

0 → 100644

722 KB

153 KB

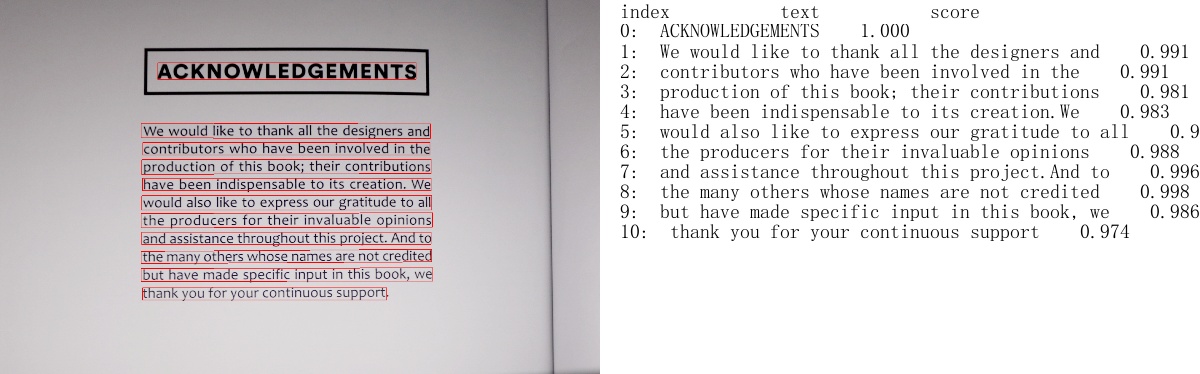

doc/imgs_results/img_11.jpg

0 → 100644

118 KB

tools/test_hubserving.py

0 → 100644