
fix conflict


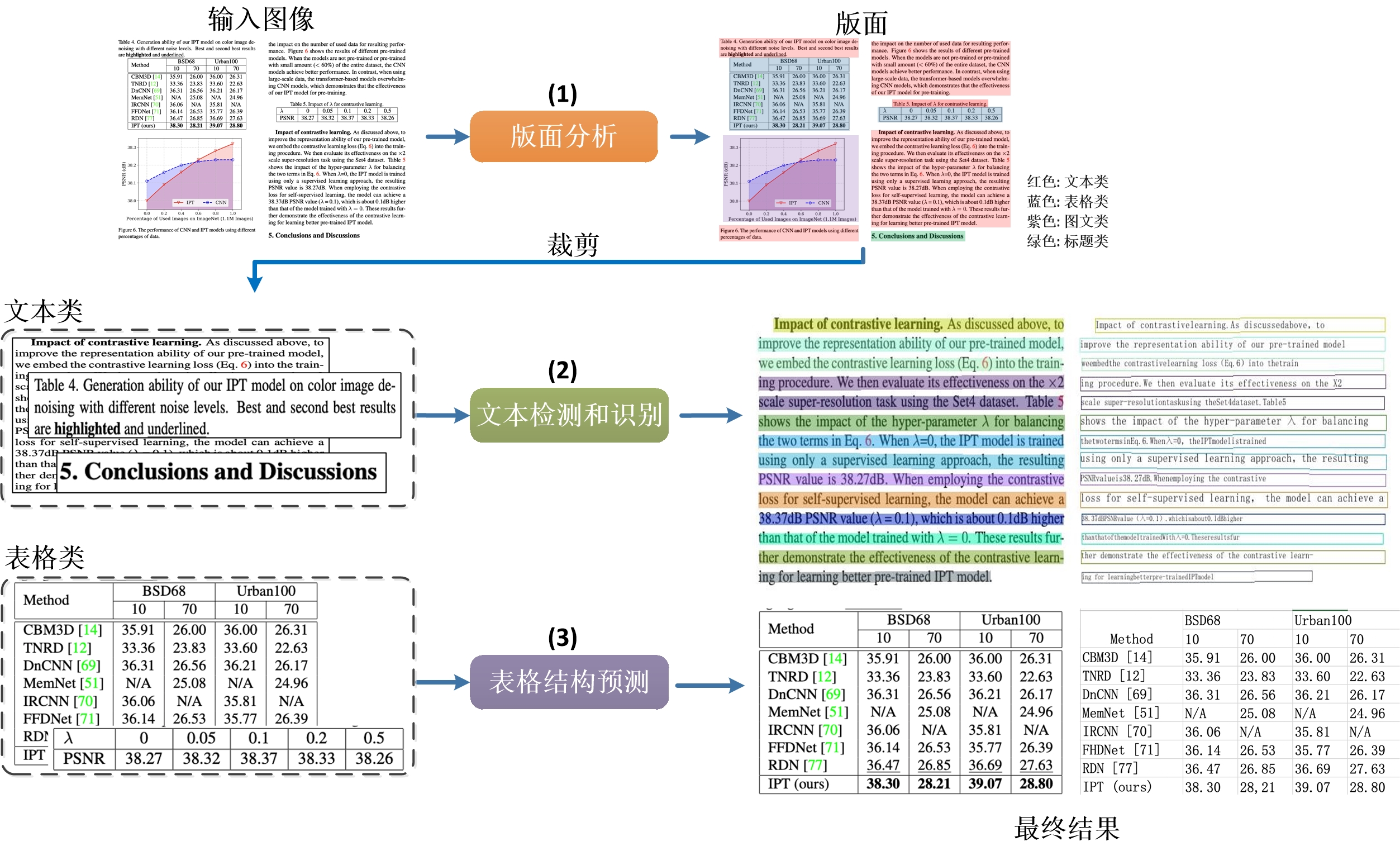

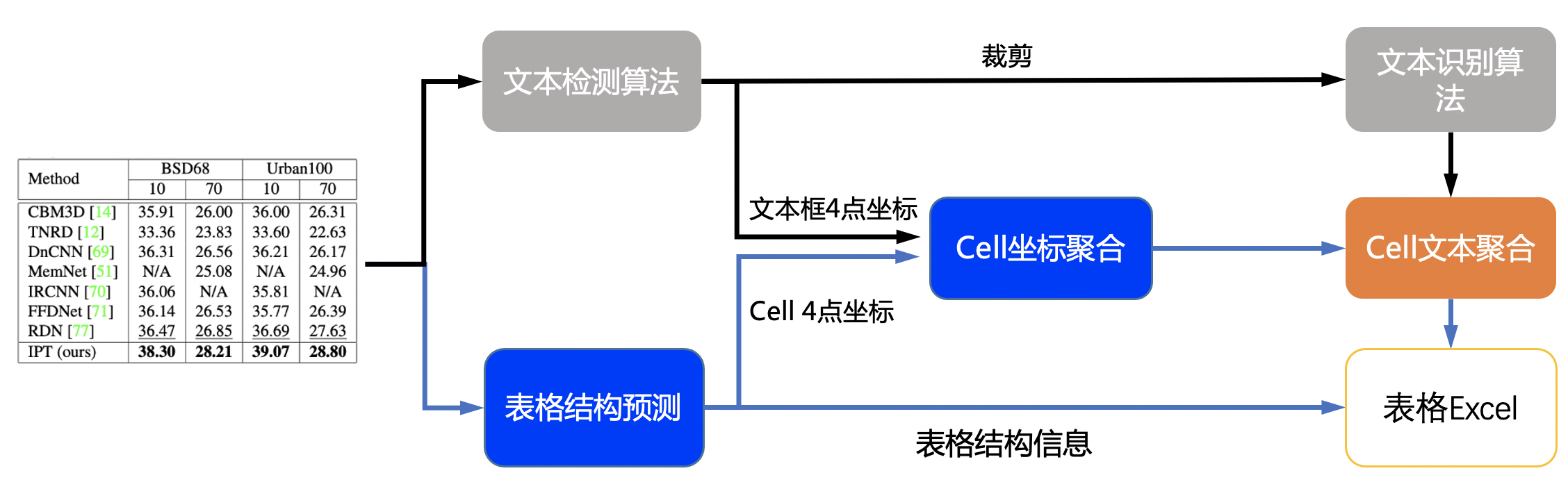

doc/table/pipeline.jpg (0 → 100644, 1.46 MB)

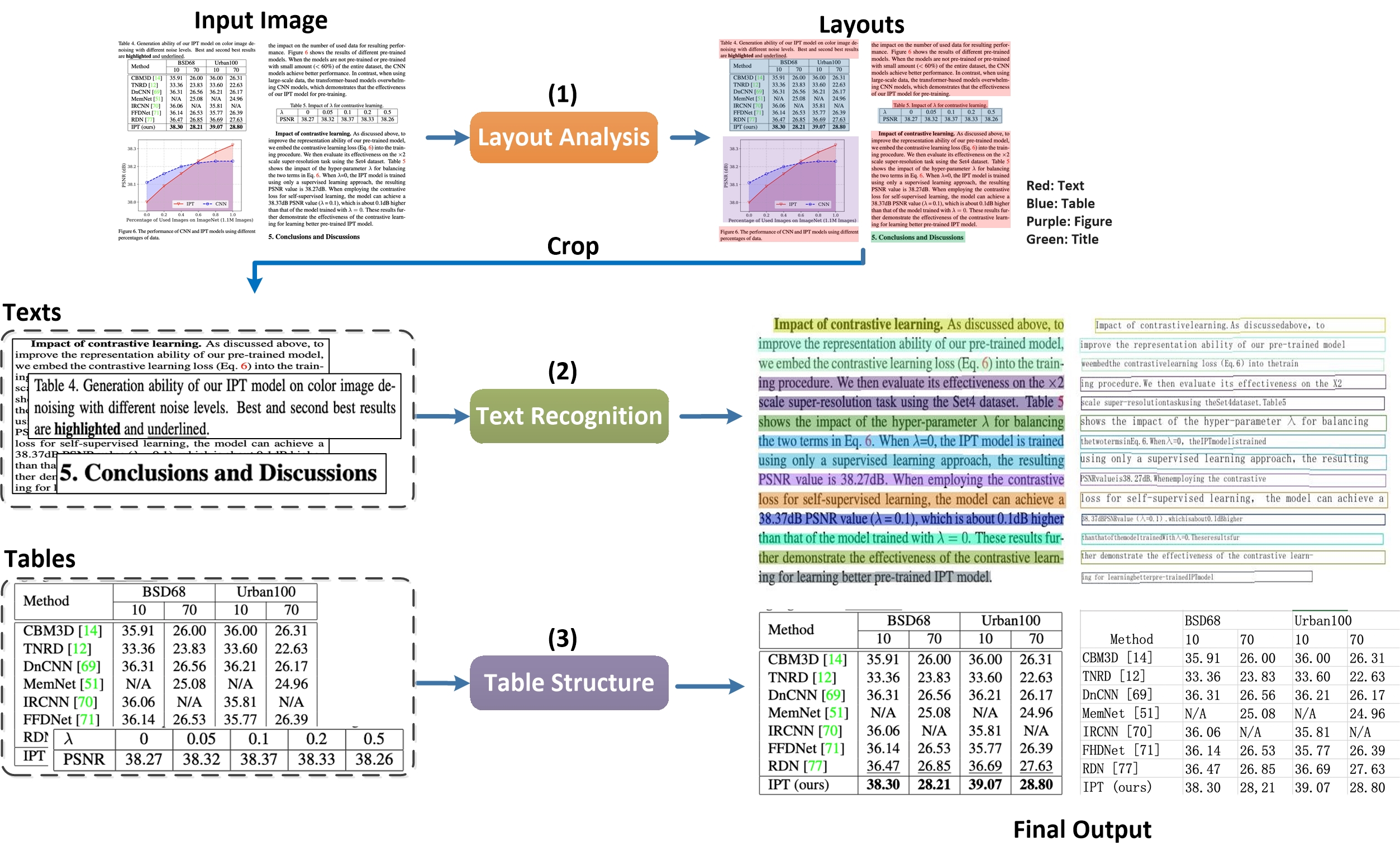

doc/table/pipeline_en.jpg (0 → 100644, 1.45 MB)

doc/table/ppstructure.GIF (0 → 100644, 2.49 MB)

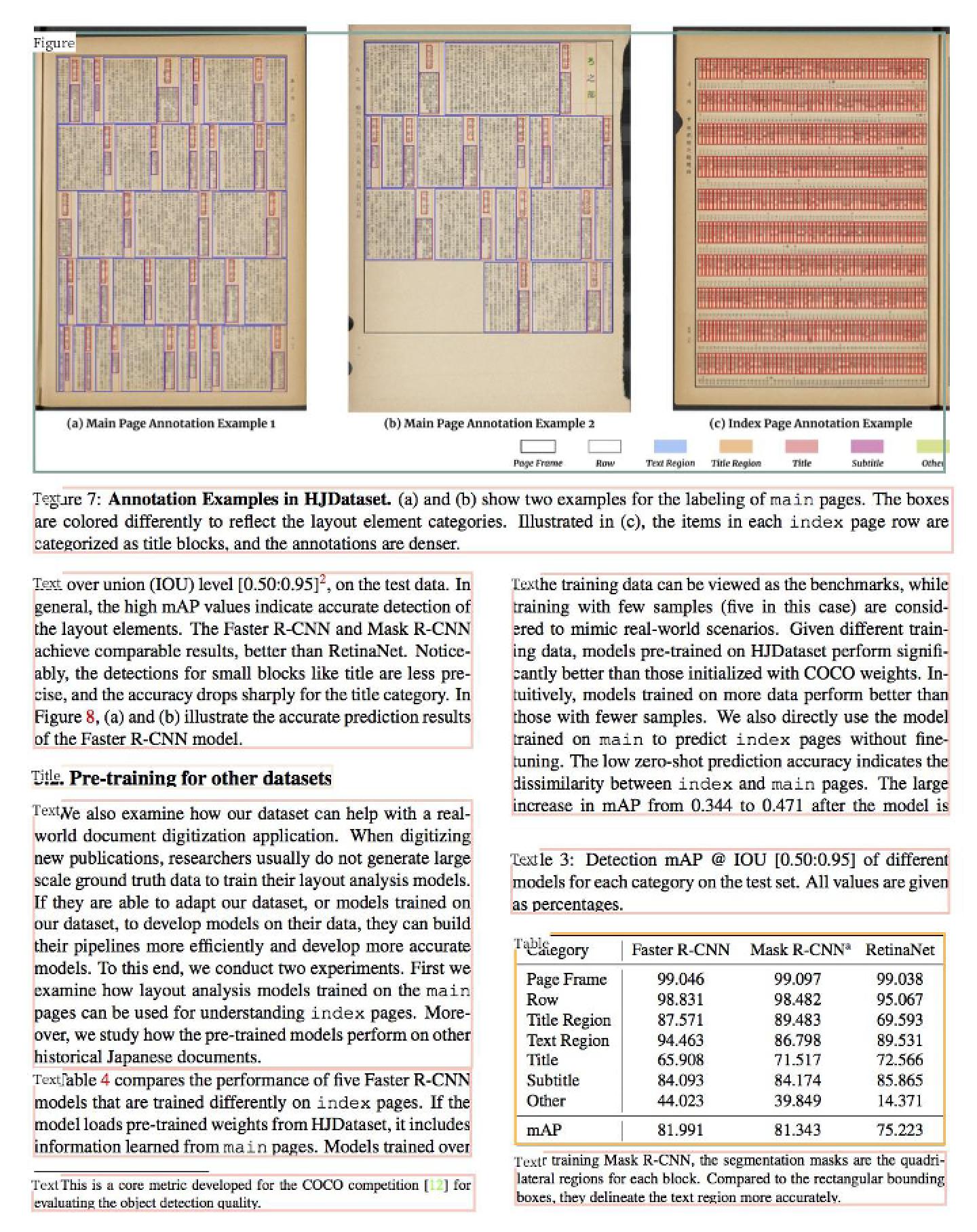

doc/table/result_all.jpg (0 → 100644, 521 KB)

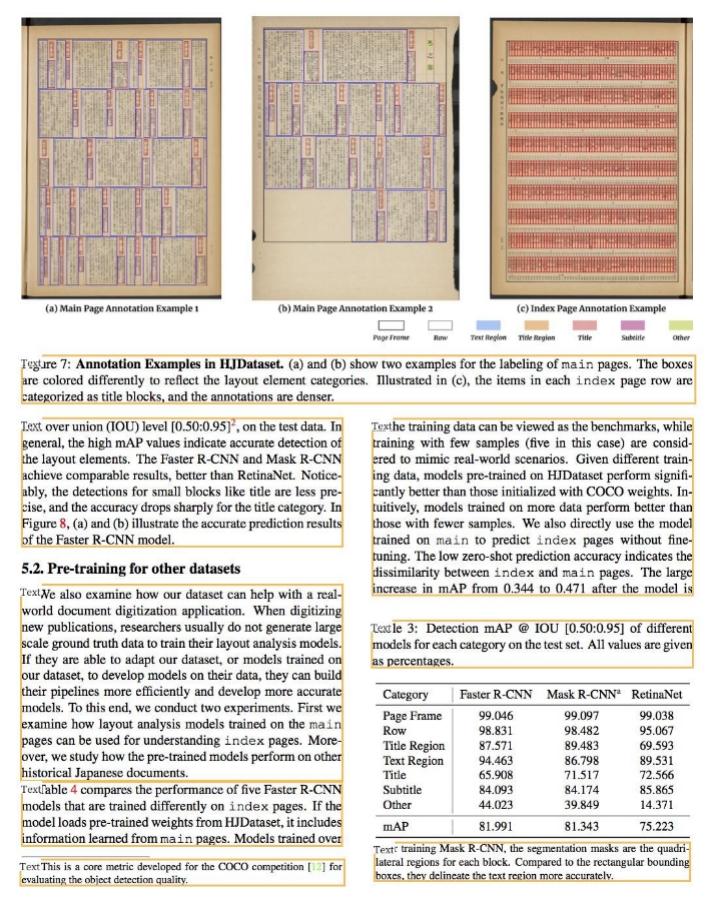

doc/table/result_text.jpg (0 → 100644, 146 KB)

doc/table/table.jpg (0 → 100644, 24.1 KB)

552 KB

416 KB

ppocr/data/pubtab_dataset.py (0 → 100644)

ppocr/losses/basic_loss.py (0 → 100644)