Merge remote-tracking branch 'origin/dygraph' into dygraph

File moved

File moved

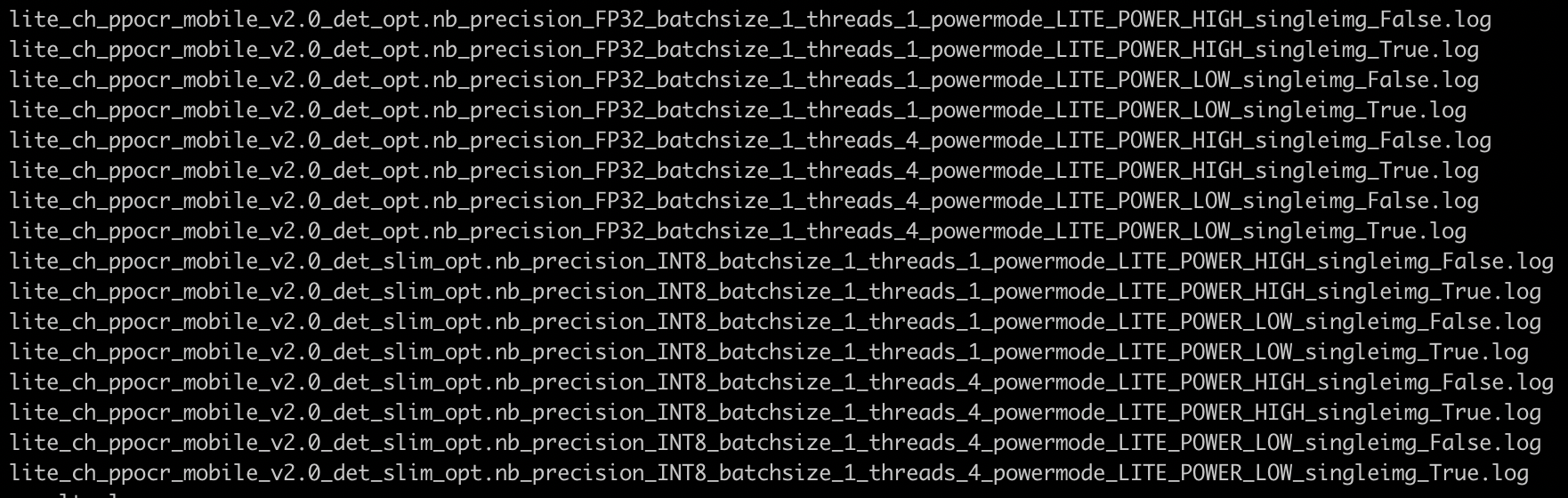

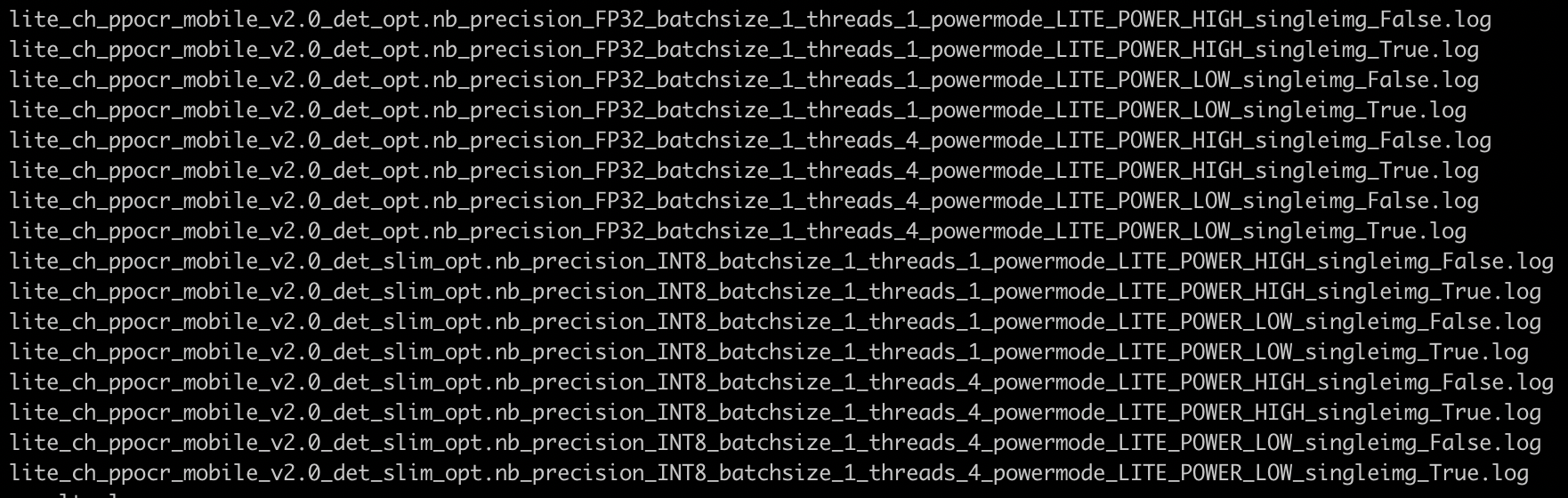

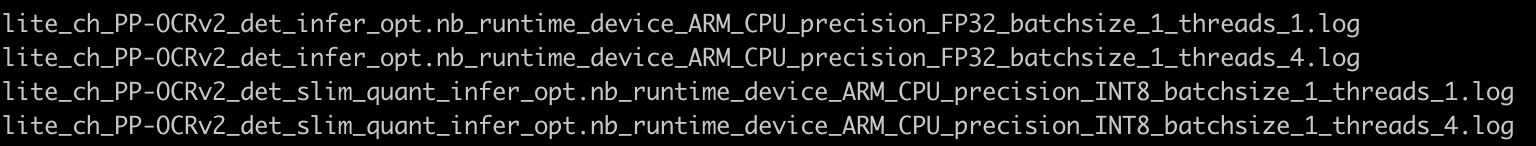

test_tipc/prepare_lite.sh (new file, mode 0 → 100644)

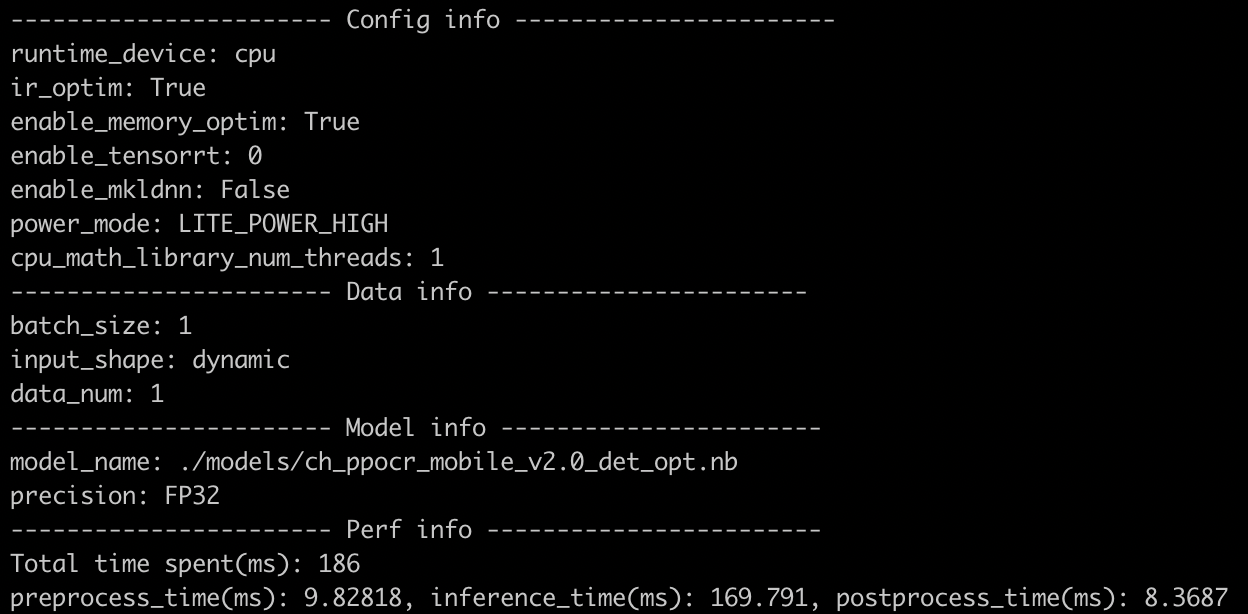

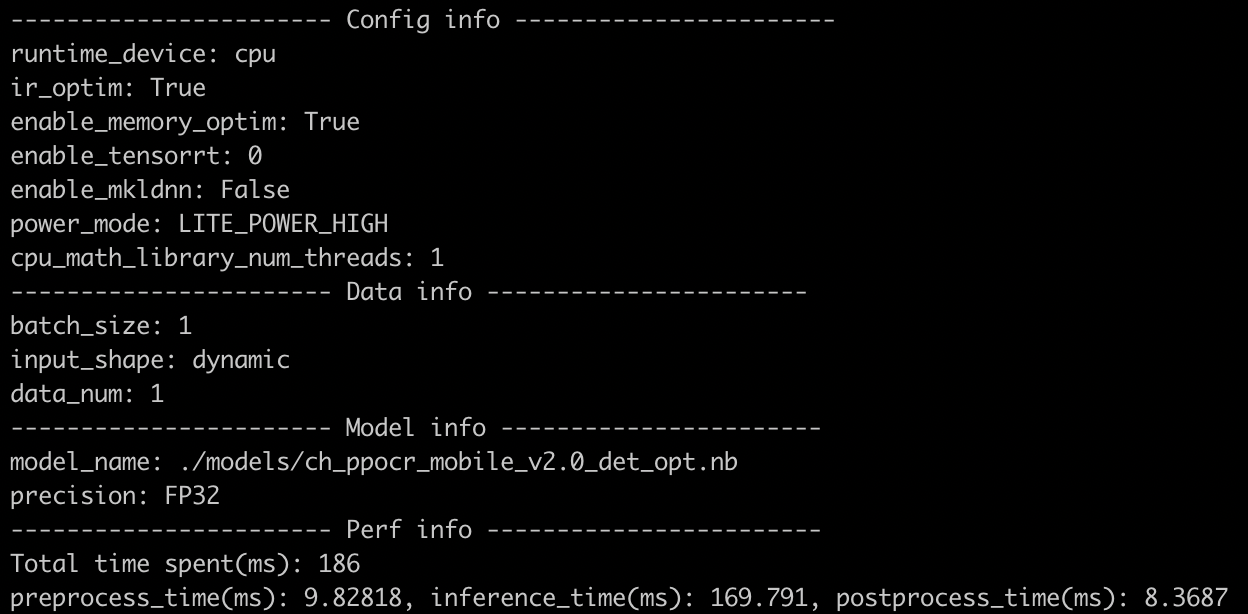

290 KB

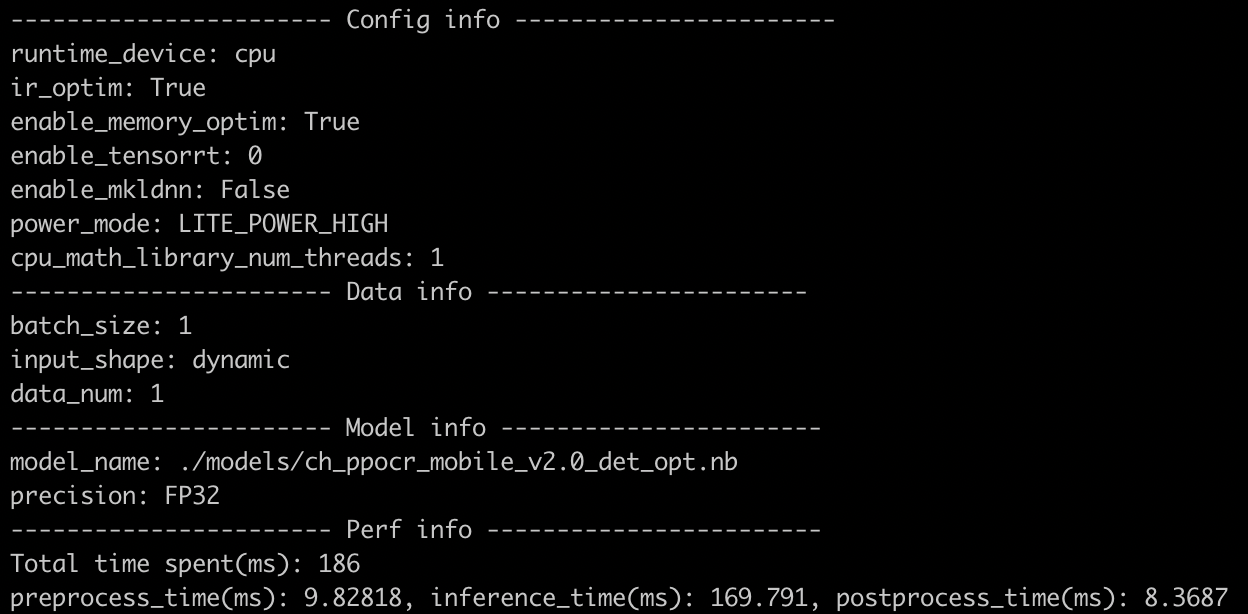

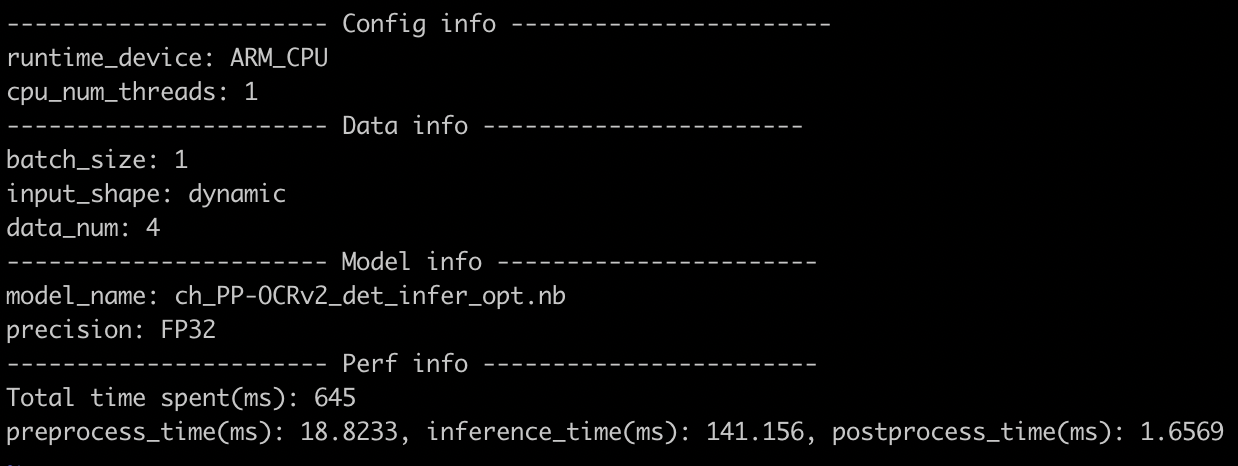

210 KB

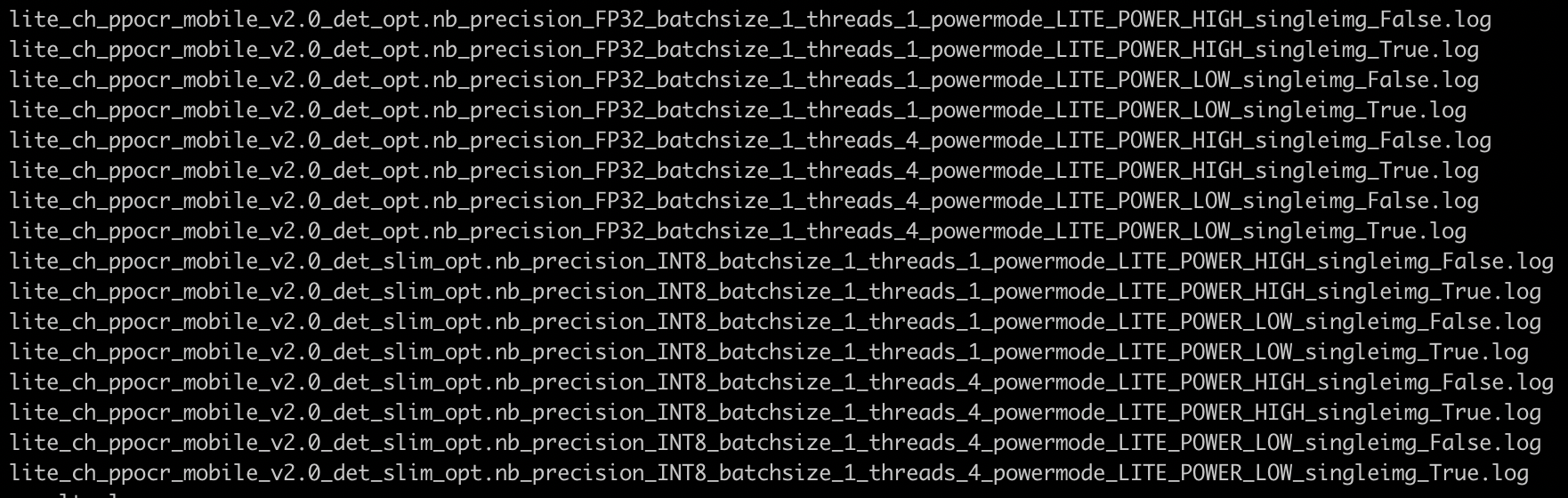

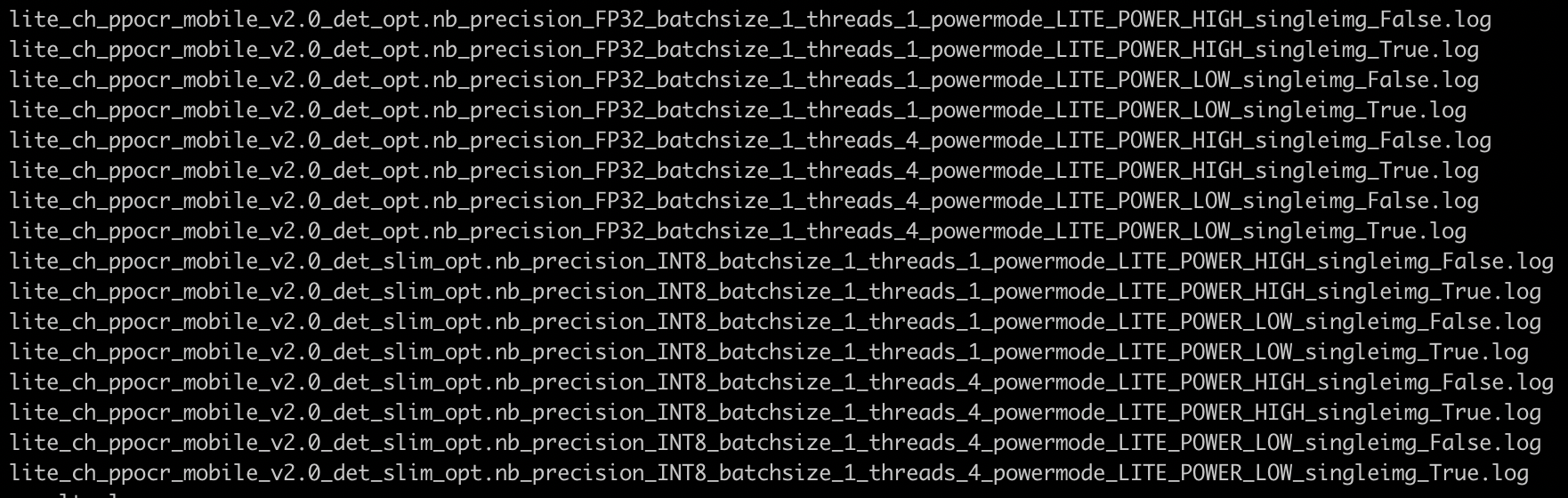

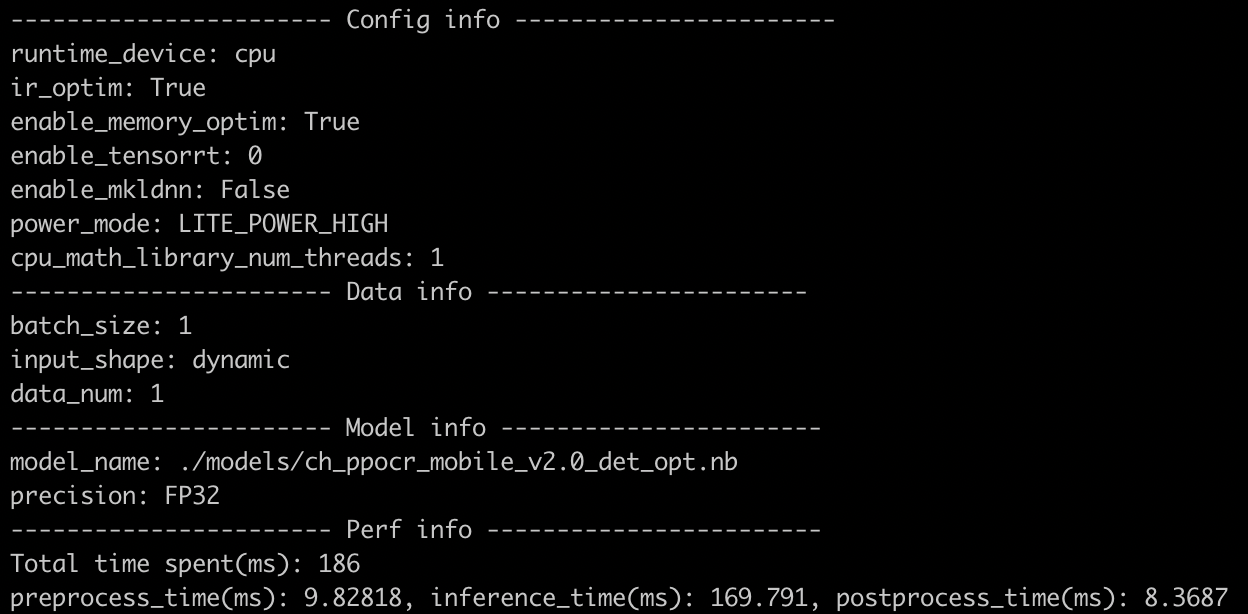

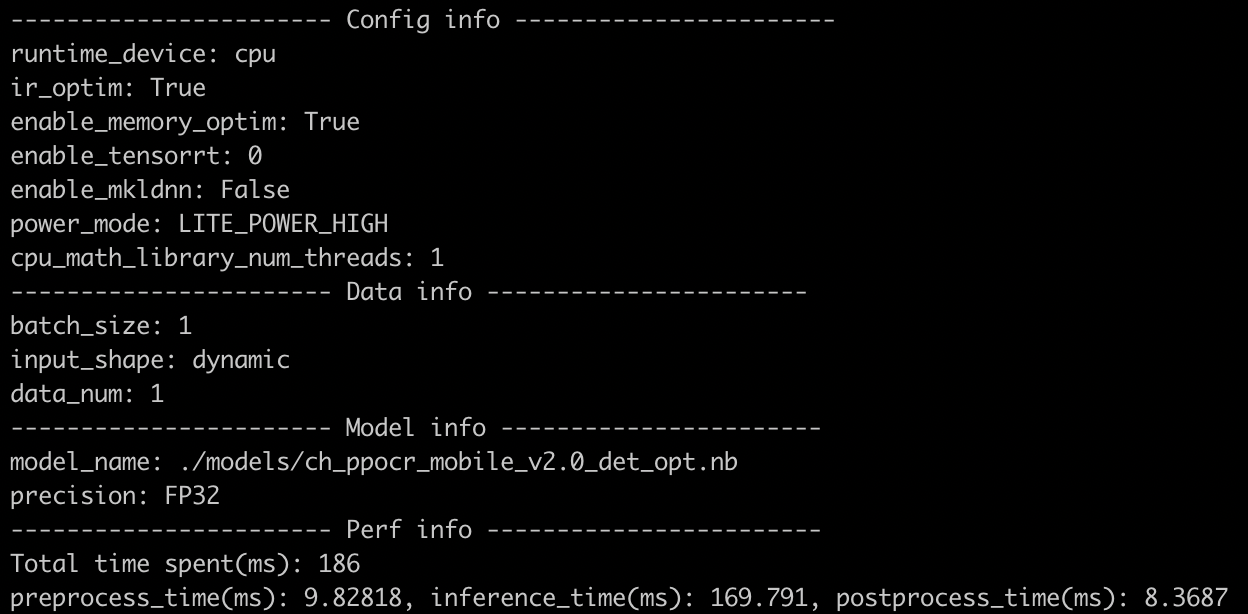

776 KB

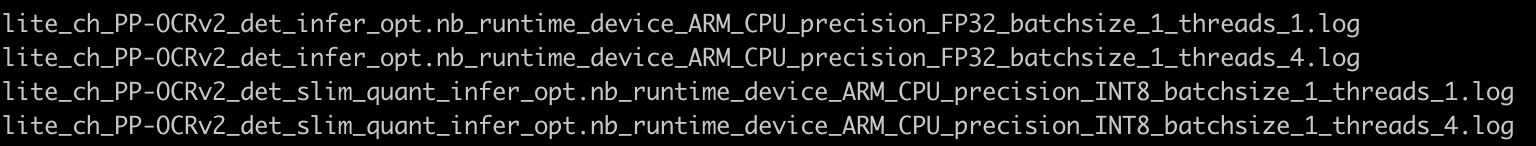

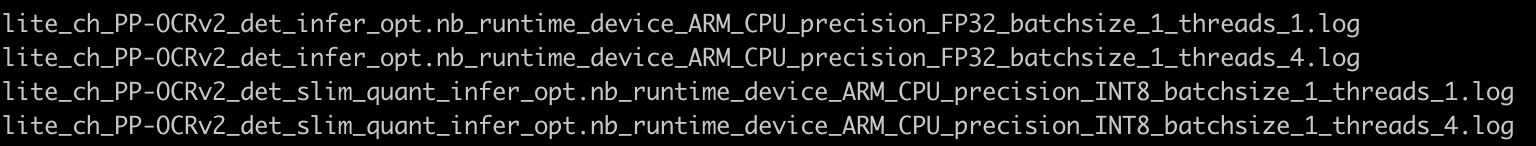

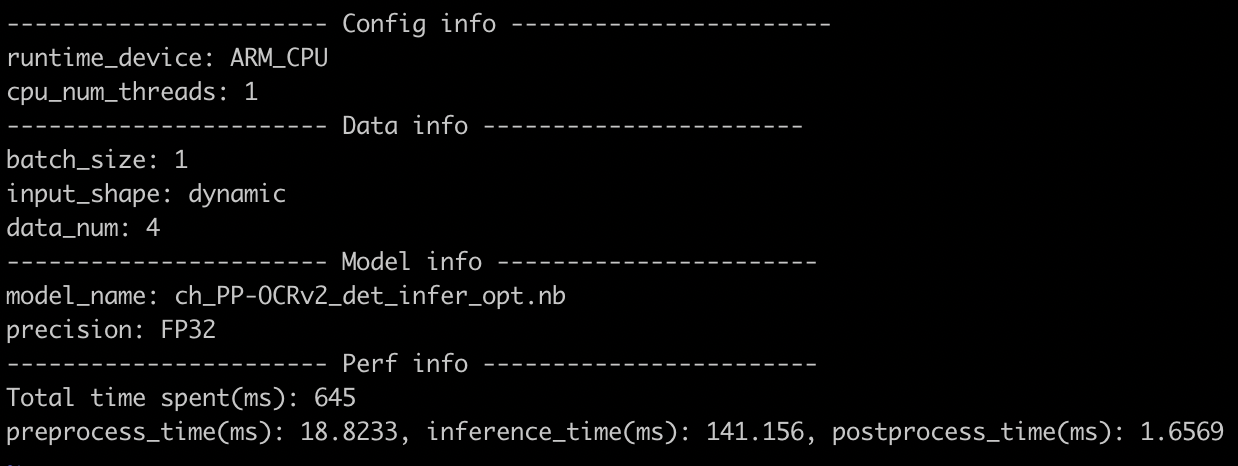

169 KB