Merge branch 'dygraph' of https://github.com/PaddlePaddle/PaddleOCR into test_v11

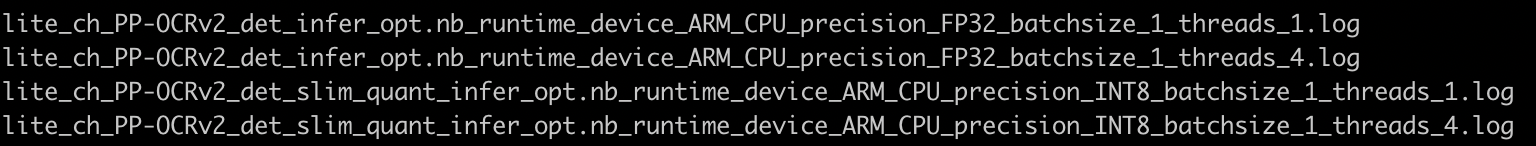

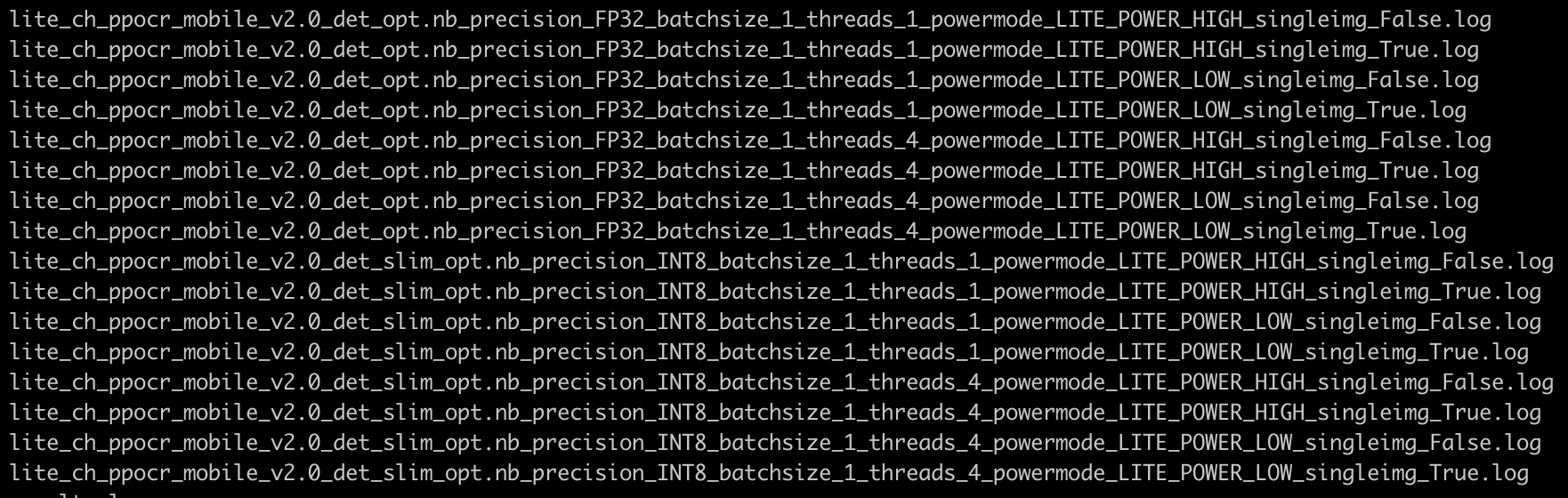

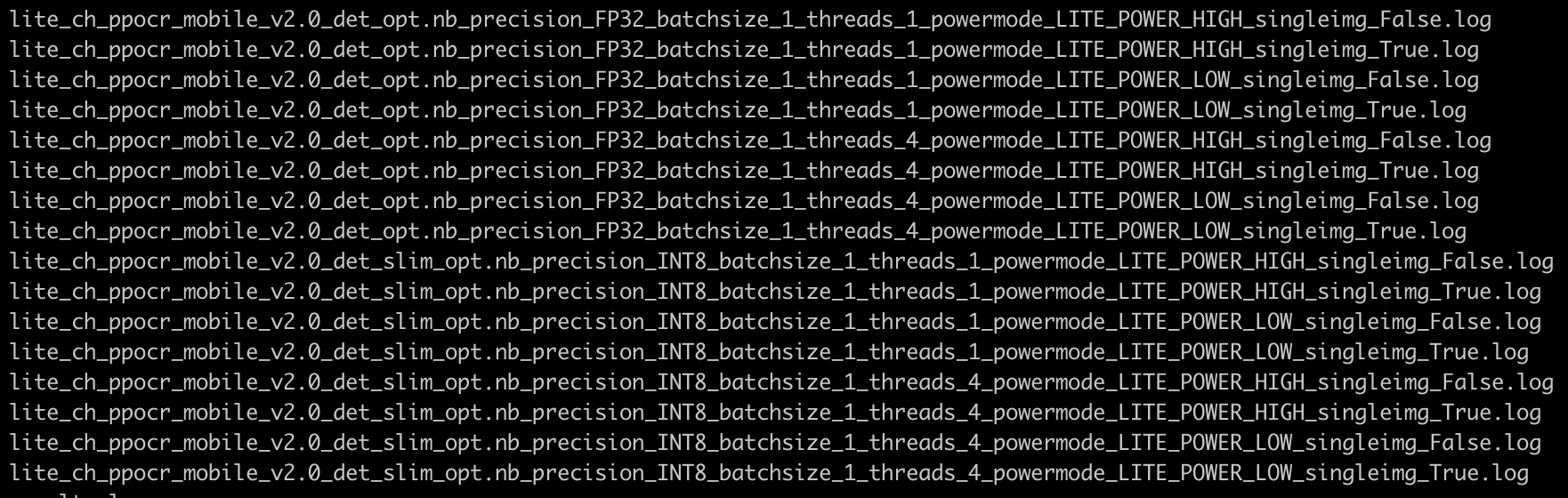

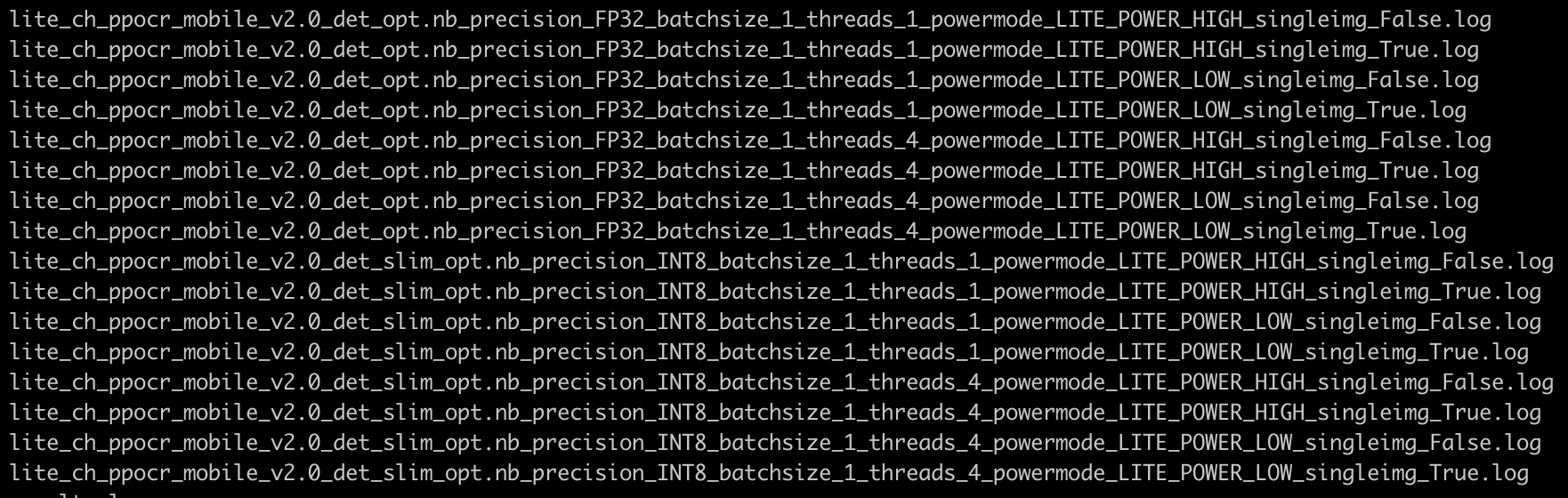

test_tipc/prepare_lite.sh

new file mode 100644