Merge branch 'PaddlePaddle:dygraph' into dygraph

doc/PaddleOCR_log.png

0 → 100644

75.5 KB

80.1 KB

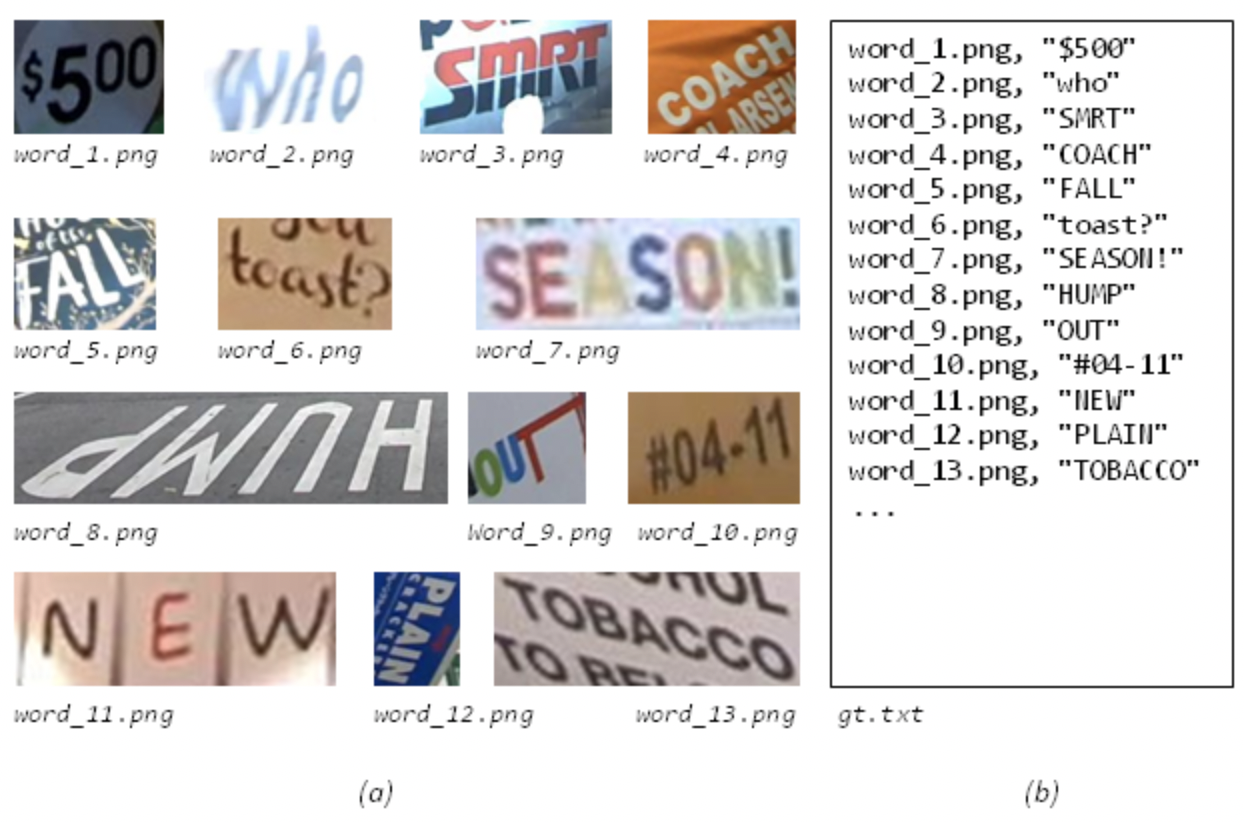

doc/datasets/icdar_rec.png

0 → 100644

921 KB