-## Introduction

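-Text Generation Inference (TGI) is a framework written in Rust and Python for deploying and serving large language models (LLMs). TGI provides high-performance inference serving for many large models, such as LLaMA, Falcon, BLOOM, Baichuan, and Qwen.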
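A minimal client sketch for a running TGI server, using only the Python standard library. It posts a JSON body with `inputs` and `parameters.max_new_tokens` to the server's `/generate` route and reads `generated_text` from the reply. The server address `http://127.0.0.1:8080` is an assumption (a common port mapping for the TGI container). The helper names `build_generate_request` and `generate` are hypothetical and are not part of TGI.

```python
import json
import urllib.request

# Assumption: a TGI server is already running and reachable at this address.
TGI_URL = "http://127.0.0.1:8080"


def build_generate_request(prompt: str, max_new_tokens: int = 64) -> dict:
    """Build the JSON body for TGI's non-streaming /generate route (hypothetical helper)."""
    return {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}


def generate(prompt: str, max_new_tokens: int = 64, base_url: str = TGI_URL) -> str:
    """POST the prompt to /generate and return the completion text (hypothetical helper)."""
    body = json.dumps(build_generate_request(prompt, max_new_tokens)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        payload = json.loads(resp.read().decode("utf-8"))
    # The non-streaming response carries the completion under "generated_text".
    return payload["generated_text"]
```

With a server running, `generate("What is deep learning?", max_new_tokens=32)` returns the model's continuation as a string. Keeping request construction in a separate function lets you inspect or test the payload without a live server.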

+

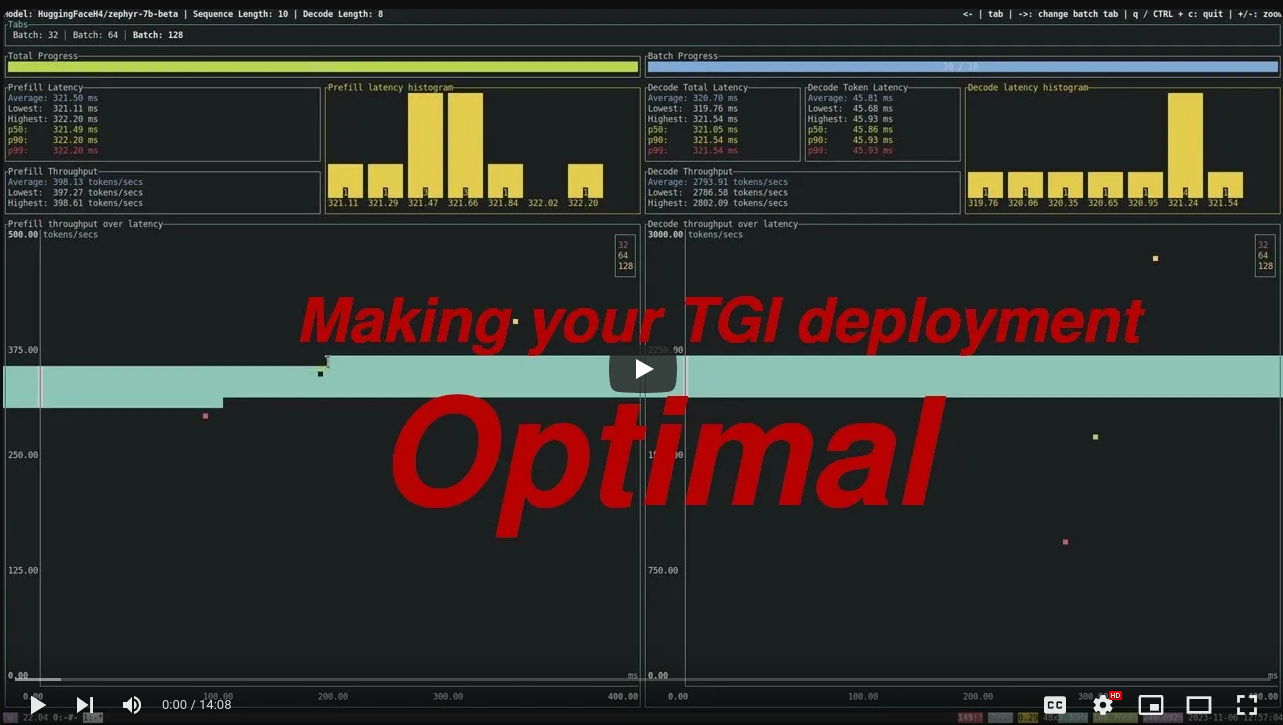

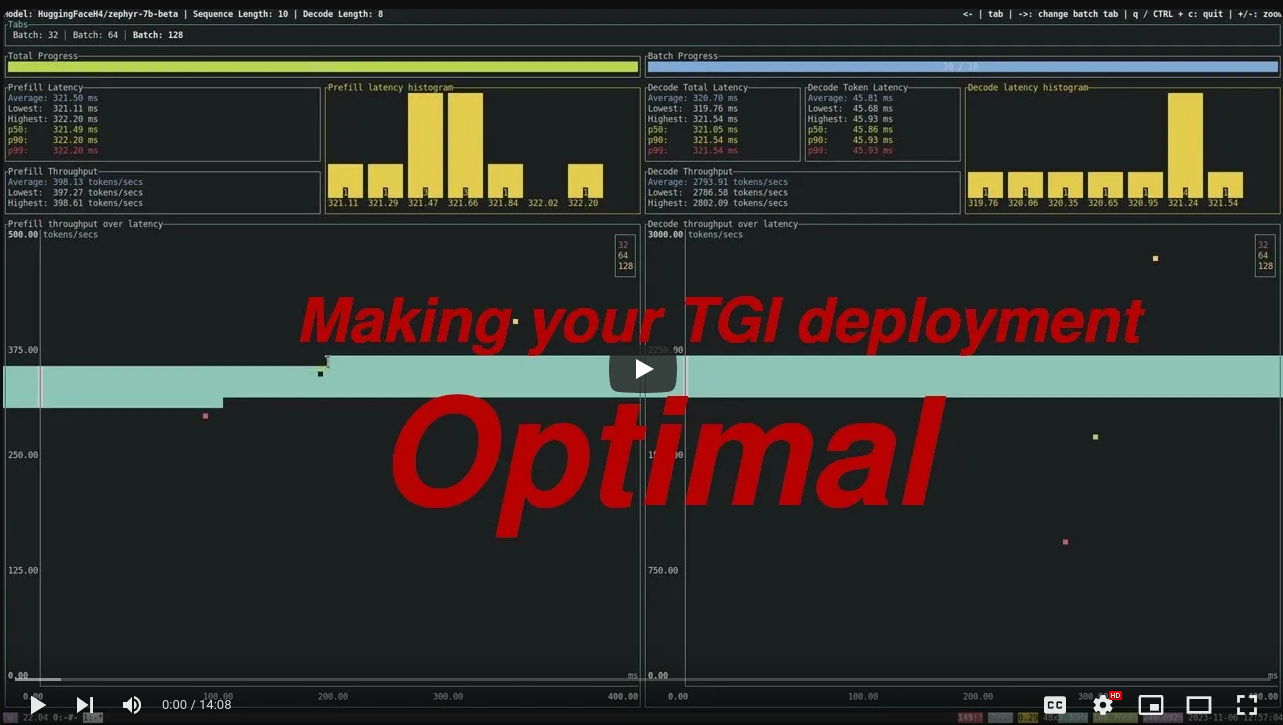


-## Supported model architectures

-| Model | Model parallelism | FP16 |
-| :----------: | :------: | :--: |
-| LLaMA | Yes | Yes |
-| LLaMA-2 | Yes | Yes |
-| LLaMA-2-GPTQ | Yes | Yes |
-| LLaMA-3 | Yes | Yes |
-| CodeLlama | Yes | Yes |
-| Qwen2 | Yes | Yes |
-| Qwen2-GPTQ | Yes | Yes |
-| Baichuan-7B | Yes | Yes |
-| Baichuan2-7B | Yes | Yes |
-| Baichuan2-13B | Yes | Yes |

+# Text Generation Inference


-## Requirements

-+ Python 3.10

-+ DTK 24.04.2

-+ torch 2.1.0
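A small pre-flight check for the Python and torch versions listed above. This is a sketch, not part of TGI. It assumes an exact match is wanted (Python 3.10.x, torch 2.1.0). It ignores any local version suffix after `+` in the torch version string. It does not check the DTK version, because this sketch assumes no portable way to query it. All function names are hypothetical.

```python
import sys

# Versions taken from the requirements list; DTK is deliberately not checked here.
REQUIRED_PYTHON = (3, 10)
REQUIRED_TORCH = "2.1.0"


def python_ok(version_info=sys.version_info) -> bool:
    """True if the major.minor Python version matches REQUIRED_PYTHON."""
    return tuple(version_info[:2]) == REQUIRED_PYTHON


def torch_ok(torch_version: str) -> bool:
    """Compare only the public version, dropping any '+local' suffix."""
    return torch_version.split("+", 1)[0] == REQUIRED_TORCH


def check_environment() -> list:
    """Return a list of human-readable problems; empty means the checks passed."""
    problems = []
    if not python_ok():
        found = f"{sys.version_info.major}.{sys.version_info.minor}"
        problems.append(f"Python {REQUIRED_PYTHON[0]}.{REQUIRED_PYTHON[1]} required, found {found}")
    try:
        import torch  # imported lazily so the check still runs without torch
    except ImportError:
        problems.append("torch is not installed")
    else:
        if not torch_ok(str(torch.__version__)):
            problems.append(f"torch {REQUIRED_TORCH} required, found {torch.__version__}")
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print("WARNING:", problem)
```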

+A Rust, Python and gRPC server for text generation inference. Used in production at [Hugging Face](https://huggingface.co) to power Hugging Chat, the Inference API and Inference Endpoints.
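Besides `/generate`, the server can stream tokens from `/generate_stream` as Server-Sent Events. Each event line starts with `data:` and carries a JSON object whose `token` field holds the token `text` and a `special` flag. The parser below is a minimal sketch that assumes this event shape. Check it against your server's OpenAPI documentation before relying on it. The function name `iter_stream_tokens` is hypothetical. The parser works on already-received lines, so it can be fed from any HTTP client.

```python
import json
from typing import Iterable, Iterator


def iter_stream_tokens(lines: Iterable[str]) -> Iterator[str]:
    """Yield token texts from /generate_stream Server-Sent Events lines.

    Assumes each event line looks like 'data:{...json...}' with a "token"
    object; blank separator lines and non-data fields are skipped, and
    special tokens (for example end-of-sequence) are dropped.
    """
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separators and other SSE fields
        event = json.loads(line[len("data:"):])
        token = event.get("token") or {}
        if token.get("special"):
            continue
        yield token.get("text", "")
```

Joining the yielded pieces with `"".join(...)` reconstructs the generated text incrementally, which is useful for printing output as it arrives.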

-### Installing from source

+

+
