"vscode:/vscode.git/clone" did not exist on "e1ef122260d015255b0a7c075fd08ed114670671"

Simplify code


Doc/Tutorial_Cpp.md

0 → 100644

Doc/Tutorial_Python.md

0 → 100644

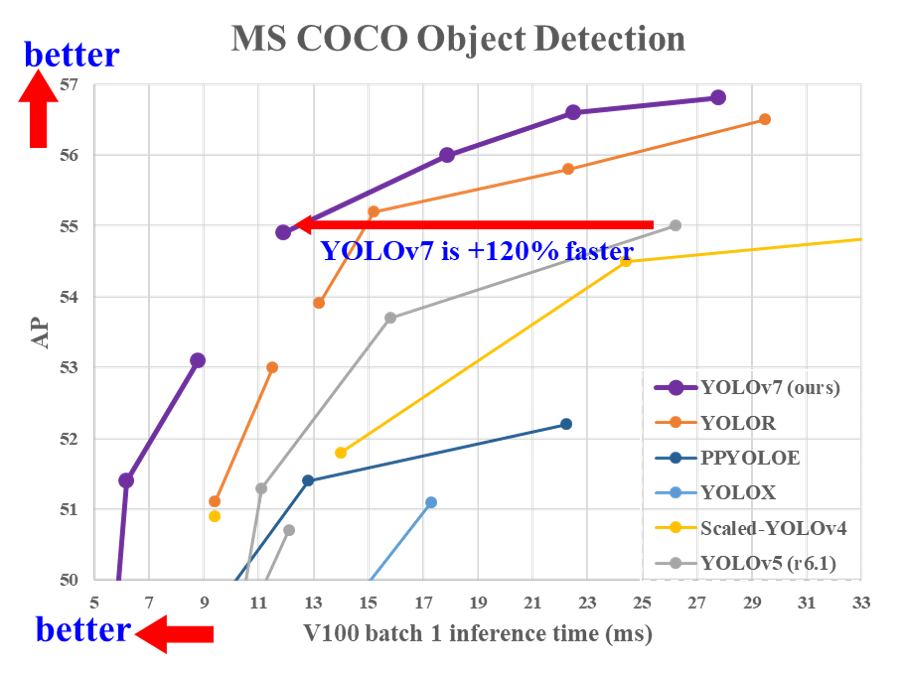

Doc/YOLOV7_01.png

0 → 100644

165 KB

File moved

File moved

Src/Sample.cpp

deleted

100644 → 0

Src/Sample.h

deleted

100644 → 0