yolov5-qat

Showing

models/hub/yolov5s6.yaml

0 → 100644

models/hub/yolov5x6.yaml

0 → 100644

models/tf.py

0 → 100644

models/yolo.py

0 → 100644

models/yolov5l.yaml

0 → 100644

models/yolov5m.yaml

0 → 100644

models/yolov5n.yaml

0 → 100644

models/yolov5s.yaml

0 → 100644

models/yolov5x.yaml

0 → 100644

pyproject.toml

0 → 100644

quantization/quantize.py

0 → 100644

quantization/rules.py

0 → 100644

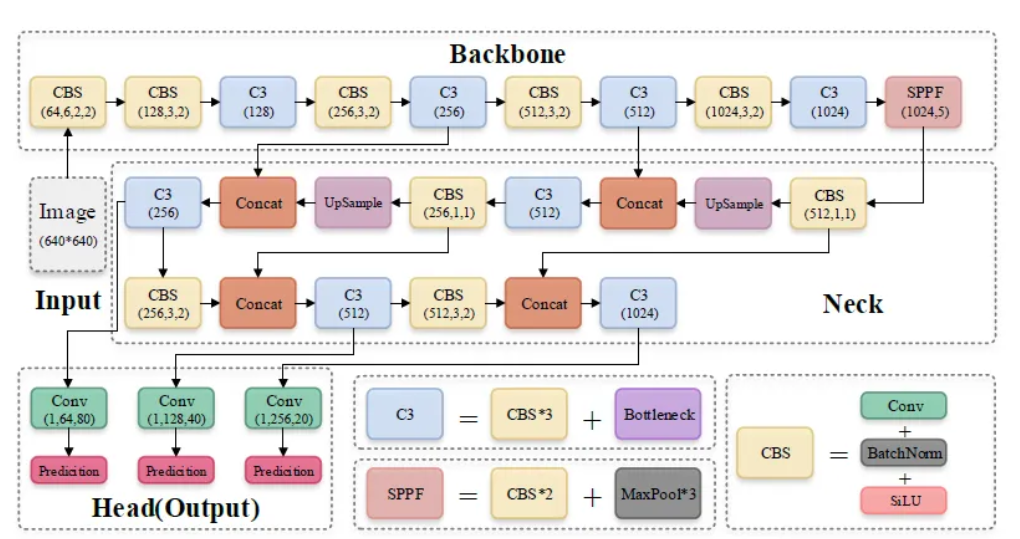

readme_imgs/image-1.png

0 → 100644

491 KB