add new files

Showing

docs/images/android4.jpg

0 → 100644

77.8 KB

docs/images/desktop1.jpg

0 → 100644

19.3 KB

docs/images/desktop2.jpg

0 → 100644

32 KB

docs/images/desktop3.jpg

0 → 100644

25.3 KB

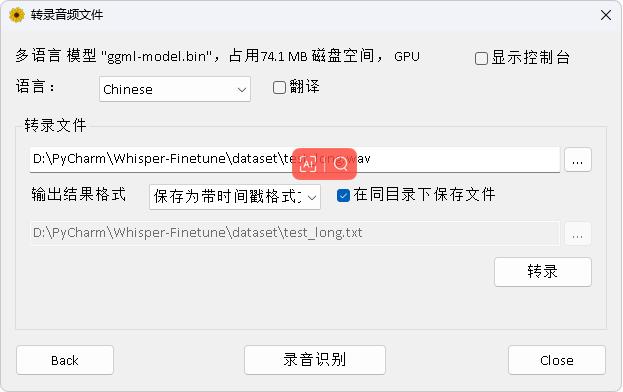

docs/images/gui.jpg

0 → 100644

18 KB

docs/images/qq.png

0 → 100644

50.4 KB

docs/images/web.jpg

0 → 100644

23.9 KB

evaluation.py

0 → 100644

finetune.py

0 → 100644

infer.py

0 → 100644

infer_ct2.py

0 → 100644

infer_gui.py

0 → 100644

infer_server.py

0 → 100644

merge_lora.py

0 → 100644

metrics/__init__.py

0 → 100644

metrics/cer.py

0 → 100644

metrics/wer.py

0 → 100644

requirements.txt

0 → 100644

| numpy>=1.23.1 | ||

| soundfile>=0.12.1 | ||

| librosa>=0.10.0 | ||

| dataclasses>=0.6 | ||

| transformers>=4.39.3 | ||

| bitsandbytes>=0.41.0 | ||

| datasets>=2.11.0 | ||

| evaluate>=0.4.0 | ||

| ctranslate2>=3.21.0 | ||

| faster-whisper>=0.10.0 | ||

| jiwer>=2.5.1 | ||

| peft>=0.6.2 | ||

| accelerate>=0.21.0 | ||

| zhconv>=1.4.2 | ||

| tqdm>=4.62.1 | ||

| soundcard>=0.4.2 | ||

| uvicorn>=0.21.1 | ||

| fastapi>=0.95.1 | ||

| starlette>=0.26.1 | ||

| tensorboardX>=2.2 |

run.sh

0 → 100644

static/index.css

0 → 100644