wan2.1

modified/envs.py

0 → 100644

modified/fix.sh

0 → 100644

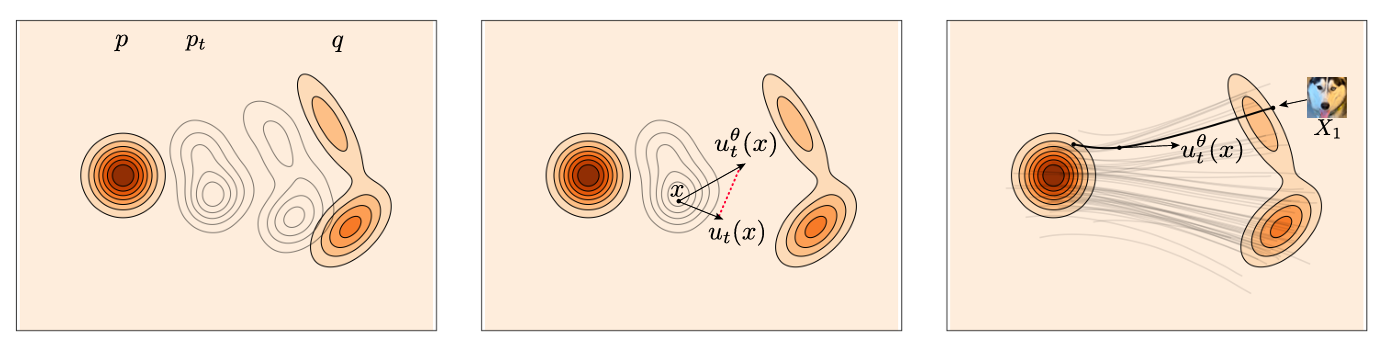

readme_imgs/alg.png

0 → 100644

189 KB

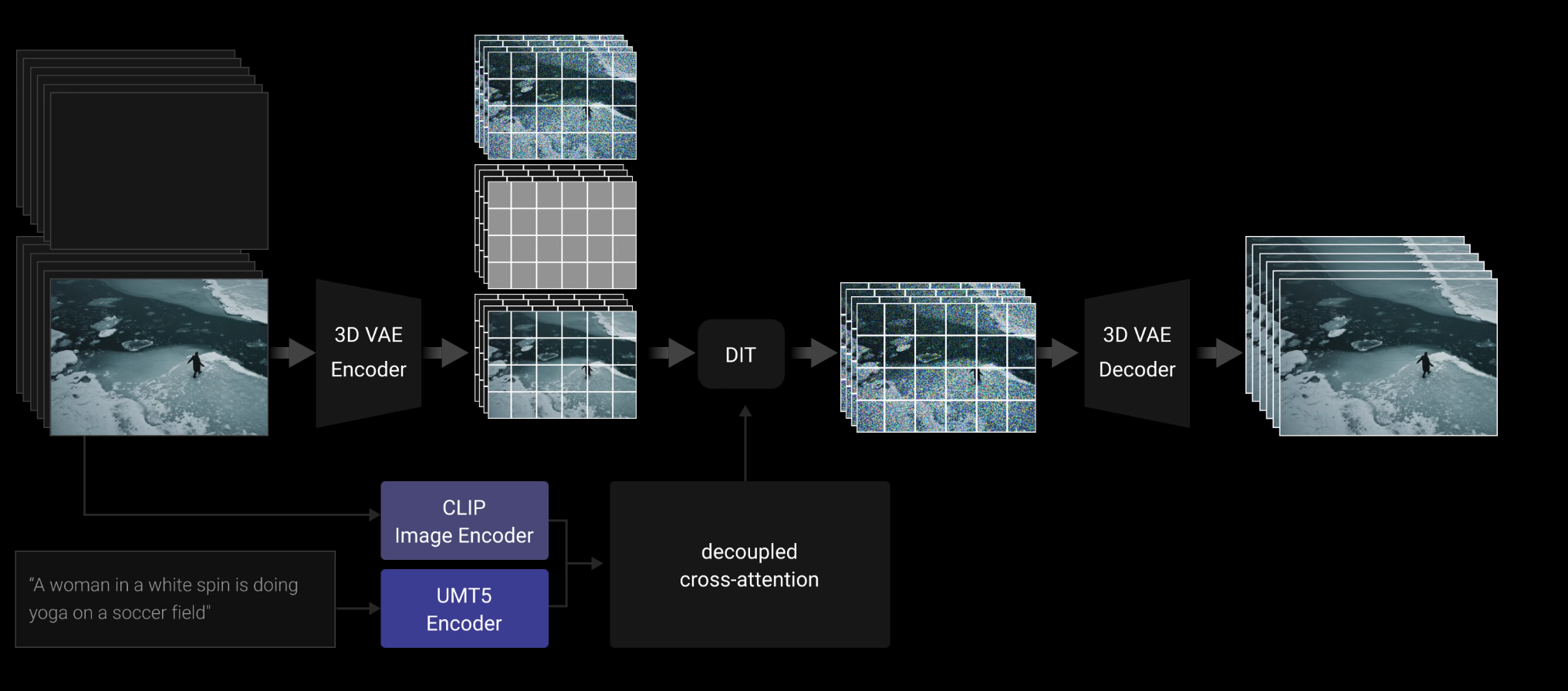

readme_imgs/arch.png

0 → 100644

694 KB

readme_imgs/i2v-14B_480.gif

0 → 100644

9.32 MB

readme_imgs/i2v-14B_720.gif

0 → 100644

readme_imgs/t2i-14B.png

0 → 100644

1.21 MB

readme_imgs/t2v-1.3B.gif

0 → 100644

7.9 MB

readme_imgs/t2v-14B.gif

0 → 100644

requirements.txt

0 → 100644

    # torch>=2.4.0
    # torchvision>=0.19.0
    opencv-python>=4.9.0.80
    diffusers>=0.31.0
    transformers>=4.49.0
    tokenizers>=0.20.3
    accelerate>=1.1.1
    tqdm
    imageio
    easydict
    ftfy
    dashscope
    imageio-ffmpeg
    # flash_attn
    gradio>=5.0.0
    numpy==1.24.4
    yunchang
    DistVAE
    \ No newline at end of file

tests/README.md

0 → 100644

tests/test.sh

0 → 100644

wan/__init__.py

0 → 100644

File added

wan/configs/__init__.py

0 → 100644

File added