Initial commit

File added

images/demo.mp4

0 → 100644

File added

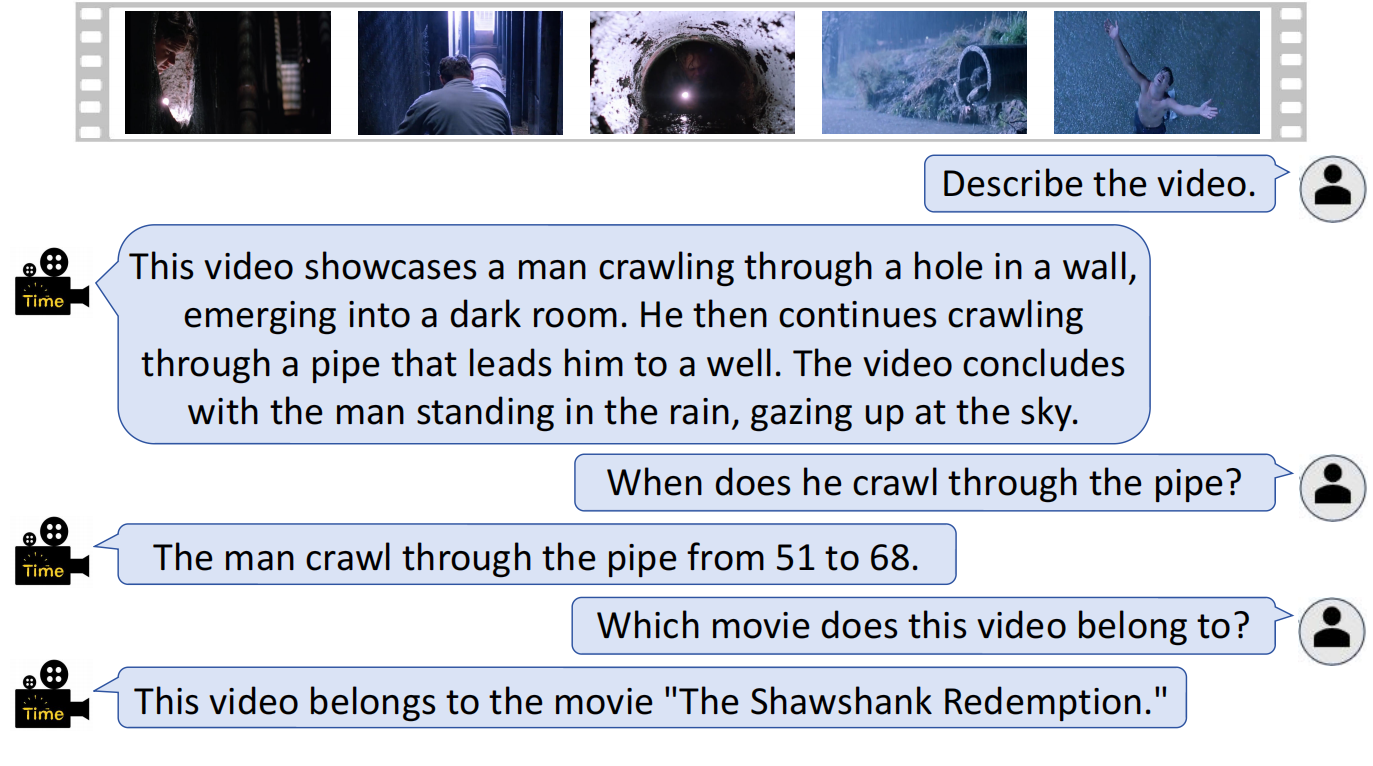

images/ex.png

0 → 100644

406 KB

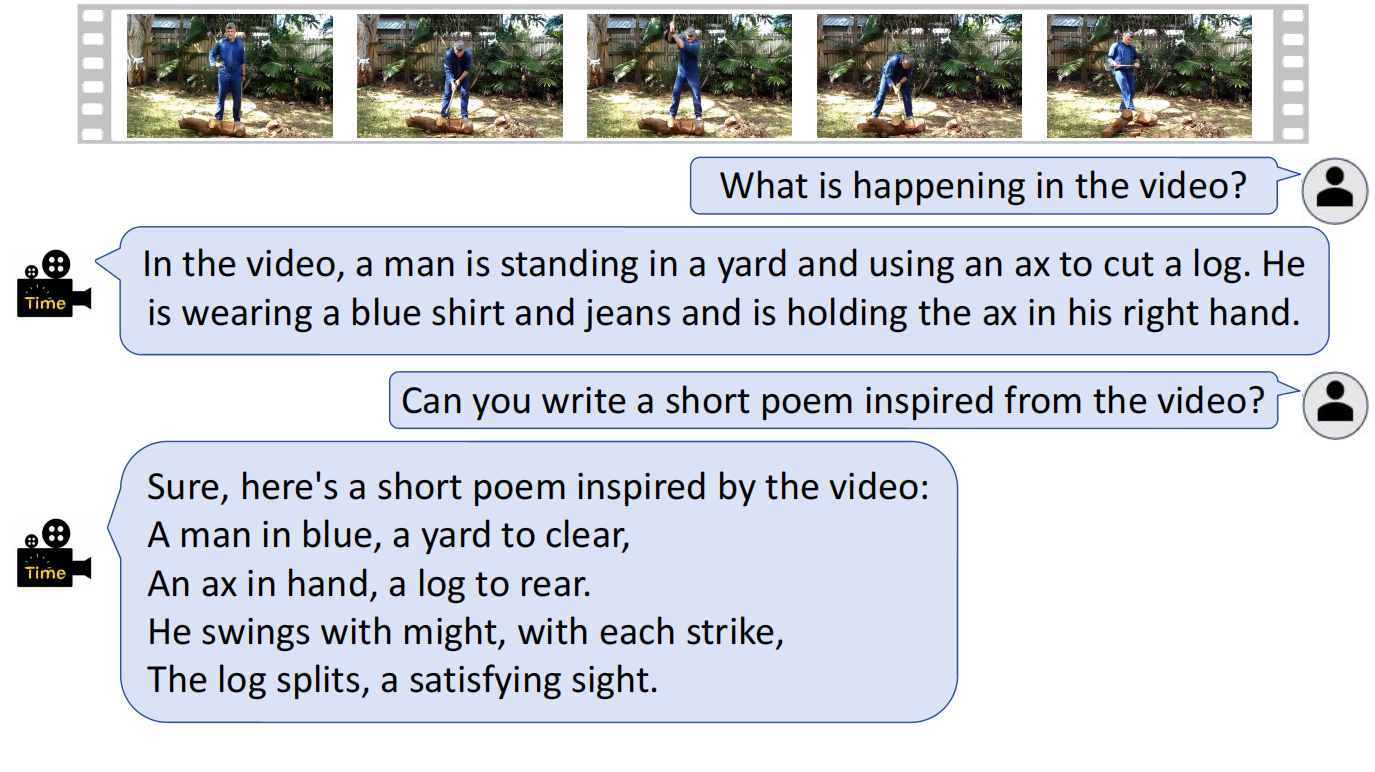

images/ex1.png

0 → 100644

487 KB

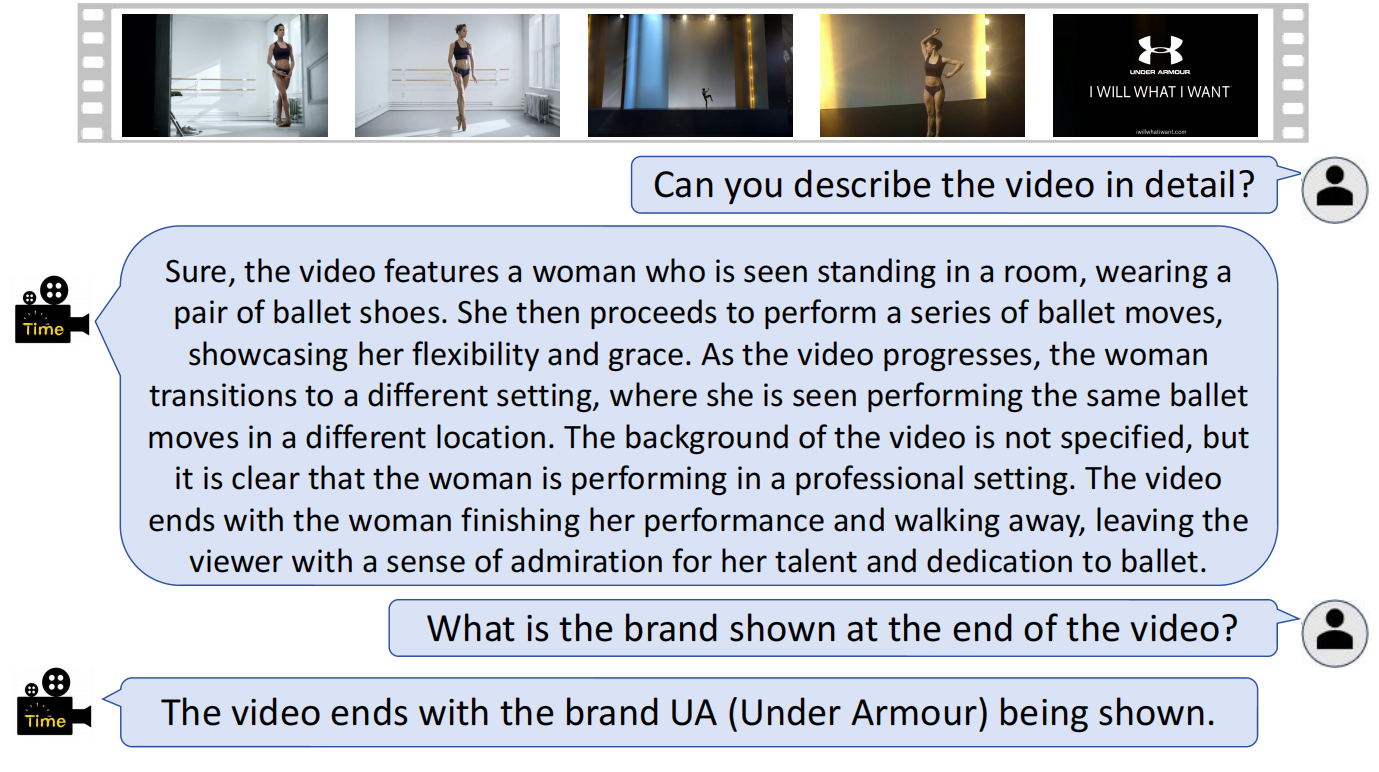

images/ex2.png

0 → 100644

306 KB

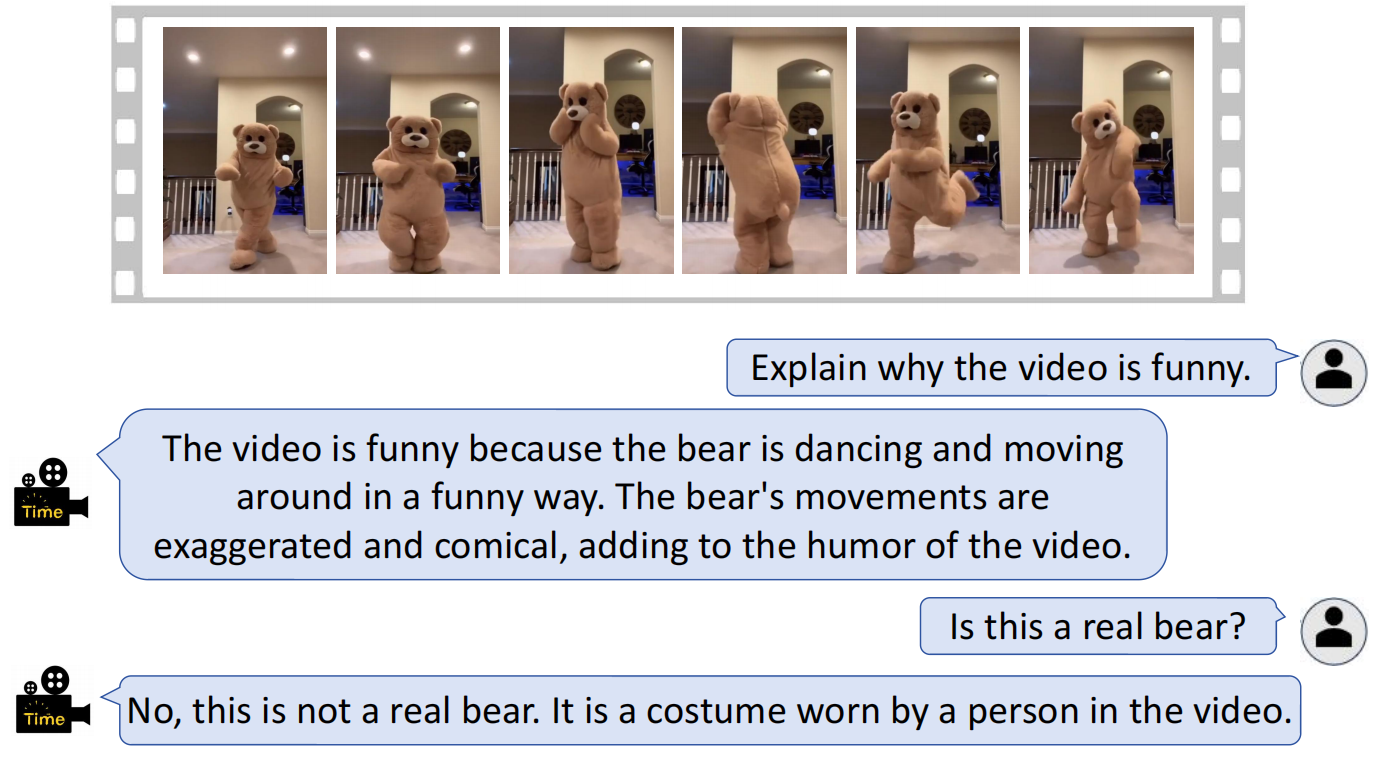

images/ex3.png

0 → 100644

426 KB

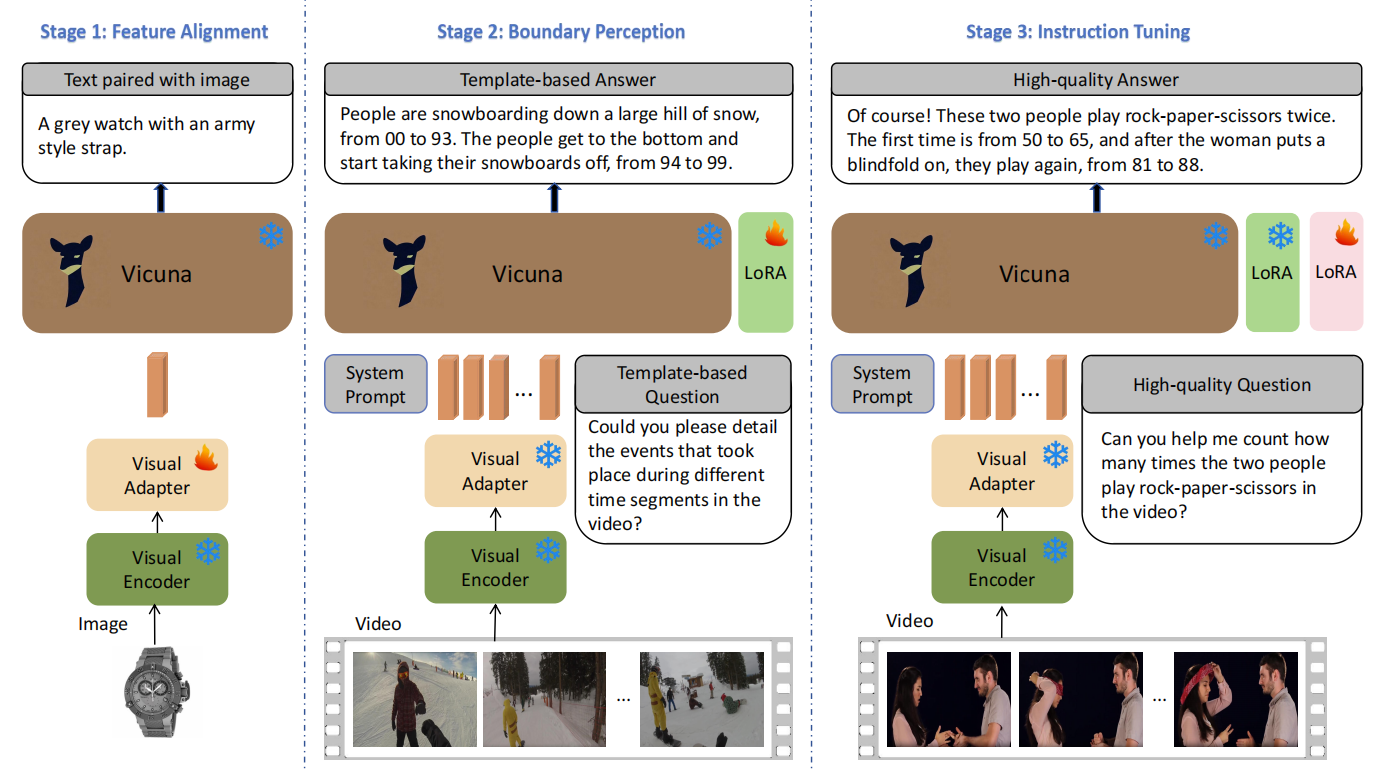

images/framework.png

0 → 100644

325 KB

model.properties

0 → 100644

requirements.txt

0 → 100644

```text
# torch
# flash-attn
# torchvision
# deepspeed
decord
easydict
einops
gradio
numpy
pandas>=2.0.3
peft>=0.4.0
Pillow
tqdm
transformers==4.31.0
git+https://github.com/openai/CLIP.git
sentencepiece
protobuf
wandb
ninja
huggingface_hub
```
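pip treats lines starting with `#` in a requirements file as comments, so `torch`, `flash-attn`, `torchvision`, and `deepspeed` are not installed by `pip install -r requirements.txt`. One plausible reading is that they are meant to be installed by hand (for example, to match a particular CUDA build); that is an assumption, since the commit does not say so. The sketch below splits the file as added here into active and commented-out entries and lists the version-pinned ones; the helper name `split_requirements` is hypothetical.

```python
from typing import List, Tuple

# Contents of requirements.txt as added in this commit.
REQUIREMENTS_TXT = """\
# torch
# flash-attn
# torchvision
# deepspeed
decord
easydict
einops
gradio
numpy
pandas>=2.0.3
peft>=0.4.0
Pillow
tqdm
transformers==4.31.0
git+https://github.com/openai/CLIP.git
sentencepiece
protobuf
wandb
ninja
huggingface_hub
"""


def split_requirements(text: str) -> Tuple[List[str], List[str]]:
    """Return (active, commented_out) requirement specifiers."""
    active: List[str] = []
    commented: List[str] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("#"):
            # pip ignores this line; keep the package name it mentions.
            name = line.lstrip("#").strip()
            if name:
                commented.append(name)
        else:
            active.append(line)
    return active, commented


active, commented = split_requirements(REQUIREMENTS_TXT)
# Entries carrying an explicit version constraint.
pinned = [spec for spec in active if "==" in spec or ">=" in spec]

print("not installed by pip:", commented)
print("pip-installable entries:", len(active))
print("version-constrained:", pinned)
```

Of the active entries, only `transformers` is pinned to an exact version; `pandas` and `peft` set minimums.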

scripts/stage1.sh

0 → 100644

scripts/stage1_glm.sh

0 → 100755

scripts/stage2.sh

0 → 100644

scripts/stage2_glm.sh

0 → 100755

scripts/stage3.sh

0 → 100644

scripts/stage3_glm.sh

0 → 100755
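New files are listed with the Git mode they were committed with: `100644` is a regular non-executable file and `100755` a regular executable one. Here the `*_glm.sh` scripts are executable while `stage1.sh`, `stage2.sh`, and `stage3.sh` are not, so the latter would have to be run through `bash` (or first made executable with `chmod +x`). The short check below decodes the modes shown above; the run command it prints is illustrative, not taken from the repository.

```python
import stat

# Git modes shown for the new shell scripts in this commit ("0 → <mode>").
SCRIPT_MODES = {
    "scripts/stage1.sh": "100644",
    "scripts/stage1_glm.sh": "100755",
    "scripts/stage2.sh": "100644",
    "scripts/stage2_glm.sh": "100755",
    "scripts/stage3.sh": "100644",
    "scripts/stage3_glm.sh": "100755",
}


def is_executable(git_mode: str) -> bool:
    """Return True if an octal Git file mode is a regular file with owner-execute set."""
    mode = int(git_mode, 8)
    # 100644 and 100755 are both regular files (S_IFREG); only 755 sets the x bits.
    return stat.S_ISREG(mode) and bool(mode & stat.S_IXUSR)


for path, mode in SCRIPT_MODES.items():
    how = f"./{path}" if is_executable(mode) else f"bash {path}"
    print(f"{mode}  {path:<24} run with: {how}")
```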

scripts/zero2.json

0 → 100755
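`scripts/zero2.json` is listed without its contents. Given its name and the commented-out `deepspeed` entry in `requirements.txt`, it is most likely a DeepSpeed ZeRO stage-2 configuration, but that is an assumption. The sketch below shows what such a file commonly contains, using standard DeepSpeed config keys; none of the values come from the actual file. The `"auto"` values are only filled in when the config is passed through the Hugging Face Trainer integration (the `deepspeed` training argument).

```python
import json

# Hypothetical ZeRO stage-2 config for illustration only; the real
# scripts/zero2.json is not shown in this commit view.
zero2_config = {
    "train_batch_size": "auto",
    "train_micro_batch_size_per_gpu": "auto",
    "gradient_accumulation_steps": "auto",
    "gradient_clipping": "auto",
    "bf16": {"enabled": "auto"},
    "fp16": {"enabled": "auto"},
    "zero_optimization": {
        "stage": 2,                    # partition optimizer states and gradients
        "overlap_comm": True,          # overlap gradient reduction with backward
        "contiguous_gradients": True,  # copy gradients into a contiguous buffer
    },
}

text = json.dumps(zero2_config, indent=2)
print(text)

# Round-trip check: the serialized JSON parses back to the same settings.
loaded = json.loads(text)
print("ZeRO stage:", loaded["zero_optimization"]["stage"])
```

Note that `zero2.json` was committed with mode `100755`; the execute bit has no effect on a JSON file that is only read by the training code.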