v1

Showing

monotonic_align/core.pyx

0 → 100644

File added

monotonic_align/setup.py

0 → 100644

preprocess.py

0 → 100644

requirements.txt

0 → 100644

| Cython | ||

| librosa==0.9.1 | ||

| matplotlib | ||

| numpy==1.24.0 | ||

| phonemizer | ||

| scipy | ||

| tensorboard | ||

| Unidecode |

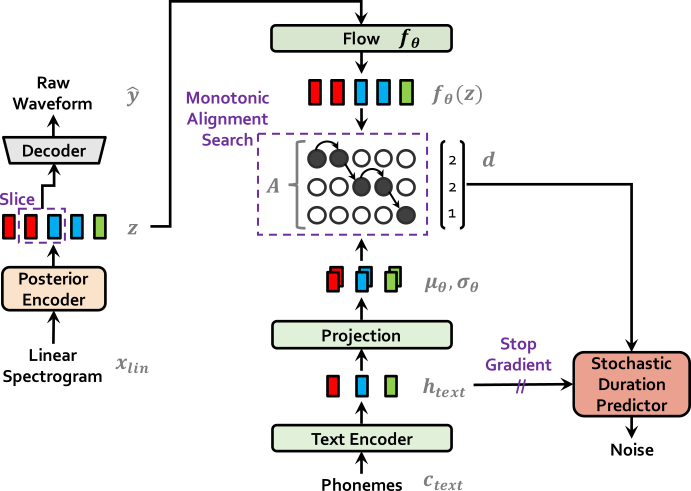

resources/fig_1a.png

0 → 100644

62.6 KB

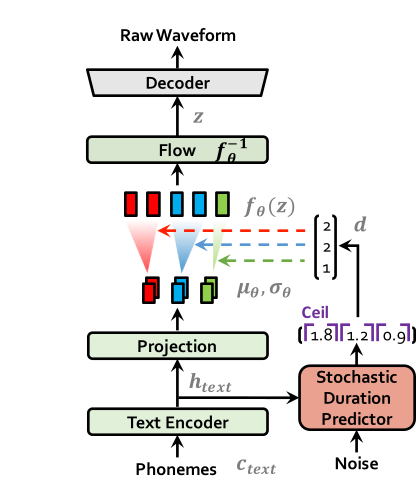

resources/fig_1b.png

0 → 100644

35.3 KB

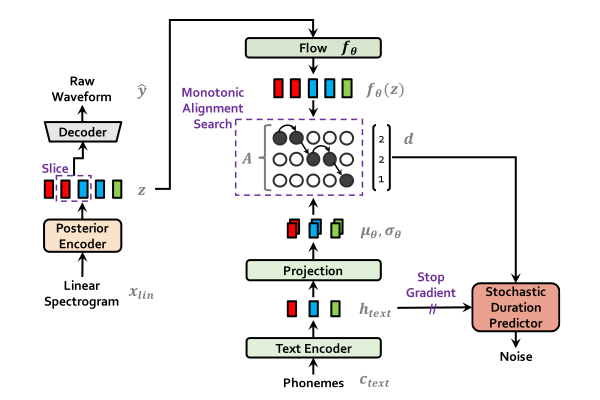

resources/training.png

0 → 100644

45.2 KB

run_multi.sh

0 → 100644

run_single.sh

0 → 100644

text/LICENSE

0 → 100644

text/__init__.py

0 → 100644

File added

File added

File added

text/cleaners.py

0 → 100644

text/symbols.py

0 → 100644

train.py

0 → 100644

train_ms.py

0 → 100644

transforms.py

0 → 100644