veros

pyproject.toml

0 → 100755

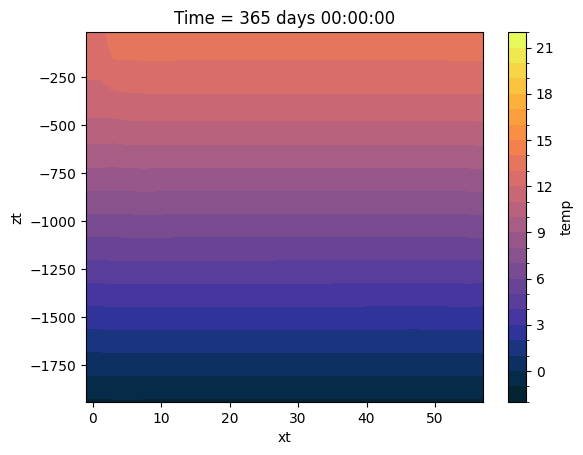

readme_imgs/image-1.png

0 → 100644

30.5 KB

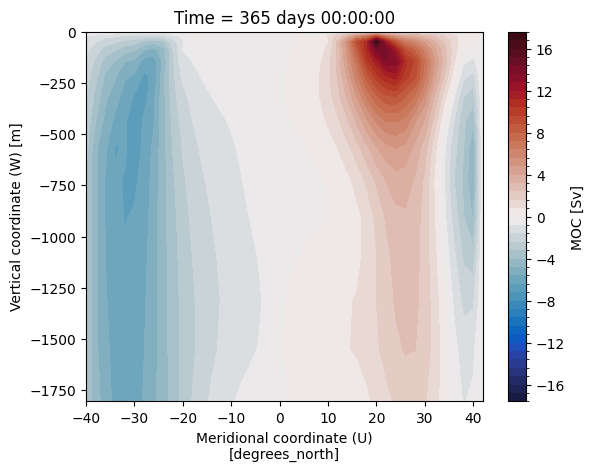

readme_imgs/image-3.png

0 → 100644

46.6 KB

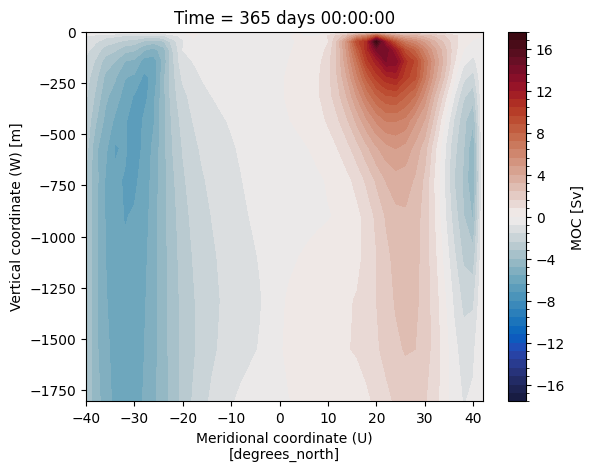

readme_imgs/image-4.png

0 → 100644

46.7 KB

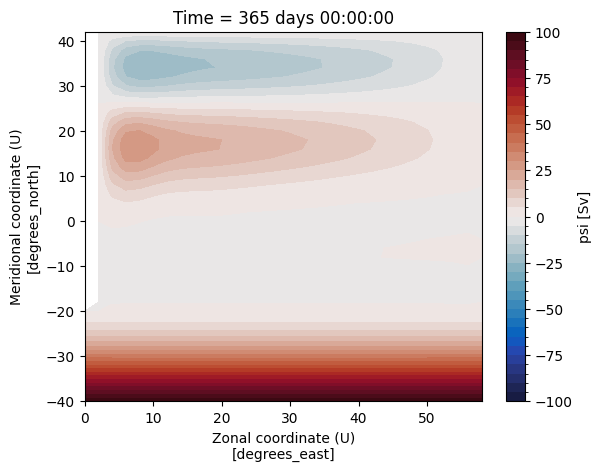

readme_imgs/image-5.png

0 → 100644

20.2 KB

readme_imgs/image-6.png

0 → 100644

20.2 KB

readme_imgs/image-7.png

0 → 100644

46.2 KB

readme_imgs/image-8.png

0 → 100644

45.2 KB

readme_imgs/image.png

0 → 100644

30.5 KB

requirements.txt

0 → 100755

requirements_jax.txt

0 → 100755

run_benchmarks.py

0 → 100755

setup.cfg

0 → 100755

setup.py

0 → 100755

test/cli_test.py

0 → 100755

test/conftest.py

0 → 100755