Initial commit

README.md

0 → 100644

README_vary.md

0 → 100644

Vary_paper.pdf

0 → 100644

assets/car_number.png

0 → 100644

5.67 KB

assets/logo.jpg

0 → 100644

321 KB

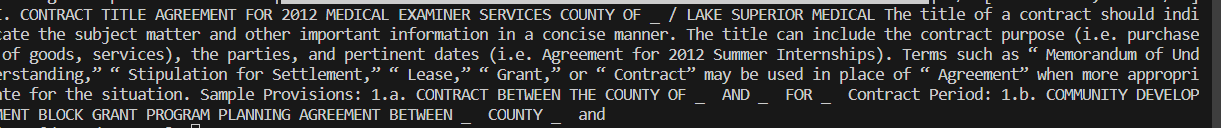

assets/ocr_cn.png

0 → 100644

66 KB

assets/ocr_en.png

0 → 100644

22.4 KB

assets/pic.jpg

0 → 100644

113 KB

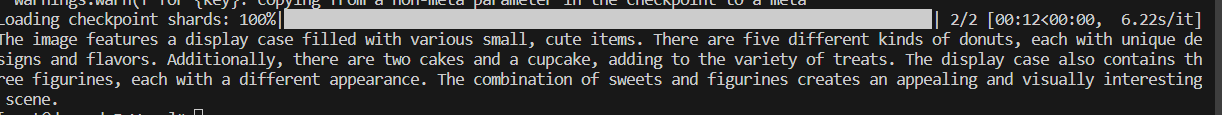

assets/pic_result.png

0 → 100644

16.2 KB

cache/vary.png

0 → 100644

597 KB