"configs/datasets/siqa/siqa_ppl_7845b0.py" did not exist on "7d346000bb8f1f7611f88dc8e003bdf8c9ae3ece"

update uni-fold

File added

finetune_monomer.sh

0 → 100755

finetune_multimer.sh

0 → 100755

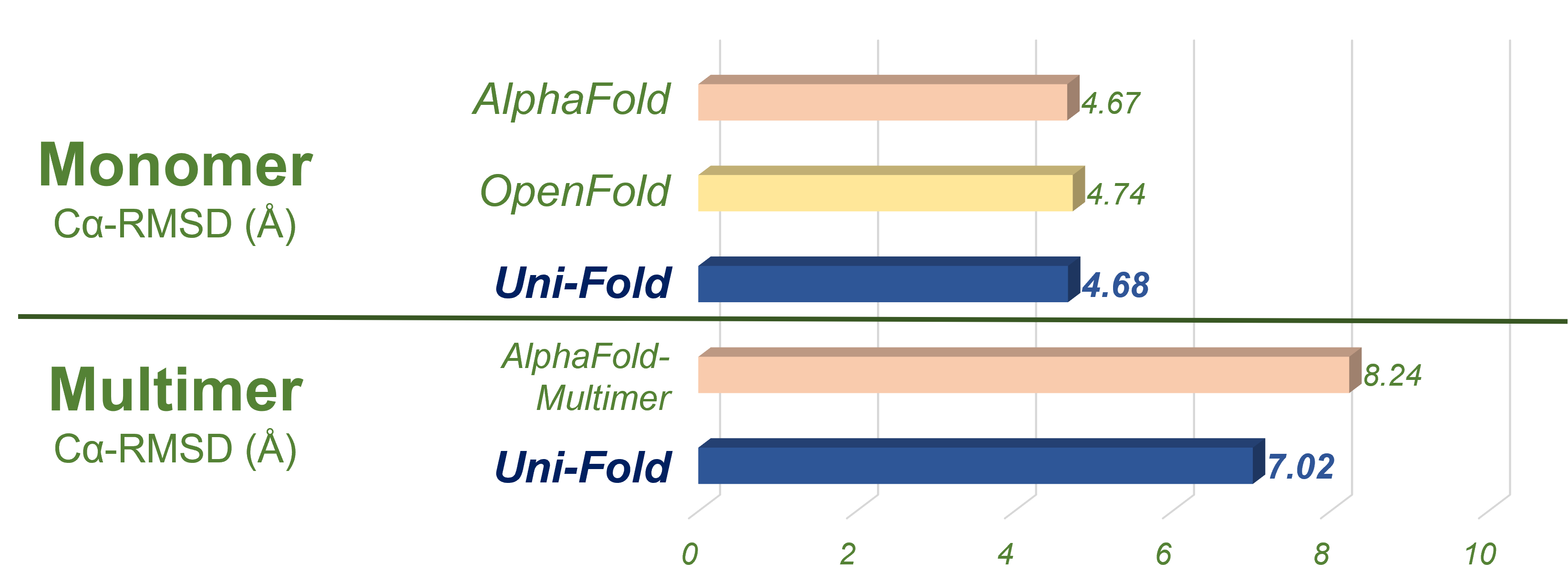

img/accuracy.png

0 → 100755

119 KB

img/case.png

0 → 100755

914 KB

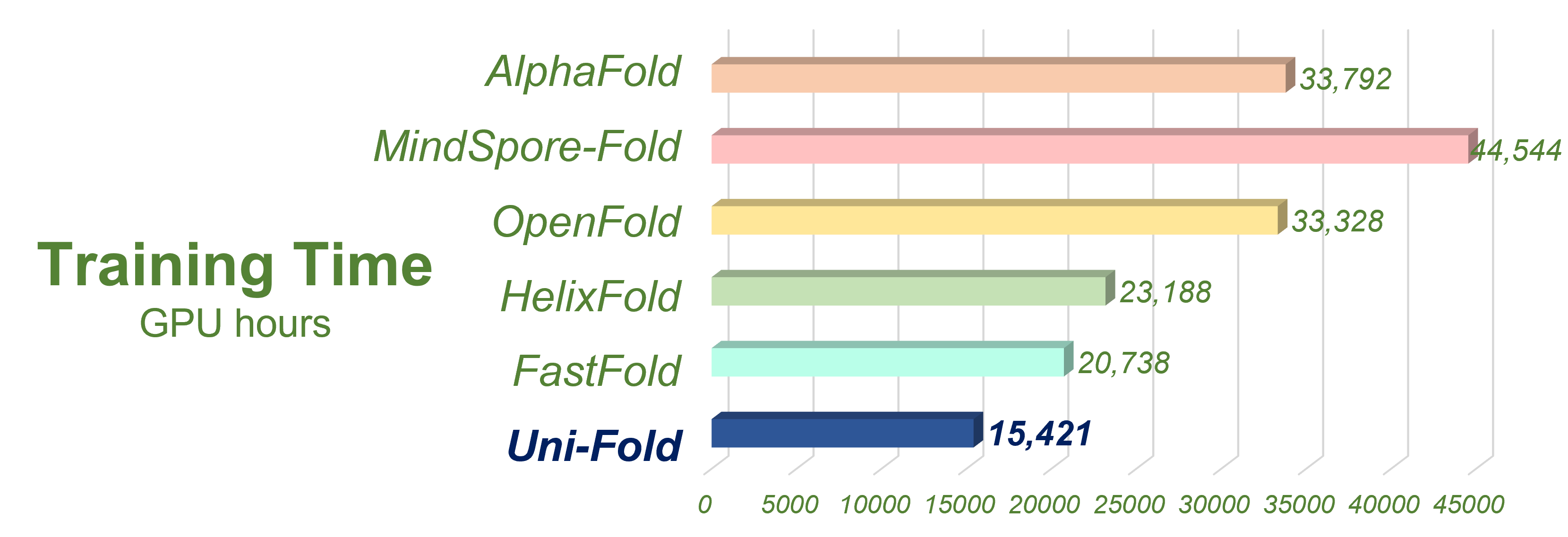

img/train_time.png

0 → 100755

119 KB

img/uf-symmetry-effect.gif

0 → 100755

2.27 MB

notebooks/unifold.ipynb

0 → 100755

require.txt

0 → 100644

result.monomer

0 → 100644

result.multimer

0 → 100644

run_monomer.sh

0 → 100755

run_multimer.sh

0 → 100755

run_uf_symmetry.sh

0 → 100755

run_unifold.sh

0 → 100755