description: "Master time series cross-validation with TimeGPT. Complete Python tutorial for model validation, rolling-window techniques, and prediction intervals with code examples."

icon: "check"

---

## What is Cross-validation?

Time series cross-validation is essential for validating machine learning models and ensuring accurate forecasts. Unlike traditional k-fold cross-validation, time series validation requires specialized rolling-window techniques that respect temporal order. This comprehensive tutorial shows you how to perform cross-validation in Python using TimeGPT, including prediction intervals, exogenous variables, and model performance evaluation.

One of the primary challenges in time series forecasting is the inherent uncertainty and variability over time, making it crucial to validate the accuracy and reliability of the models employed. Cross-validation, a robust model validation technique, is particularly adapted for this task, as it provides insights into the expected performance of a model on unseen data, ensuring the forecasts are reliable and resilient before being deployed in real-world scenarios.

TimeGPT provides the `cross_validation` method to streamline validation of [time series forecasting models](/forecasting/timegpt_quickstart). It lets you test forecasts against historical data, with support for [prediction intervals](/forecasting/probabilistic/prediction_intervals) and [exogenous variables](/forecasting/exogenous-variables/numeric_features). This tutorial walks through running cross-validation with the `NixtlaClient` class.

### Why Use Cross-Validation for Time Series?

Cross-validation provides several critical benefits for time series forecasting:

- **Detect overfitting**: Test model performance across multiple time periods

- **Validate generalization**: Ensure forecasts work on unseen data

- **Quantify uncertainty**: Generate prediction intervals for risk assessment

- **Compare models**: Evaluate different forecasting approaches systematically

- **Optimize hyperparameters**: Fine-tune model parameters with confidence

## How to Perform Cross-validation with TimeGPT

<Info>

**Quick Summary**: Learn time series cross-validation with TimeGPT in Python. This tutorial covers rolling-window validation, prediction intervals, model performance metrics, and advanced techniques with real-world examples using the Peyton Manning dataset.

</Info>

[Open in Colab](https://colab.research.google.com/github/Nixtla/nixtla/blob/main/nbs/docs/tutorials/08_cross_validation.ipynb)

### Step 1: Import Packages and Initialize NixtlaClient

First, import the required packages and initialize an instance of `NixtlaClient`.

```python
import pandas as pd
from nixtla import NixtlaClient
from IPython.display import display

# Initialize TimeGPT client for cross-validation
nixtla_client = NixtlaClient(
    api_key='my_api_key_provided_by_nixtla'
)
```

### Step 2: Load Example Data

Use the Peyton Manning dataset as an example. The dataset can be loaded directly from Nixtla's S3 bucket:

<Info>If you are using your own data, ensure your data is properly formatted: you must have a time column (e.g., `ds`), a target column (e.g., `y`), and, if necessary, an identifier column (e.g., `unique_id`) for multiple time series.</Info>

The `cross_validation` method of `NixtlaClient` performs systematic validation of time series forecasts. It takes a dataframe of time-ordered data and uses a rolling-window scheme to evaluate the model across different time periods, which shows how stable its accuracy is over time. The animation below shows how TimeGPT performs cross-validation.

<Frame caption="Rolling-window cross-validation conceptually splits your dataset into multiple training and validation sets over time.">

</Frame>

Key parameters of `cross_validation` include:

- `freq`: Frequency of your data (e.g., `'D'` for daily). If not specified, it will be inferred.

- `id_col`, `time_col`, `target_col`: Columns representing series ID, timestamps, and target values.

- `n_windows`: Number of separate validation windows.

- `step_size`: Step size between each validation window.

- `h`: Forecast horizon (e.g., the number of days ahead to predict).

In execution, `cross_validation` assesses the model's forecasting accuracy in each window, providing a robust view of the model's performance variability over time and potential overfitting. This detailed evaluation ensures the forecasts generated are not only accurate but also consistent across diverse temporal contexts.

<Info>

**Key Concepts**: Rolling-window cross-validation splits your dataset into multiple training and testing sets over time. Each window moves forward chronologically, training on historical data and validating on future periods. This approach mimics real-world forecasting scenarios where you predict forward in time.

</Info>
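The windowing idea above can be sketched in a few lines of plain Python. This is only an illustration, not TimeGPT's implementation: the helper name `rolling_windows` is hypothetical, and it assumes that windows are anchored at the end of the series and that `step_size` defaults to `h` (non-overlapping windows).

```python
def rolling_windows(n_obs, h, n_windows, step_size=None):
    """Return (validation_start, validation_end) index pairs, oldest window first.

    Indices are positions in one time-ordered series and validation_end is
    exclusive. Everything before validation_start is that window's training data.
    """
    # Assumption: without an explicit step, consecutive windows do not overlap.
    step = h if step_size is None else step_size
    needed = h + step * (n_windows - 1)
    if needed >= n_obs:
        raise ValueError("series is too short for the requested windows")

    windows = []
    for i in range(n_windows):
        # Windows are anchored at the end of the series and step backwards.
        offset = step * (n_windows - 1 - i)
        val_end = n_obs - offset
        windows.append((val_end - h, val_end))
    return windows


windows = rolling_windows(n_obs=100, h=7, n_windows=5)
for val_start, val_end in windows:
    print(f"train on [0, {val_start}) -> validate on [{val_start}, {val_end})")
```

Each window trains only on observations that precede its validation period, which is what keeps future information out of the training data.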

Use `cross_validation` on the Peyton Manning dataset:

```python
# Perform cross-validation with 5 windows and 7-day forecast horizon
timegpt_cv_df = nixtla_client.cross_validation(
    pm_df,
    h=7,  # Forecast 7 days ahead
    n_windows=5,  # Test across 5 different time periods
    freq='D'  # Daily frequency
)
timegpt_cv_df.head()
```

The logs below indicate successful cross-validation calls and data preprocessing.

```bash
INFO:nixtla.nixtla_client:Validating inputs...
INFO:nixtla.nixtla_client:Querying model metadata...
```

Visualize forecast performance for each cutoff period. Here's an example plotting the last 100 rows of actual data along with cross-validation forecasts for each cutoff.

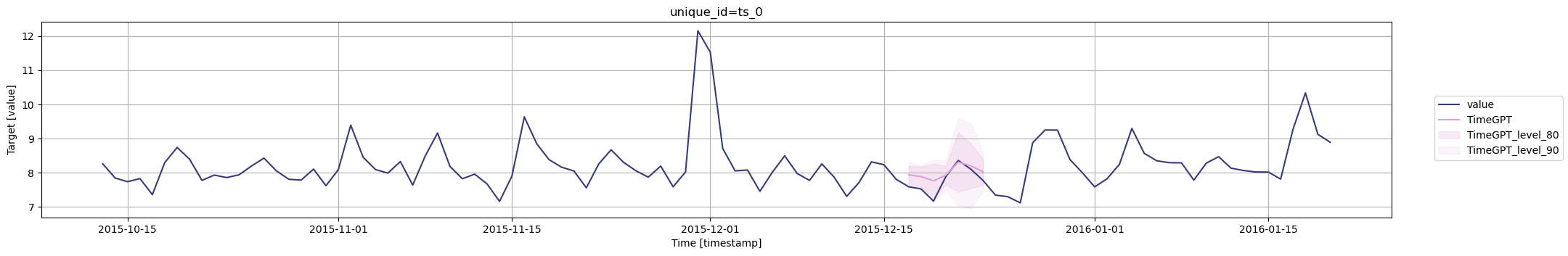

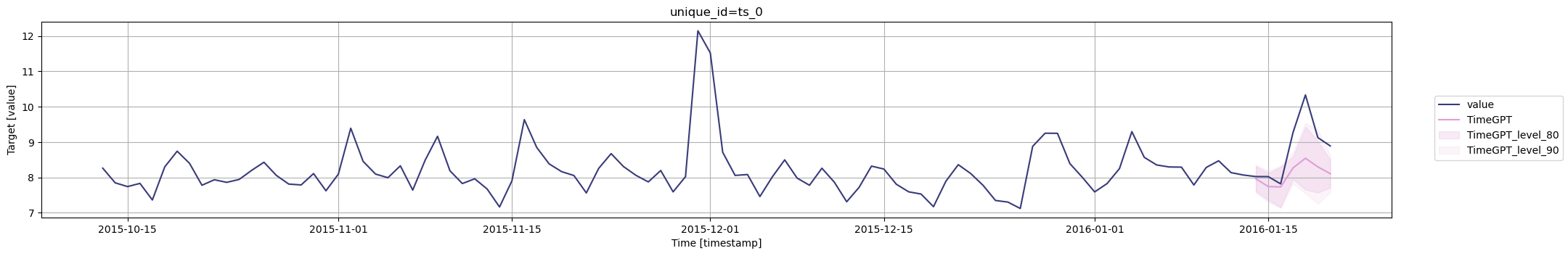

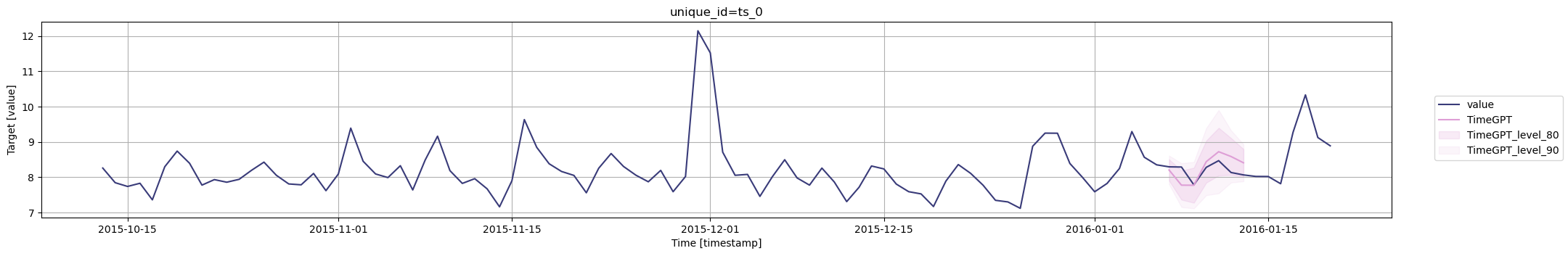

<Frame caption="An example visualization of predicted vs. actual values in the Peyton Manning dataset with prediction intervals.">

</Frame>

### Step 6: Enhance Forecasts with Exogenous Variables

#### Time Features

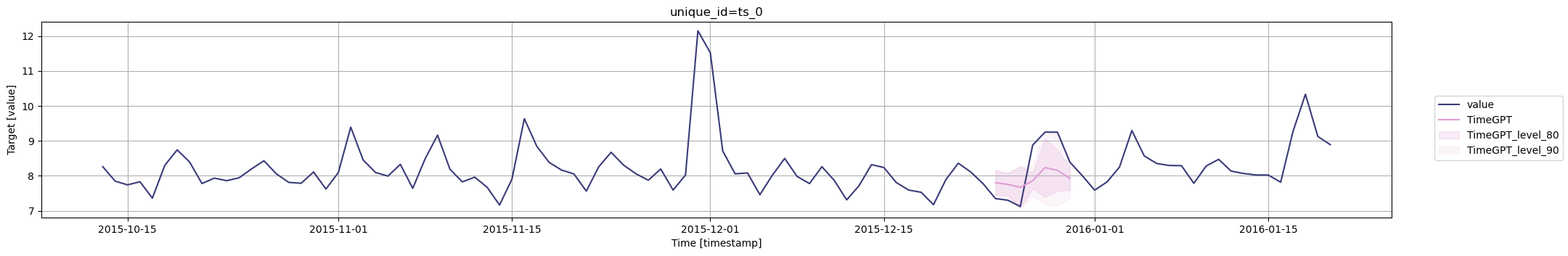

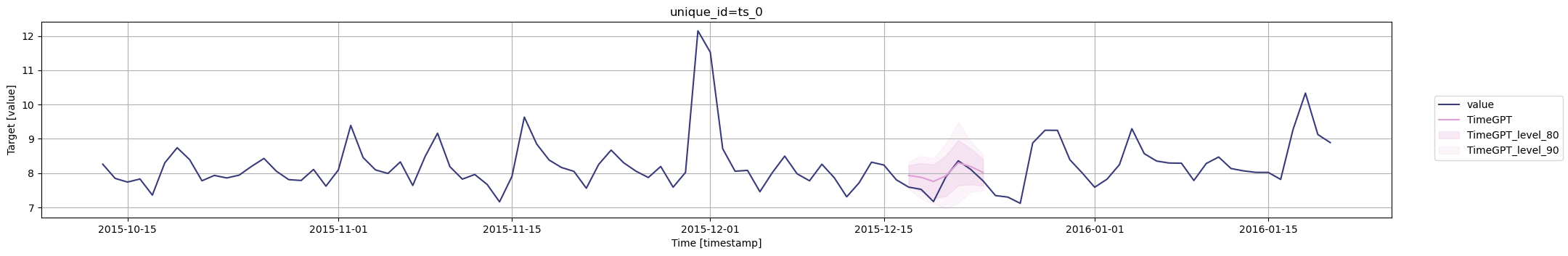

It is possible to include exogenous variables when performing cross-validation. Here we use the `date_features` parameter to create labels for each month. These features are then used by the model to make predictions during cross-validation.

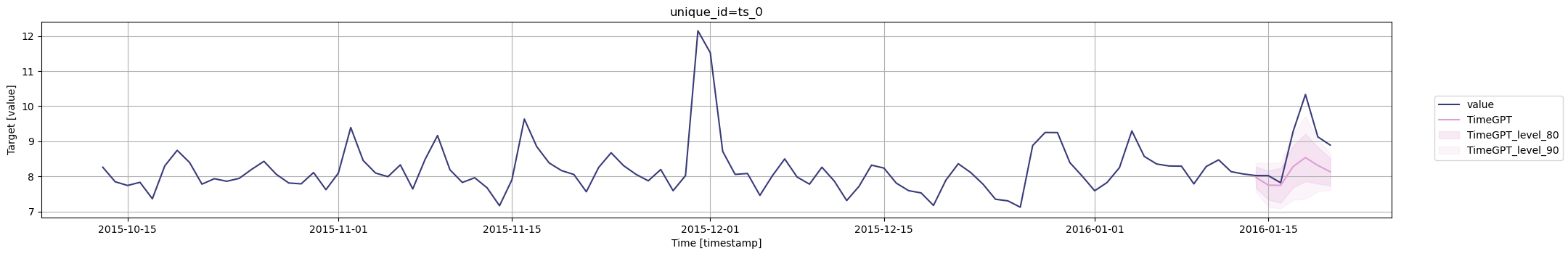

<Frame caption="An example visualization of predicted vs. actual values in the Peyton Manning dataset with time features.">

</Frame>

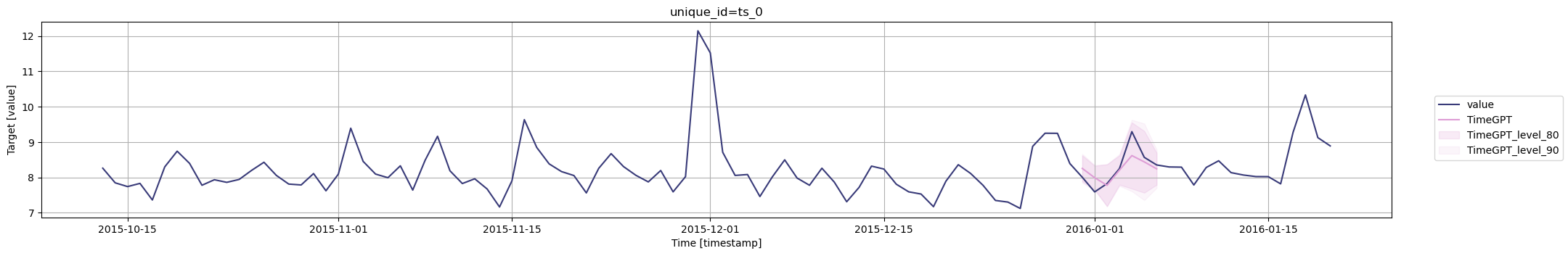

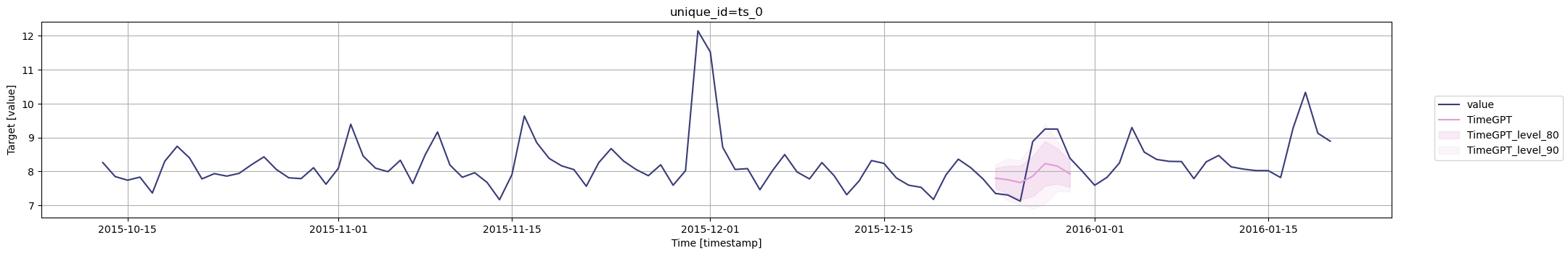

#### Dynamic Features

You can also pass dynamic exogenous variables to give TimeGPT more information about the data. Add the exogenous regressors as columns after the target column.

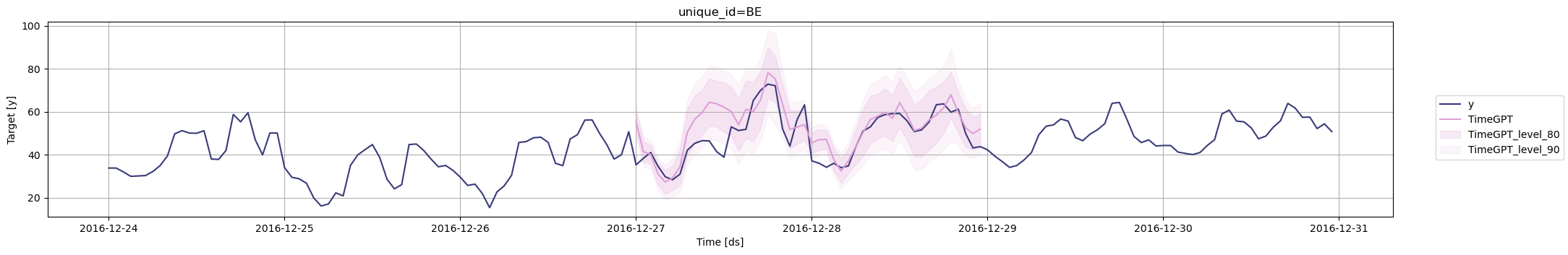

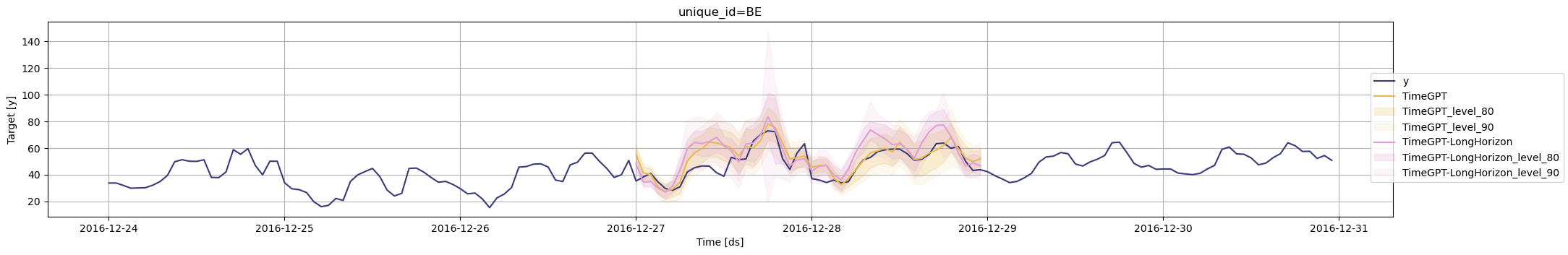

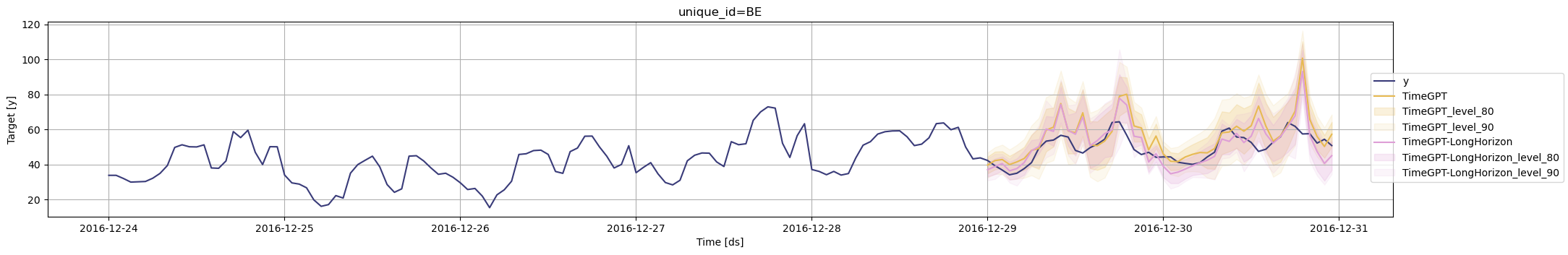

<Frame caption="An example visualization of predicted vs. actual values in the electricity dataset with dynamic exogenous variables.">

</Frame>

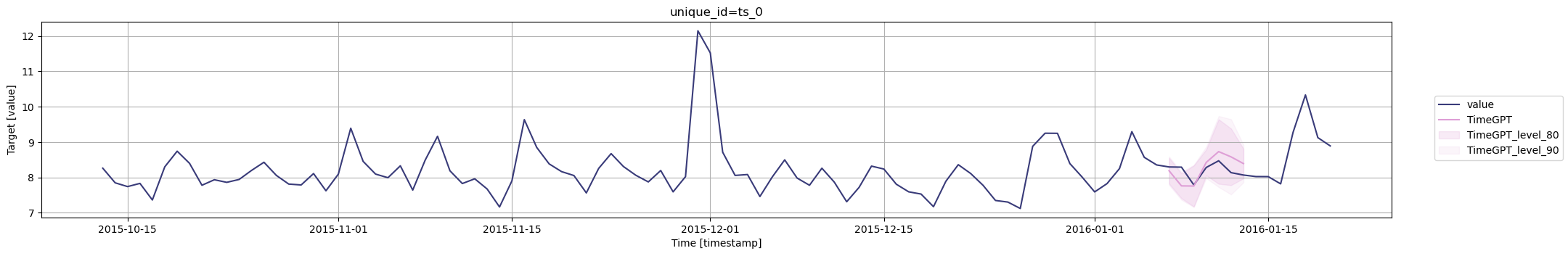

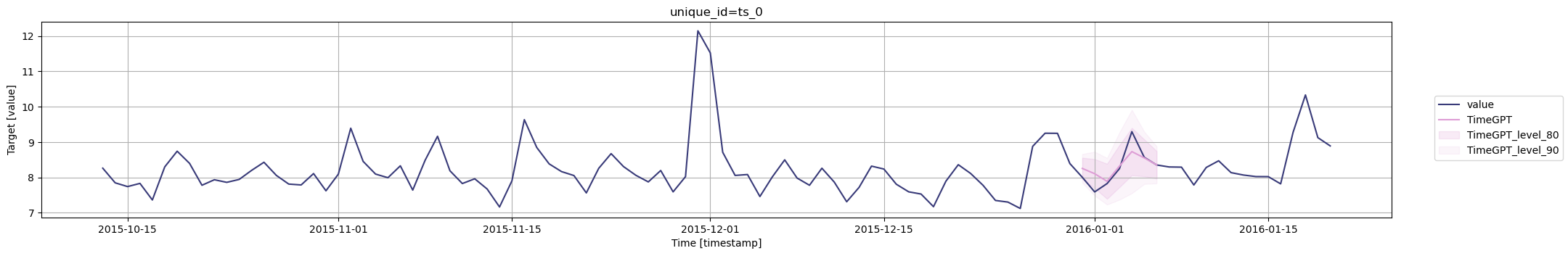

### Step 7: Long-Horizon Forecasting with TimeGPT

You can also run cross-validation with different TimeGPT models using the `model` argument. Here we use the base model and the model for long-horizon forecasting.

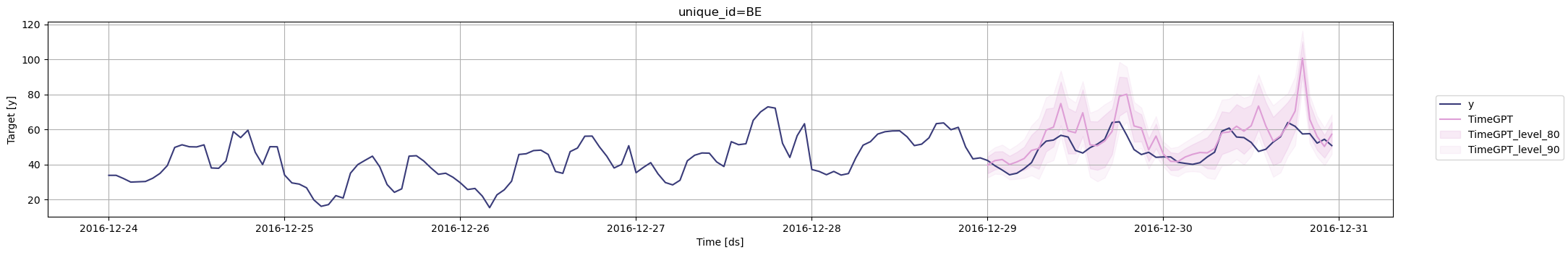

<Frame caption="An example visualization of predicted vs. actual values in the electricity dataset with dynamic exogenous variables and long horizon forecasting.">

</Frame>

## Frequently Asked Questions

**What is time series cross-validation?**

Time series cross-validation is a model validation technique that uses rolling windows to evaluate forecasting accuracy while preserving temporal order, ensuring reliable predictions on unseen data.

**How is time series cross-validation different from k-fold cross-validation?**

Unlike k-fold cross-validation, which assigns observations to folds without regard to time order (often after shuffling), time series cross-validation maintains temporal order using techniques like walk-forward validation and expanding windows to prevent data leakage.

**What are the key parameters for cross-validation in TimeGPT?**

Key parameters include `h` (forecast horizon), `n_windows` (number of validation windows), `step_size` (window increment), and `level` (prediction interval confidence levels).

**How do you evaluate cross-validation results?**

Evaluate results by comparing forecasted values against actual values across multiple time windows, analyzing prediction intervals, and calculating metrics like MAE, RMSE, and MAPE.

## Conclusion

You've mastered time series cross-validation with TimeGPT, including rolling-window validation, prediction intervals, exogenous variables, and long-horizon forecasting. These model validation techniques ensure your forecasts are accurate, reliable, and production-ready.

### Next Steps in Model Validation

- Explore [evaluation metrics](/forecasting/evaluation/evaluation_metrics) to quantify forecast accuracy

- Learn about [fine-tuning TimeGPT](/forecasting/fine-tuning/steps) for domain-specific data

- Apply cross-validation to [multiple time series](/data_requirements/multiple_series)

Ready to validate your forecasts at scale? [Start your TimeGPT trial](https://dashboard.nixtla.io/) and implement robust cross-validation today.

description: "Learn to select the right evaluation metrics to measure the performance of TimeGPT."

icon: "vial"

---

Selecting the right evaluation metric is crucial, as it guides the selection of the best settings for TimeGPT to ensure the model is making accurate forecasts.

## Overview of Common Evaluation Metrics

The following table summarizes the common evaluation metrics used in forecasting depending on the type of forecasts. It also indicates when to use and when to avoid a particular metric.

| Metric | Type of forecast | Properties | When to avoid |
|--------|------------------|------------|---------------|
| MAE | Point forecast | <ul><li>robust to outliers</li><li>easy to interpret</li><li>same units as the data</li></ul> | When averaging over series of different scales |
| MSE | Point forecast | <ul><li>penalizes large errors</li><li>not the same units as the data</li><li>sensitive to outliers</li></ul> | When there are unrepresentative outliers in the data |
| RMSE | Point forecast | <ul><li>penalizes large errors</li><li>same units as the data</li><li>sensitive to outliers</li></ul> | When there are unrepresentative outliers in the data |
| MAPE | Point forecast | <ul><li>expressed as a percentage</li><li>easy to interpret</li><li>favors under-forecasts</li></ul> | When data has zero values |
| sMAPE | Point forecast | <ul><li>robust to over- and under-forecasts</li><li>expressed as a percentage</li><li>easy to interpret</li></ul> | When data has zero values |
| MASE | Point forecast | <ul><li>like the MAE, but scaled by the naive forecast</li><li>inherently compares to a simple benchmark</li><li>requires technical knowledge to interpret</li></ul> | When there is only one series to evaluate |
| CRPS | Probabilistic forecast | <ul><li>generalized MAE for probabilistic forecasts</li><li>requires technical knowledge to interpret</li></ul> | When evaluating point forecasts |

In the following sections, we dive deeper into each metric. Note that all of these metrics can be used to evaluate the forecasts of TimeGPT using the _utilsforecast_ library. For more information, read our tutorial on [evaluating TimeGPT with utilsforecast](/forecasting/evaluation/evaluation_utilsforecast).

## Mean Absolute Error (MAE)

The mean absolute error simply averages the absolute distance between the forecasts and the actual values.

It is a good evaluation metric that works in the vast majority of forecasting tasks. It is robust to outliers, meaning that it does not magnify large errors, and it is expressed in the same units as the data, making it easy to interpret.

Be careful when averaging the MAE over multiple series of different scales: a series with smaller values pulls the average down, while a series with larger values pulls it up.
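To make the scale issue concrete, here is a minimal sketch in plain Python. The two series are made up for illustration, and `mean_absolute_error` is a local helper, not the `mae` function from utilsforecast.

```python
def mean_absolute_error(actual, forecast):
    """Average of |actual - forecast| over all points."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


# Hypothetical series on very different scales, each forecast 10% too high.
small_actual, small_forecast = [10.0, 20.0, 30.0], [11.0, 22.0, 33.0]
large_actual, large_forecast = [1000.0, 2000.0, 3000.0], [1100.0, 2200.0, 3300.0]

mae_small = mean_absolute_error(small_actual, small_forecast)  # 2.0
mae_large = mean_absolute_error(large_actual, large_forecast)  # 200.0
mae_average = (mae_small + mae_large) / 2                      # 101.0

print(mae_small, mae_large, mae_average)
```

Both forecasts are off by the same relative amount, yet the average is determined almost entirely by the larger series.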

## Mean Squared Error (MSE)

The mean squared error squares the forecast errors before averaging them, which heavily penalizes large errors while giving less weight to small ones.

As such, it is not robust to outliers since a single large error can dramatically inflate the MSE value. Additionally, the units are squared (e.g., dollars²), making it difficult to interpret in practical terms.

Avoid MSE when your data contains outliers or when you need an easily interpretable metric. It's best used in optimization contexts where you specifically want to penalize large errors more severely.

## Root Mean Squared Error (RMSE)

The root mean squared error is simply the square root of the MSE, bringing the metric back to the original units of the data while preserving MSE's property of penalizing large errors.

RMSE is more interpretable than MSE since it's expressed in the same units as your data.

You should avoid RMSE when outliers are present or when you want equal treatment of all errors.
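The effect of a single large error can be seen in a small sketch. The numbers below are invented for illustration, and the helpers are local definitions rather than the utilsforecast functions.

```python
import math


def mean_absolute_error(actual, forecast):
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


def mean_squared_error(actual, forecast):
    return sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual)


def root_mean_squared_error(actual, forecast):
    return math.sqrt(mean_squared_error(actual, forecast))


actual = [100.0, 100.0, 100.0, 100.0]
steady = [110.0, 90.0, 110.0, 90.0]   # four errors of size 10
spiky = [100.0, 100.0, 100.0, 140.0]  # one error of size 40

# Both forecasts have the same MAE (10.0) ...
mae_steady = mean_absolute_error(actual, steady)
mae_spiky = mean_absolute_error(actual, spiky)

# ... but the single large error doubles the RMSE (10.0 vs. 20.0)
rmse_steady = root_mean_squared_error(actual, steady)
rmse_spiky = root_mean_squared_error(actual, spiky)

print(mae_steady, mae_spiky, rmse_steady, rmse_spiky)
```

If that one large error comes from an unrepresentative outlier, RMSE and MSE will rank the second forecast as much worse even though its typical error is smaller.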

## Mean Absolute Percentage Error (MAPE)

The mean absolute percentage error expresses forecast errors as percentages of the actual values, making it scale-independent and easy to interpret.

MAPE is excellent for comparing forecast accuracy across different time series with varying scales. It's intuitive and easily understood in business contexts.

Avoid MAPE when your data contains zero or near-zero values (causes division by zero) or when you have intermittent demand patterns.

Note that it is also asymmetric, penalizing positive errors (over-forecasts) more heavily than negative errors (under-forecasts).
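A short sketch of the asymmetry, using made-up numbers. The helper below returns a fraction rather than a percentage and is a local definition, not the utilsforecast `mape`.

```python
def mean_absolute_percentage_error(actual, forecast):
    """Average of |actual - forecast| / |actual|, returned as a fraction."""
    return sum(abs(a - f) / abs(a) for a, f in zip(actual, forecast)) / len(actual)


# The absolute error is 50 in both cases; only the direction differs.
over_forecast = mean_absolute_percentage_error([100.0], [150.0])   # 0.5
under_forecast = mean_absolute_percentage_error([150.0], [100.0])  # about 0.33

print(over_forecast, under_forecast)

# A zero actual value makes the metric undefined.
try:
    mean_absolute_percentage_error([0.0], [5.0])
    zero_actual_fails = False
except ZeroDivisionError:
    zero_actual_fails = True
```

Because the error is divided by the actual value, the same miss costs more when the actual is the smaller of the two numbers, which is why MAPE tends to favor forecasts that run low.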

## Symmetric Mean Absolute Percentage Error (sMAPE)

The symmetric mean absolute percentage error attempts to address MAPE's asymmetry by using the average of actual and forecast values in the denominator, making it more balanced between over- and under-forecasts.

sMAPE is more stable than MAPE and less prone to extreme values. It's still scale-independent and relatively easy to interpret, though not as intuitive as MAPE.

Avoid sMAPE when dealing with zero values or when the sum of actual and forecast values approaches zero. While more symmetric than MAPE, it's still not perfectly symmetric and can behave unexpectedly in edge cases.
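The sketch below uses the definition described above, with the average of the absolute actual and forecast values in the denominator. Other references, and possibly the library you use, scale it differently, so treat the exact values as illustrative.

```python
def symmetric_mape(actual, forecast):
    """Average of |a - f| / ((|a| + |f|) / 2), returned as a fraction."""
    total = 0.0
    for a, f in zip(actual, forecast):
        total += abs(a - f) / ((abs(a) + abs(f)) / 2)
    return total / len(actual)


# Swapping actual and forecast no longer changes the score (0.4 both ways).
swap_a = symmetric_mape([100.0], [150.0])
swap_b = symmetric_mape([150.0], [100.0])

# For a fixed actual value, a miss of 50 below still costs more than 50 above.
under_by_50 = symmetric_mape([100.0], [50.0])   # about 0.67
over_by_50 = symmetric_mape([100.0], [150.0])   # 0.4

print(swap_a, swap_b, under_by_50, over_by_50)
```

The second comparison is the edge-case behavior mentioned above: sMAPE fixes the swap asymmetry of MAPE but introduces a milder one in the opposite direction.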

## Mean Absolute Scaled Error (MASE)

The mean absolute scaled error scales forecast errors by the average in-sample error of a seasonal naive forecast, providing a scale-independent measure that's robust and interpretable.

MASE is excellent for comparing forecasts across different time series and scales. A MASE value less than 1 indicates your forecast is better than the naive benchmark, while values greater than 1 indicate worse performance.

It's robust to outliers and handles zero values well.

While it is a good metric to compare across multiple series, it might not make sense for you to compare against naive forecasts, and it does require some technical knowledge to interpret correctly.
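A minimal sketch of the calculation, assuming the scaling term is the in-sample MAE of a seasonal naive forecast computed on the training data. The helper and the numbers are illustrative and are not the utilsforecast implementation.

```python
def mean_absolute_scaled_error(actual, forecast, train, seasonality=1):
    """Forecast MAE divided by the in-sample MAE of a seasonal naive forecast."""
    forecast_mae = sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)
    # The seasonal naive forecast repeats the value from one season earlier.
    naive_errors = [
        abs(train[t] - train[t - seasonality])
        for t in range(seasonality, len(train))
    ]
    scale = sum(naive_errors) / len(naive_errors)
    return forecast_mae / scale


train = [10.0, 12.0, 14.0, 16.0, 18.0]  # naive in-sample error is 2 at every step
actual = [20.0, 22.0]
forecast = [21.0, 21.0]                 # absolute errors of 1 and 1

mase_value = mean_absolute_scaled_error(actual, forecast, train, seasonality=1)
print(mase_value)  # 0.5: half the error of the naive benchmark
```

The need for `train` here is the reason training data has to be supplied when MASE is part of an evaluation.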

## Continuous Ranked Probability Score (CRPS)

The continuous ranked probability score measures the distance between the entire forecast distribution and the observed value, making it ideal for evaluating probabilistic forecasts.

CRPS is a proper scoring rule that reduces to MAE when dealing with deterministic forecasts, making it a natural extension for probabilistic forecasting. It's expressed in the same units as the original data and provides a comprehensive evaluation of forecast distributions, rewarding both accuracy and good uncertainty quantification.

CRPS is specifically designed for probabilistic forecasts, so avoid it when you only have point forecasts. It's also more computationally intensive to calculate than simpler metrics and may be less intuitive for stakeholders unfamiliar with probabilistic forecasting concepts.
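The link between CRPS and MAE can be illustrated with the sample-based identity CRPS = E|X - y| - 0.5 * E|X - X'|, where X and X' are independent draws from the forecast distribution. This is a generic estimator chosen for illustration and is not necessarily how `scaled_crps` computes its value.

```python
def crps_from_samples(samples, observed):
    """Estimate CRPS from forecast samples: E|X - y| - 0.5 * E|X - X'|."""
    n = len(samples)
    accuracy = sum(abs(x - observed) for x in samples) / n
    spread = sum(abs(x - other) for x in samples for other in samples) / (n * n)
    return accuracy - 0.5 * spread


observed = 10.0

# A point forecast is a one-sample distribution, so CRPS equals the absolute error.
crps_point = crps_from_samples([12.0], observed)  # 2.0

# A distribution centered on the same value, but wide enough to cover the outcome.
crps_distribution = crps_from_samples([10.0, 12.0, 14.0], observed)  # about 1.11

print(crps_point, crps_distribution)
```

The distribution scores better than the point forecast with the same center because part of its probability mass sits on the value that actually occurred.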

## Evaluating TimeGPT

To learn how to use any of the metrics outlined above to evaluate the forecasts of TimeGPT, read our tutorial on [evaluating TimeGPT with utilsforecast](/forecasting/evaluation/evaluation_utilsforecast).

description: "Learn how to evaluate TimeGPT model performance using tools in utilsforecast."

icon: "square-root-variable"

---

## Overview

After generating forecasts with TimeGPT, the next step is to evaluate how accurate those forecasts are. The evaluate function from the utilsforecast library provides a fast and flexible way to assess model performance using a wide range of metrics. This pipeline works seamlessly with TimeGPT and other forecasting models.

With the evaluation pipeline, you can easily select models and define metrics like MAE, MSE, or MAPE to benchmark forecasting performance.

## Step-by-Step Guide

### Step 1. Import Required Packages

Start by importing the necessary libraries and initializing the `NixtlaClient` with your API key.

```python
import pandas as pd
from nixtla import NixtlaClient
from functools import partial
from utilsforecast.evaluation import evaluate
from utilsforecast.losses import mae, mse, rmse, mape, smape, mase, scaled_crps

nixtla_client = NixtlaClient(
    api_key='my_api_key_provided_by_nixtla'
)
```

For this example, we use the Air Passenger dataset, which records monthly totals of international airline passengers. We'll load the dataset, format the timestamps, and split the data into a training set and a test set. The last 12 months are used for testing.

```python
# Attach the actual test values to the TimeGPT forecasts
fcst_timegpt = fcst_timegpt.merge(df_test, on=['timestamp', 'unique_id'])
```

### Step 4. Define Models and Metrics for Evaluation

Next, we define the models to evaluate and the metrics to use. For more information about supported metrics, refer to the [evaluation metrics tutorial](/forecasting/evaluation/evaluation_metrics).

```python
models = ['TimeGPT']

metrics = [
    mae,
    mse,
    rmse,
    mape,
    smape,
    partial(mase, seasonality=12),
    scaled_crps
]
```

### Step 5. Run the Evaluation

Finally, call the evaluate function with your merged forecast results. Include `train_df` for metrics that need the training data and `level` if using probabilistic metrics.

description: "Learn how to incorporate external categorical variables in your TimeGPT forecasts to improve accuracy."

icon: "input-text"

---

## What Are Categorical Variables?

Categorical variables are external factors that take on a limited range of discrete values, grouping observations by categories. For example, "Sporting" or "Cultural" events in a dataset describing product demand.

By capturing unique external conditions, categorical variables enhance the predictive power of your model and can reduce forecasting error. They are easy to incorporate by merging each time series data point with its corresponding categorical data.

This tutorial demonstrates how to incorporate categorical (discrete) variables into TimeGPT forecasts.

## How to Use Categorical Variables in TimeGPT

[Open in Colab](https://colab.research.google.com/github/Nixtla/nixtla/blob/main/nbs/docs/tutorials/03_categorical_variables.ipynb)

### Step 1: Import Packages and Initialize the Nixtla Client

Make sure you have the necessary libraries installed: pandas, nixtla, and datasetsforecast.

```python
import pandas as pd
import os

from nixtla import NixtlaClient
from datasetsforecast.m5 import M5

# Initialize the Nixtla Client
nixtla_client = NixtlaClient(
    api_key='my_api_key_provided_by_nixtla'
)
```

### Step 2: Load M5 Data

We use the **M5 dataset** — a collection of daily product sales demands across 10 US stores — to showcase how categorical variables can improve forecasts.

Start by loading the M5 dataset and converting the date columns to datetime objects.

```python

Y_df, X_df, _ = M5.load(directory=os.getcwd())

Y_df['ds'] = pd.to_datetime(Y_df['ds'])

X_df['ds'] = pd.to_datetime(X_df['ds'])

Y_df.head(10)

```

| unique_id | ds | y |
|-----------|----|---|
| FOODS_1_001_CA_1 | 2011-01-29 | 3.0 |
| FOODS_1_001_CA_1 | 2011-01-30 | 0.0 |
| FOODS_1_001_CA_1 | 2011-01-31 | 0.0 |
| FOODS_1_001_CA_1 | 2011-02-01 | 1.0 |
| FOODS_1_001_CA_1 | 2011-02-02 | 4.0 |
| FOODS_1_001_CA_1 | 2011-02-03 | 2.0 |
| FOODS_1_001_CA_1 | 2011-02-04 | 0.0 |
| FOODS_1_001_CA_1 | 2011-02-05 | 2.0 |
| FOODS_1_001_CA_1 | 2011-02-06 | 0.0 |
| FOODS_1_001_CA_1 | 2011-02-07 | 0.0 |

Extract the categorical columns from the X_df dataframe.

```python

X_df = X_df[['unique_id', 'ds', 'event_type_1']]

X_df.head(10)

```

| unique_id | ds | event_type_1 |
|-----------|----|--------------|
| FOODS_1_001_CA_1 | 2011-01-29 | nan |
| FOODS_1_001_CA_1 | 2011-01-30 | nan |
| FOODS_1_001_CA_1 | 2011-01-31 | nan |
| FOODS_1_001_CA_1 | 2011-02-01 | nan |
| FOODS_1_001_CA_1 | 2011-02-02 | nan |
| FOODS_1_001_CA_1 | 2011-02-03 | nan |
| FOODS_1_001_CA_1 | 2011-02-04 | nan |
| FOODS_1_001_CA_1 | 2011-02-05 | nan |
| FOODS_1_001_CA_1 | 2011-02-06 | Sporting |
| FOODS_1_001_CA_1 | 2011-02-07 | nan |

Notice that there is a Sporting event on February 6, 2011, listed under `event_type_1`.

### Step 3: Prepare Data for Forecasting

We'll select a specific product to demonstrate how to incorporate categorical features into TimeGPT forecasts.

#### Select a High-Selling Product and Merge Data

Start by selecting a high-selling product and merging the data.

Encode categorical variables using one-hot encoding. One-hot encoding transforms each category into a separate column containing binary indicators (0 or 1).
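As a rough illustration of what one-hot encoding produces, here is a plain-Python sketch. The helper `one_hot` is hypothetical, missing events are represented as `None`, and in practice a dataframe tool such as pandas `get_dummies` is the more common way to do this.

```python
def one_hot(values, prefix):
    """Turn a list of category labels into rows of 0/1 indicator columns."""
    # Missing values (None) get no column of their own and encode as all zeros.
    categories = sorted({v for v in values if v is not None})
    return [
        {f"{prefix}_{category}": int(value == category) for category in categories}
        for value in values
    ]


# Illustrative values in the style of the `event_type_1` column.
events = [None, "Sporting", None, "Cultural"]
encoded = one_hot(events, prefix="event_type_1")

for event, row in zip(events, encoded):
    print(event, row)
```

Each category becomes its own numeric column, so days without an event are simply rows of zeros.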

<Frame caption="Forecast with categorical variables">

</Frame>

TimeGPT already provides a reasonable forecast, but it seems to somewhat underforecast the peak on the 6th of February 2016 - the day before the Super Bowl.

<Frame caption="Forecast with categorical variables">

</Frame>

### Step 5: Evaluate Forecast Accuracy

Finally, we calculate the **Mean Absolute Error (MAE)** for the forecasts with and without categorical variables.

Including categorical variables noticeably improves forecast accuracy, reducing MAE by about 20%.

## Conclusion

Categorical variables are powerful additions to TimeGPT forecasts, helping capture valuable external factors. By properly encoding these variables and merging them with your time series, you can significantly enhance predictive performance.

Continue exploring more advanced techniques or different datasets to further improve your TimeGPT forecasting models.

description: "Learn how to incorporate date/time features into your forecasts to improve performance."

icon: "clock"

---

## Why incorporate Date/Time Features in your Forecasts

Many time series display patterns that repeat based on the calendar, like demand increasing on weekends, sales peaking at the end of the month, or traffic varying by hour of the day. Recognizing and capturing these time-based patterns can be a powerful way to improve forecasting accuracy.

While you can forecast a time series based solely on its historical values, adding date/time related features, such as the day of the week, month, quarter, or hour, can often enhance the model's performance. These features can be especially useful when your dataset lacks exogenous variables, but they can also complement external regressors when available.

In this tutorial, we'll walk through how to incorporate these date/time features into TimeGPT to boost the accuracy of your forecasts.
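To show what such features look like, the sketch below derives a few of them from hourly timestamps using only the standard library. The helper `date_features` and the chosen feature names are illustrative and are not the features TimeGPT generates internally.

```python
from datetime import datetime, timedelta


def date_features(timestamp):
    """Derive simple calendar features from a single timestamp."""
    return {
        "hour": timestamp.hour,
        "dayofweek": timestamp.weekday(),  # Monday is 0, Sunday is 6
        "month": timestamp.month,
        "is_weekend": int(timestamp.weekday() >= 5),
    }


# Four hourly timestamps that cross from Friday evening into Saturday.
start = datetime(2024, 1, 5, 22)
timestamps = [start + timedelta(hours=offset) for offset in range(4)]
features = [date_features(ts) for ts in timestamps]

for ts, row in zip(timestamps, features):
    print(ts.isoformat(), row)
```

Because these values depend only on the timestamp, they are known for every future step as well, which is what makes them usable as features at forecast time.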

## How to incorporate Date/Time Features in your Forecasts

[Open in Colab](https://colab.research.google.com/github/Nixtla/nixtla/blob/main/nbs/docs/tutorials/date_features.ipynb)

### Step 1: Import Packages

Import the necessary libraries and initialize the Nixtla client.

```python

import numpy as np

import pandas as pd

from nixtla import NixtlaClient

# For forecast evaluation

from utilsforecast.evaluation import evaluate

from utilsforecast.losses import mae, rmse

```

You can instantiate the `NixtlaClient` class providing your authentication API key.

```python
nixtla_client = NixtlaClient(
    # defaults to os.environ.get("NIXTLA_API_KEY")
    api_key='my_api_key_provided_by_nixtla'
)
```

### Step 2: Load Data

In this notebook, we use hourly electricity prices as our example dataset, which consists of 5 time series, each with approximately 1700 data points. For demonstration purposes, we focus on the German electricity price series. The time series is split, with the last 240 steps (10 days) set aside as the test set.

For simplicity, we will also demonstrate this tutorial without the use of any additional exogenous variables, but you could extend this same technique for

description: "Guide to using holiday calendar variables and special dates to improve forecast accuracy in time series."

icon: "calendar"

---

## What Are Holiday Variables and Special Dates?

Special dates, such as holidays, promotions, or significant events, often cause notable deviations from normal patterns in your time series. By incorporating these special dates into your forecasting model, you can better capture these expected variations and improve prediction accuracy.

## How to Add Holiday Variables and Special Dates

[Open in Colab](https://colab.research.google.com/github/Nixtla/nixtla/blob/main/nbs/docs/tutorials/02_holidays.ipynb)

### Step 1: Import Packages

Import the required libraries and initialize the Nixtla client.

```python

import pandas as pd

from nixtla import NixtlaClient

```

```python

nixtla_client = NixtlaClient(
    api_key='my_api_key_provided_by_nixtla'
)

```

### Step 2: Load Data

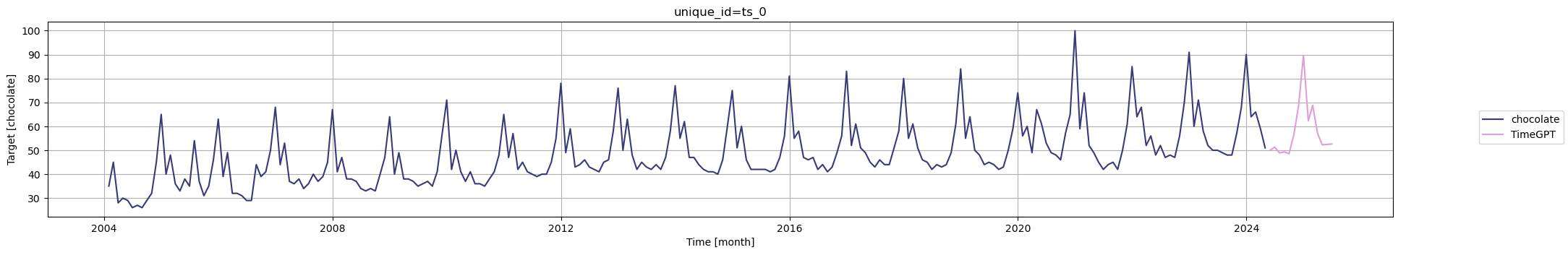

We use a Google Trends dataset on "chocolate" with monthly frequency:

When adding exogenous variables (like holidays) to time series forecasting, we need a future DataFrame because:

- Historical data already exists: Our training data contains past values of both the target variable and exogenous features

- Future exogenous features are known: Unlike the target variable, we can determine future values of exogenous features (like holidays) in advance

For example, we know that Christmas will occur on December 25th next year, so we can include this information in our future DataFrame to help the model understand seasonal patterns during the forecast period.

Start by creating a future DataFrame with 14 months of dates starting from May 2024.
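As a sketch of the dates such a future DataFrame would contain, the snippet below generates 14 monthly dates with the standard library. It assumes month-end dates, and the helper name `month_ends` is hypothetical. With pandas you would typically build the same range with `pd.date_range` and place it in the `month` column.

```python
import calendar
from datetime import date


def month_ends(start_year, start_month, periods):
    """Return the last calendar day of `periods` consecutive months."""
    dates = []
    year, month = start_year, start_month
    for _ in range(periods):
        last_day = calendar.monthrange(year, month)[1]
        dates.append(date(year, month, last_day))
        month += 1
        if month > 12:  # roll over into the next year
            year, month = year + 1, 1
    return dates


future_months = month_ends(2024, 5, periods=14)
print(future_months[0], future_months[-1], len(future_months))
```

The length of this range has to match the forecast horizon, since every forecasted step needs a row of future exogenous values.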

### Step 4: Forecast with Holidays and Special Dates

TimeGPT automatically generates standard date-based features (like month, day of week, etc.) during forecasting. For more specialized temporal patterns, you can manually add holiday indicators to both your historical and future datasets.

#### Create a Function to Add Date Features

To make it easier to add date features to a DataFrame, we'll create the `add_date_features_to_DataFrame` function that takes:

- A pandas DataFrame

- A date extractor function, which can be `CountryHolidays` or `SpecialDates`

| | month | US_New Year's Day | US_Memorial Day | US_Juneteenth National Independence Day | US_Independence Day | US_Labor Day | US_Veterans Day | US_Thanksgiving Day | US_Christmas Day | US_Martin Luther King Jr. Day | US_Washington's Birthday | US_Columbus Day |

| | month | chocolate | US_New Year's Day | US_New Year's Day (observed) | US_Memorial Day | US_Independence Day | US_Independence Day (observed) | US_Labor Day | US_Veterans Day | US_Thanksgiving Day | US_Christmas Day | US_Christmas Day (observed) | US_Martin Luther King Jr. Day | US_Washington's Birthday | US_Columbus Day | US_Veterans Day (observed) | US_Juneteenth National Independence Day | US_Juneteenth National Independence Day (observed) |

Now, your historical DataFrame also contains holiday flags for each month.

Finally, forecast with the holiday features.

```python
fcst_df_holidays = nixtla_client.forecast(
    df=df_with_holidays,
    h=14,
    freq="ME",
    time_col="month",
    target_col="chocolate",
    X_df=future_df_holidays,
    model="timegpt-1-long-horizon",
    hist_exog_list=[
        "US_New Year's Day (observed)",
        "US_Independence Day (observed)",
        "US_Christmas Day (observed)",
        "US_Veterans Day (observed)",
        "US_Juneteenth National Independence Day (observed)",
    ],
    feature_contributions=True,  # for shapley values
)

fcst_df_holidays.head()
```

Plot the forecast with holiday effects.

```python
nixtla_client.plot(
    df_with_holidays,
    fcst_df_holidays,
    time_col='month',
    target_col='chocolate',
)
```

We can then plot the weights of each holiday to see which are more important in forecasting the interest in chocolate. We will use the [SHAP library](https://shap.readthedocs.io/en/latest/) to plot the weights.

> For more details on how to use the shap library, see our [tutorial on model interpretability](/forecasting/exogenous-variables/interpretability_with_shap).

The SHAP values reveal that Christmas, Independence Day, and Labor Day have the strongest influence on chocolate interest forecasting. These holidays show the highest feature importance weights, indicating they significantly impact consumer behavior patterns. This aligns with expectations since these are major US holidays associated with gift-giving, celebrations, and seasonal consumption patterns that drive chocolate sales.

#### Add Special Dates

Beyond country holidays, you can create custom special dates with `SpecialDates`. These can represent unique one-time events or recurring patterns on specific dates of your choice.

Assume we already have a future DataFrame with monthly dates. We'll create Valentine's Day and Halloween as custom special dates and add them to the future DataFrame.

```python
from nixtla.date_features import SpecialDates

# Generate special dates programmatically for the full data range (2004-2025)
valentine_dates = [f"{year}-02-14" for year in range(2004, 2026)]
halloween_dates = [f"{year}-10-31" for year in range(2004, 2026)]

# Define custom special dates - chocolate-related seasonal events
```

Examine the feature importance of the special dates.

```python
plot_shap_values(ds_column="month", title="SHAP values for special dates")
```

The SHAP values reveal that Valentine's Day has the strongest positive impact on chocolate sales forecasts. This aligns with consumer behavior patterns, as chocolate is a popular gift choice during Valentine's Day celebrations.

<Check>

Congratulations! You have successfully integrated holiday and special date features into your time series forecasts. Use these steps as a starting point for further experimentation with advanced date features.

</Check>

description: "Learn how to interpret model predictions using SHAP values to understand the impact of exogenous variables."

icon: "square-root-variable"

---

## What Are SHAP Values?

SHAP (SHapley Additive exPlanation) values use game theory concepts to explain how each feature influences machine learning forecasts. They're particularly useful when working with exogenous (external) variables, letting you understand contributions both at individual prediction steps and across entire forecast horizons.
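The game-theory idea behind Shapley values can be shown with a toy example: each feature's value is its marginal contribution to the prediction, averaged over every order in which features could be added. The forecast function and its numbers below are invented for illustration. This exact enumeration is only practical for a handful of features and is not how TimeGPT computes its feature contributions.

```python
from itertools import permutations


def shapley_values(features, value):
    """Exact Shapley values: average marginal contribution over all orderings."""
    totals = {feature: 0.0 for feature in features}
    orderings = list(permutations(features))
    for order in orderings:
        coalition = frozenset()
        for feature in order:
            with_feature = coalition | {feature}
            totals[feature] += value(with_feature) - value(coalition)
            coalition = with_feature
    return {feature: total / len(orderings) for feature, total in totals.items()}


def toy_forecast(coalition):
    """Hypothetical forecast given which exogenous features are available."""
    forecast = 100.0                           # baseline without any features
    if "load" in coalition:
        forecast += 20.0
    if "generation" in coalition:
        forecast -= 5.0
    if {"load", "generation"} <= coalition:
        forecast += 4.0                        # interaction between the two
    return forecast


contributions = shapley_values(["load", "generation"], toy_forecast)
print(contributions)  # {'load': 22.0, 'generation': -3.0}
```

The contributions add up to the difference between the full forecast (119) and the baseline (100), which is the additive property that makes SHAP values easy to read in plots.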

SHAP values can be seamlessly combined with visualization methods from the [SHAP](https://shap.readthedocs.io/en/latest/) Python package for powerful plots and insights. Before proceeding, make sure you understand forecasting with exogenous features. For reference, see our [tutorial on exogenous variables](/forecasting/exogenous-variables/numeric_features).

## How to Use SHAP Values for TimeGPT

[Open in Colab](https://colab.research.google.com/github/Nixtla/nixtla/blob/main/nbs/docs/tutorials/21_shap_values.ipynb)

## Install SHAP

Install the SHAP library.

```bash

pip install shap

```

For more details, visit the [official SHAP documentation](https://shap.readthedocs.io/en/latest/).

### Step 1: Import Packages

Import the necessary libraries and initialize the Nixtla client.

```python
import pandas as pd
from nixtla import NixtlaClient

nixtla_client = NixtlaClient(
    api_key='my_api_key_provided_by_nixtla'  # Or use os.environ.get("NIXTLA_API_KEY")
)
```

### Step 2: Load Data

We'll use exogenous variables (covariates) to enhance electricity market forecasting accuracy. The widely known EPF dataset is available at [this link](https://zenodo.org/records/4624805). It contains hourly prices and relevant exogenous factors for five different electricity markets.

For this tutorial, we'll focus on the Belgian electricity market (BE). The data includes:

- Hourly prices (y)

- Forecasts for load (Exogenous1) and generation (Exogenous2)

- Day-of-week indicators (one-hot encoded)

If your data relies on factors such as weather, holiday calendars, marketing, or other elements, ensure they're similarly structured.

To make forecasts with exogenous variables, you must have future data for these variables available at the time of prediction.

Before generating forecasts, ensure you have (or can generate) future exogenous values. Below, we load future exogenous features to obtain 24-step-ahead predictions:
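As a minimal sketch of what future exogenous values can look like, the following builds day-of-week indicators for the next 24 hours using only the standard library. The last observed timestamp and the column names (`day_0` to `day_6`) are assumptions for illustration, not the EPF dataset's actual layout. Calendar features can be generated this way; forecasted load and generation would have to come from an external source.

```python
from datetime import datetime, timedelta

def future_day_of_week_features(last_timestamp, horizon):
    """Build one row per future hour with one-hot day-of-week indicators."""
    rows = []
    for step in range(1, horizon + 1):
        ts = last_timestamp + timedelta(hours=step)
        row = {"ds": ts}
        for day in range(7):
            row[f"day_{day}"] = 1 if ts.weekday() == day else 0  # Monday is 0
        rows.append(row)
    return rows

last_observed = datetime(2016, 12, 30, 23)  # hypothetical end of the history
future_exog = future_day_of_week_features(last_observed, horizon=24)
print(future_exog[0])
```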

description: "Learn how to incorporate external numeric variables to improve your forecasting accuracy."

icon: "binary"

---

## What Are Exogenous Variables?

Exogenous variables or external factors are crucial in time series forecasting as they provide additional information that might influence the prediction. These variables could include holiday markers, marketing spending, weather data, or any other external data that correlate with the time series data you are forecasting.

For example, if you're forecasting ice cream sales, temperature data could serve as a useful exogenous variable. On hotter days, ice cream sales may increase.

## How to Use Exogenous Variables

[Open in Colab](https://colab.research.google.com/github/Nixtla/nixtla/blob/main/nbs/docs/tutorials/01_exogenous_variables_reworked.ipynb)

To incorporate exogenous variables in TimeGPT, you'll need to pair each point in your time series data with the corresponding external data.
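The pairing step can be sketched in plain Python, reusing the ice cream example: each target observation is joined to the exogenous value with the same timestamp, and a missing match raises an error instead of silently producing a gap. The data and the helper name `pair_with_exogenous` are hypothetical.

```python
from datetime import date

sales = {date(2024, 7, 1): 120, date(2024, 7, 2): 135, date(2024, 7, 3): 150}
temperature = {date(2024, 7, 1): 24.5, date(2024, 7, 2): 27.0, date(2024, 7, 3): 30.5}

def pair_with_exogenous(target, exogenous, name):
    """Join target and exogenous values on their timestamps, failing loudly on gaps."""
    missing = sorted(set(target) - set(exogenous))
    if missing:
        raise ValueError(f"No {name} value for: {missing}")
    return [{"ds": ds, "y": target[ds], name: exogenous[ds]} for ds in sorted(target)]

rows = pair_with_exogenous(sales, temperature, "temperature")
print(rows[0])
```

In practice the same join is usually a pandas merge on the series identifier and timestamp columns.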

### Step 1: Import Packages

Import the required libraries and initialize the Nixtla client.

```python
import pandas as pd
from nixtla import NixtlaClient

nixtla_client = NixtlaClient(
    # defaults to os.environ.get("NIXTLA_API_KEY")
    api_key="my_api_key_provided_by_nixtla"
)
```

### Step 2: Load Dataset

In this tutorial, we'll predict day-ahead electricity prices. The dataset contains:

- Hourly electricity prices (`y`) from various markets (identified by `unique_id`)

title: "Fine-tuning with a Specific Loss Function"

description: "Learn how to fine-tune a model using specific loss functions, configure the Nixtla client, and evaluate performance improvements."

icon: "gear"

---

## Fine-tuning with a Specific Loss Function

When you fine-tune, the model trains on your data to tailor its predictions to it. You can specify the loss function used during fine-tuning with the `finetune_loss` argument. The available loss functions are:

* `"default"`: A proprietary function robust to outliers.

* `"mae"`: Mean Absolute Error

* `"mse"`: Mean Squared Error

* `"rmse"`: Root Mean Squared Error

* `"mape"`: Mean Absolute Percentage Error

* `"smape"`: Symmetric Mean Absolute Percentage Error
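For reference, the named metrics can be written out in a few lines of plain Python. These are stand-ins for illustration, not the `utilsforecast.losses` functions, and the `"default"` loss is proprietary so it is not reproduced. Note that sMAPE has several scalings in the literature; this sketch assumes the variant bounded between 0 and 2.

```python
from math import sqrt

def mae(y, y_hat):
    return sum(abs(a - f) for a, f in zip(y, y_hat)) / len(y)

def mse(y, y_hat):
    return sum((a - f) ** 2 for a, f in zip(y, y_hat)) / len(y)

def rmse(y, y_hat):
    return sqrt(mse(y, y_hat))

def mape(y, y_hat):
    # Undefined when an actual value is zero.
    return sum(abs(a - f) / abs(a) for a, f in zip(y, y_hat)) / len(y)

def smape(y, y_hat):
    # One common variant, bounded in [0, 2]; other scalings exist.
    return sum(2 * abs(a - f) / (abs(a) + abs(f)) for a, f in zip(y, y_hat)) / len(y)

actual = [100.0, 200.0, 400.0]      # hypothetical observations
forecast = [110.0, 180.0, 400.0]    # hypothetical forecasts
for metric in (mae, mse, rmse, mape, smape):
    print(metric.__name__, metric(actual, forecast))
```

Squared-error losses penalize the 20-unit miss much more than the 10-unit miss, while the percentage losses treat both as the same 10% error.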

## How to Fine-tune with a Specific Loss Function

[Open in Colab](https://colab.research.google.com/github/Nixtla/nixtla/blob/main/nbs/docs/tutorials/07_loss_function_finetuning.ipynb)

### Step 1: Import Packages and Initialize Client

First, we import the required packages and initialize the Nixtla client.

```python
import pandas as pd
from nixtla import NixtlaClient
from utilsforecast.losses import mae, mse, rmse, mape, smape
```

```python
nixtla_client = NixtlaClient(
    # defaults to os.environ.get("NIXTLA_API_KEY")
    api_key='my_api_key_provided_by_nixtla'
)
```

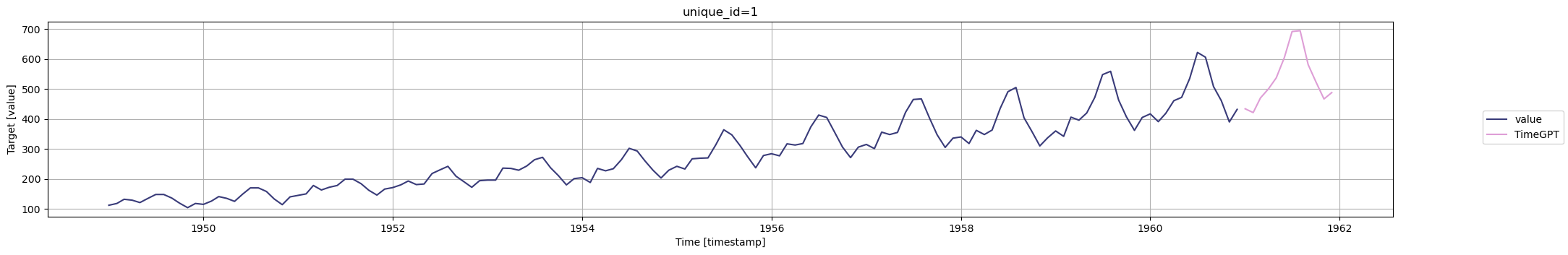

### Step 2: Load Data

Load your data and prepare it for fine-tuning. Here, we will demonstrate using

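```python
timegpt_fcst_finetune_mae_df = nixtla_client.forecast(
    df=df,
    h=12,  # forecast horizon (illustrative value)
    finetune_steps=10,  # number of fine-tuning iterations (illustrative value)
    finetune_loss='mae',  # Select desired loss function
    time_col='timestamp',
    target_col='value',
)
```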

After training completes, you can visualize the forecast:

```python
nixtla_client.plot(
    df,
    timegpt_fcst_finetune_mae_df,
    time_col='timestamp',
    target_col='value',
)
```

<Frame>

</Frame>

## Explanation of Loss Functions

The right error metric for evaluating your forecasting model's performance depends on your data.

Below is a non-exhaustive guide on which metric to use depending on your use case.

[Open in Colab](https://colab.research.google.com/github/Nixtla/nixtla/blob/main/nbs/docs/tutorials/23_finetune_depth_finetuning.ipynb)

description: "Learn how to save, fine-tune, list, and delete TimeGPT models to optimize forecasting."

icon: "gear"

---

## Re-using Fine-tuned Models

Reusing previously fine-tuned TimeGPT models can help reduce computation time and costs while maintaining or improving forecast accuracy. This guide walks you through the steps to save, fine-tune, list, and delete your TimeGPT models effectively.

## How to Re-use Fine-tuned Models

[Open in Colab](https://colab.research.google.com/github/Nixtla/nixtla/blob/main/nbs/docs/tutorials/061_reusing_finetuned_models.ipynb)

### Step 1: Import Packages

First, we import the required packages and initialize the Nixtla client.

```python
import pandas as pd
from nixtla import NixtlaClient
from utilsforecast.losses import rmse
from utilsforecast.evaluation import evaluate
```

```python
nixtla_client = NixtlaClient(
    # defaults to os.environ["NIXTLA_API_KEY"]
    api_key='my_api_key_provided_by_nixtla'
)
```

### Step 2: Load Data

Load the forecasting dataset and prepare the train/validation split.
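A train/validation split for time series must respect temporal order: the validation set is the most recent stretch of each series, never a random sample. The following is a minimal sketch under assumed column names (`unique_id`, `ds`, `y`) and synthetic data; the helper `temporal_split` is hypothetical.

```python
def temporal_split(rows, h):
    """Hold out the last `h` observations of each series for validation."""
    by_series = {}
    for row in sorted(rows, key=lambda r: (r["unique_id"], r["ds"])):
        by_series.setdefault(row["unique_id"], []).append(row)
    train, valid = [], []
    for series in by_series.values():
        if len(series) <= h:
            raise ValueError("Each series needs more than h observations")
        train.extend(series[:-h])
        valid.extend(series[-h:])
    return train, valid

# Synthetic data: two series with ten observations each.
rows = [{"unique_id": uid, "ds": t, "y": float(t)} for uid in ("A", "B") for t in range(10)]
train, valid = temporal_split(rows, h=3)
print(len(train), len(valid))
```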

description: "Adapt TimeGPT to your specific datasets for more accurate forecasts"

icon: "sliders"

---

Fine-tuning is a powerful process for utilizing TimeGPT more effectively. Foundation models such as TimeGPT are pre-trained on vast amounts of data, capturing wide-ranging features and patterns. These models can then be specialized for specific contexts or domains. With fine-tuning, the model's parameters are refined to forecast a new task, allowing it to tailor its vast pre-existing knowledge towards the requirements of the new data. Fine-tuning thus serves as a crucial bridge, linking TimeGPT's broad capabilities to the specifics of your task.

Concretely, fine-tuning consists of performing a certain number of training iterations on your input data to minimize the forecasting error. The forecasts are then produced with the updated model. To control the number of iterations, use the `finetune_steps` argument of the `forecast` method.
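The idea of "a fixed number of training iterations that reduce forecasting error" can be illustrated with a toy one-parameter model updated by gradient descent. This is an analogy only: the model, the learning rate, and the starting weight are hypothetical, and TimeGPT's actual training procedure is not described here.

```python
def one_step_mse(weight, history):
    """Mean squared error of the one-step-ahead forecast y_t = weight * y_{t-1}."""
    pairs = list(zip(history[:-1], history[1:]))
    return sum((weight * prev - nxt) ** 2 for prev, nxt in pairs) / len(pairs)

def finetune(weight, history, steps, lr=0.1):
    """Run `steps` gradient-descent updates of the weight on the history."""
    pairs = list(zip(history[:-1], history[1:]))
    for _ in range(steps):
        # Gradient of the mean squared one-step-ahead error with respect to the weight.
        grad = sum(2 * (weight * prev - nxt) * prev for prev, nxt in pairs) / len(pairs)
        weight -= lr * grad
    return weight

history = [1.0, 1.1, 1.21, 1.331, 1.4641]  # grows 10% per step
pretrained = 1.0                            # hypothetical starting weight
for steps in (0, 5, 50):
    w = finetune(pretrained, history, steps)
    print(steps, round(w, 4), one_step_mse(w, history))
```

More steps move the weight closer to the value that fits this particular series, which is the trade-off `finetune_steps` controls: better adaptation at the price of more computation.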

## Tutorial

[Open in Colab](https://colab.research.google.com/github/Nixtla/nixtla/blob/main/nbs/docs/tutorials/06_finetuning.ipynb)

### Step 1: Import Packages and Initialize Client

First, we import the required packages and initialize the Nixtla client.

```python
import pandas as pd
from nixtla import NixtlaClient
from utilsforecast.losses import mae, mse
from utilsforecast.evaluation import evaluate
```

Next, initialize the `NixtlaClient` instance, providing your API key (or rely on the `NIXTLA_API_KEY` environment variable):

```python initialize-client
nixtla_client = NixtlaClient(
    api_key='my_api_key_provided_by_nixtla'  # Defaults to os.environ.get("NIXTLA_API_KEY")
)
```

title: "Distributed Forecasting with Spark, Dask & Ray"

description: "Scale your time series forecasting with TimeGPT using Spark, Dask, or Ray. Learn distributed computing for millions of time series with Python code examples and best practices."

icon: "microchip"

---

## Distributed Computing for Large-Scale Forecasting

Handling large datasets is a common challenge in time series forecasting. For example, when working with retail data, you may need to forecast sales for 100,000+ products across hundreds of stores, generating millions of forecasts daily. Similarly, when dealing with electricity consumption data, you may need to predict consumption for millions of smart meters across multiple regions in real time.

### Why Distributed Computing for Forecasting?

Distributed computing offers significant advantages for time series forecasting:

- **Speed**: Reduce computation time by 10-100x compared to single-machine processing

- **Scalability**: Handle datasets that don't fit in memory on a single machine

- **Cost-efficiency**: Process more forecasts in less time, optimizing resource utilization

- **Reliability**: Fault-tolerant processing ensures forecasts complete even if individual nodes fail

Nixtla's **TimeGPT** enables you to efficiently handle expansive datasets by integrating distributed computing frameworks (**[Spark](https://spark.apache.org/)**, **[Dask](https://www.dask.org/)**, and **[Ray](https://www.ray.io/)** through **Fugue**) that parallelize forecasts across multiple time series and drastically reduce computation times.

## Getting Started

Before getting started, ensure you have your TimeGPT API key. Upon [registration](https://dashboard.nixtla.io/), you'll receive an email prompting you to confirm your signup. Once confirmed, access your dashboard and navigate to the **API Keys** section to retrieve your key. For detailed setup instructions, see the [Setting Up Your Authentication Key tutorial](/setup/setting_up_your_api_key).

## How to Use TimeGPT with Distributed Computing Frameworks

Using TimeGPT with distributed computing frameworks is straightforward. The process only slightly differs from non-distributed usage.

### Step 1: Instantiate a NixtlaClient class

```python
from nixtla import NixtlaClient

# Replace 'YOUR_API_KEY' with the key obtained from your Nixtla dashboard
client = NixtlaClient(api_key="YOUR_API_KEY")
```
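On shared clusters it is usually safer to read the key from the environment than to hard-code it. A minimal sketch, assuming the `NIXTLA_API_KEY` variable name used elsewhere in these docs; the helper `load_api_key` is hypothetical:

```python
import os

def load_api_key(env_var="NIXTLA_API_KEY"):
    """Return the API key from the environment, or fail with a clear message."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable before creating the client")
    return key
```

The returned value can then be passed as `api_key` when instantiating the client.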

### Step 2: Load your data into a pandas DataFrame

Make sure your data is properly formatted, with each time series uniquely identified (e.g., by store or product).

```python
import pandas as pd

data = pd.read_csv("your_time_series_data.csv")
```
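Before distributing the work, it can help to check that the data really is in long format, with one row per series and timestamp. The sketch below assumes the column names `unique_id`, `ds`, and `y` and works on plain dictionaries (for a pandas DataFrame, `data.to_dict("records")` yields this shape); the helper `check_long_format` is hypothetical.

```python
from collections import Counter

REQUIRED_COLUMNS = ("unique_id", "ds", "y")  # assumed column names

def check_long_format(rows):
    """Return a list of problems found in long-format rows."""
    problems = []
    for i, row in enumerate(rows):
        missing = [c for c in REQUIRED_COLUMNS if c not in row or row[c] is None]
        if missing:
            problems.append(f"row {i}: missing {missing}")
    # A series must not contain the same timestamp twice.
    keys = Counter((r.get("unique_id"), r.get("ds")) for r in rows)
    for (uid, ds), count in keys.items():
        if count > 1:
            problems.append(f"duplicate timestamp {ds} in series {uid}")
    return problems

good = [
    {"unique_id": "store_1", "ds": "2024-01-01", "y": 10.0},
    {"unique_id": "store_1", "ds": "2024-01-02", "y": 12.0},
    {"unique_id": "store_2", "ds": "2024-01-01", "y": 7.0},
]
print(check_long_format(good))
```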

### Step 3: Initialize a distributed computing framework

Follow the links above for examples on setting up each framework.

### Step 4: Use NixtlaClient methods to forecast at scale

Once your framework is initialized and your data is loaded, you can apply the forecasting methods:

```python
# Example function call within the distributed environment
forecast_results = client.forecast(
    df=data,
    h=14  # horizon (e.g., 14 days)
)
```

### Step 5: Stop the distributed computing framework

When you're finished, you may need to terminate your Spark, Dask, or Ray session. This depends on your environment and setup.

Parallelization in these frameworks operates across multiple time series within your dataset. Ensure each series is uniquely identified so the parallelization can be fully leveraged.
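The principle of parallelizing across series can be sketched on a single machine with the standard library: each uniquely identified series is an independent unit of work. This is a conceptual stand-in, not how Fugue, Spark, Dask, or Ray schedule tasks, and `naive_forecast` is a hypothetical placeholder for the real forecasting call.

```python
from concurrent.futures import ThreadPoolExecutor

def naive_forecast(item):
    """Stand-in for a per-series forecast: repeat the last observed value."""
    unique_id, values, h = item
    return unique_id, [values[-1]] * h

def forecast_all(series_by_id, h, max_workers=4):
    """Forecast every series independently; each series is one unit of parallel work."""
    tasks = [(uid, values, h) for uid, values in series_by_id.items()]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(naive_forecast, tasks))

series_by_id = {"store_1": [10, 12, 11], "store_2": [200, 210, 190], "store_3": [5, 5, 6]}
forecasts = forecast_all(series_by_id, h=2)
print(forecasts)
```

If two series shared an identifier, they would be merged into one unit of work, which is why unique identifiers matter.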

## Real-World Use Cases

Distributed forecasting with TimeGPT is essential for:

- **Retail & E-commerce**: Forecast demand for 100,000+ SKUs across multiple locations simultaneously

- **Energy & Utilities**: Predict consumption patterns for millions of smart meters in real time

- **Finance**: Generate forecasts for thousands of stocks, currencies, or commodities

- **IoT & Manufacturing**: Process sensor data from thousands of devices for predictive maintenance

- **Telecommunications**: Forecast network traffic across thousands of cell towers

The distributed approach reduces forecast generation time from hours to minutes, enabling faster decision-making at scale.

## Important Considerations

### When to Use a Distributed Computing Framework

Consider a distributed framework if your dataset:

- Contains millions of observations across multiple time series

- Cannot fit into memory on a single machine

- Requires extensive processing time that is impractical on a single machine

### Choosing the Right Framework

When selecting Spark, Dask, or Ray, weigh your existing infrastructure and your team's expertise. Minimal code changes allow TimeGPT to work with each of these frameworks seamlessly. Pick the framework that aligns with your organization's tools and resources for the most efficient large-scale forecasting efforts.

### Framework Comparison

| Framework | Best For | Ideal Dataset Size | Learning Curve |

Each framework integrates seamlessly with TimeGPT through Fugue, requiring minimal code changes to scale from single-machine to distributed forecasting.

### Best Practices

To maximize the benefits of distributed forecasting:

- **Distribute workloads efficiently**: Spread your forecasts across multiple compute nodes to handle huge datasets without exhausting memory or overwhelming single-machine resources.

- **Use proper identifiers**: Ensure your data has distinct identifiers for each series. Correct labeling is crucial for successful multi-series parallel forecasts.

## Frequently Asked Questions

**Q: Which distributed framework should I choose for TimeGPT?**

Choose **Spark** if you have existing Hadoop infrastructure, **Dask** if you're already using Python/pandas and want the easiest transition, or **Ray** if you're building complex ML pipelines.

**Q: How much faster is distributed forecasting compared to single-machine?**

Speed improvements typically range from 10-100x depending on your dataset size, number of time series, and cluster configuration. Datasets with more independent time series see greater parallelization benefits.

**Q: Do I need to change my TimeGPT code to use distributed computing?**

Minimal changes are required. After initializing your chosen framework (Spark/Dask/Ray), pass a distributed DataFrame (for example, a Spark DataFrame or a Ray Dataset) to the client methods instead of a pandas DataFrame. The API calls remain the same.

**Q: Can I use distributed computing with fine-tuning and cross-validation?**

Yes, TimeGPT supports distributed [fine-tuning](/forecasting/fine-tuning/steps) and [cross-validation](/forecasting/evaluation/cross_validation) across all supported frameworks.

## Related Resources

Explore more TimeGPT capabilities:

- [Spark Integration Guide](/forecasting/forecasting-at-scale/spark) - Detailed Spark setup and examples

- [Dask Integration Guide](/forecasting/forecasting-at-scale/dask) - Dask configuration for TimeGPT

- [Ray Integration Guide](/forecasting/forecasting-at-scale/ray) - Ray distributed forecasting tutorial

- [Fine-tuning TimeGPT](/forecasting/fine-tuning/steps) - Improve accuracy at scale

description: "Scale pandas workflows with Dask and TimeGPT for distributed time series forecasting. Learn to process 10M+ time series in Python with minimal code changes."

icon: "server"

---

## Overview

[Dask](https://www.dask.org/) is an open-source parallel computing library for Python that scales pandas workflows seamlessly. This guide explains how to use TimeGPT from Nixtla with Dask for distributed forecasting tasks.

Dask is ideal when you're already using pandas and need to scale beyond single-machine memory limits—typically for datasets with 10-100 million observations across multiple time series. Unlike Spark, Dask requires minimal code changes from your existing pandas workflow.

## Why Use Dask for Time Series Forecasting?

Dask offers unique advantages for scaling time series forecasting:

- **Pandas-like API**: Minimal code changes from your existing pandas workflows

- **Easy scaling**: Convert pandas DataFrames to Dask with a single line of code

- **Python-native**: Pure Python implementation, no JVM required (unlike Spark)

- **Flexible deployment**: Run on your laptop or scale to a cluster

- **Memory efficiency**: Process datasets larger than RAM through intelligent chunking

Choose Dask when you need to scale from 10 million to 100 million observations and want the smoothest transition from pandas.

**What you'll learn:**

- Simplify distributed computing with Fugue

- Run TimeGPT at scale on a Dask cluster

- Seamlessly convert pandas DataFrames to Dask

## Prerequisites

Before proceeding, make sure you have an [API key from Nixtla](/setup/setting_up_your_api_key).

## How to Use TimeGPT with Dask

[Open in Colab](https://colab.research.google.com/github/Nixtla/nixtla/blob/main/nbs/docs/tutorials/17_computing_at_scale_dask_distributed.ipynb)

### Step 1: Install Fugue and Dask

Fugue provides an easy-to-use interface for distributed computing over frameworks like Dask.

You can install Fugue with:

```bash
pip install "fugue[dask]"
```

If running on a distributed Dask cluster, ensure the `nixtla` library is installed on all worker nodes.

### Step 2: Load Your Data

You can start by loading data into a pandas DataFrame. In this example, we use hourly electricity prices from multiple markets:

TimeGPT with Dask also supports exogenous variables. Refer to the [Exogenous Variables Tutorial](/forecasting/exogenous-variables/numeric_features) for details. Simply substitute pandas DataFrames with Dask DataFrames—the API remains identical.

## Related Resources

Explore more distributed forecasting options:

- [Distributed Computing Overview](/forecasting/forecasting-at-scale/computing_at_scale) - Compare Spark, Dask, and Ray

- [Spark Integration](/forecasting/forecasting-at-scale/spark) - For datasets with 100M+ observations

- [Ray Integration](/forecasting/forecasting-at-scale/ray) - For ML pipeline integration

- [Fine-tuning TimeGPT](/forecasting/fine-tuning/steps) - Improve accuracy at scale

description: "Scale machine learning pipelines with Ray and TimeGPT for distributed time series forecasting. Learn to integrate TimeGPT with Ray for complex ML workflows in Python."

icon: "server"

---

## Overview

[Ray](https://www.ray.io/) is an open-source unified compute framework that helps scale Python workloads for distributed computing. This guide demonstrates how to distribute TimeGPT forecasting jobs on top of Ray.

Ray is ideal for machine learning pipelines with complex task dependencies and datasets with 10+ million observations. Its unified framework excels at orchestrating distributed ML workflows, making it perfect for integrating TimeGPT into broader AI applications.

## Why Use Ray for Time Series Forecasting?

Ray offers unique advantages for ML-focused time series forecasting:

- **ML pipeline integration**: Seamlessly integrate TimeGPT into complex ML workflows with Ray Tune and Ray Serve

- **Task parallelism**: Handle complex task dependencies beyond data parallelism

- **Python-native**: Pure Python with minimal boilerplate code

- **Flexible architecture**: Scale from laptop to cluster with the same code

- **Actor model**: Stateful computations for advanced forecasting scenarios

Choose Ray when you're building ML pipelines, need complex task orchestration, or want to integrate TimeGPT with other ML frameworks like PyTorch or TensorFlow.

**What you'll learn:**

- Install Fugue with Ray support for distributed computing

- Initialize Ray clusters for distributed forecasting

- Run TimeGPT forecasting and cross-validation on Ray

## Prerequisites

Before proceeding, make sure you have an [API key from Nixtla](/setup/setting_up_your_api_key).

When executing on a distributed Ray cluster, ensure the `nixtla` library is installed on all workers.

## How to Use TimeGPT with Ray

[Open in Colab](https://colab.research.google.com/github/Nixtla/nixtla/blob/main/nbs/docs/tutorials/19_computing_at_scale_ray_distributed.ipynb)

### Step 1: Install Fugue and Ray

Fugue provides an easy-to-use interface for distributed computation across frameworks like Ray.

Install Fugue with Ray support:

```bash
pip install "fugue[ray]"
```

### Step 2: Load Your Data

Load your dataset into a pandas DataFrame. This tutorial uses hourly electricity prices from various markets:

### Step 4: Use TimeGPT on Ray

To use TimeGPT with Ray, provide a Ray Dataset to Nixtla's client methods instead of a pandas DataFrame. The API remains the same as local usage.

Instantiate the `NixtlaClient` class to interact with Nixtla's API:

```python
from nixtla import NixtlaClient

nixtla_client = NixtlaClient(
    api_key='my_api_key_provided_by_nixtla'
)
```

You can use any method from the `NixtlaClient`, such as `forecast` or `cross_validation`.

<Tabs>

<Tab title="Forecast Example">

```python
fcst_df = nixtla_client.forecast(ray_df, h=12)
fcst_df.to_pandas().tail()
```

Public API models supported include `timegpt-1` (default) and `timegpt-1-long-horizon`. For long horizon forecasting, see the [long-horizon model tutorial](/forecasting/model-version/longhorizon_model).

</Tab>

<Tab title="Cross-validation Example">

```python
cv_df = nixtla_client.cross_validation(
    ray_df,
    h=12,
    freq='H',
    n_windows=5,
    step_size=2
)
cv_df.to_pandas().tail()
```

</Tab>

</Tabs>
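To see what `h`, `n_windows`, and `step_size` mean for the cross-validation call, the sketch below computes the train and test boundaries of each rolling window. It assumes the last window ends at the final observation and each earlier window is shifted back by `step_size`; treat that layout as an assumption and check the `cross_validation` reference for the exact behavior. The helper name is hypothetical.

```python
def cross_validation_windows(n_obs, h, n_windows, step_size):
    """Return (train_end, test_end) index pairs, oldest window first.

    Each window trains on observations [0, train_end) and is evaluated on
    [train_end, test_end). The last window ends at the final observation.
    """
    needed = h + step_size * (n_windows - 1)
    if n_obs <= needed:
        raise ValueError("Series is too short for the requested windows")
    first_cutoff = n_obs - needed
    return [
        (first_cutoff + i * step_size, first_cutoff + i * step_size + h)
        for i in range(n_windows)
    ]

# Same settings as the example above, on a hypothetical series of 100 observations.
windows = cross_validation_windows(n_obs=100, h=12, n_windows=5, step_size=2)
for train_end, test_end in windows:
    print(f"train on [0, {train_end}), test on [{train_end}, {test_end})")
```

With `step_size` smaller than `h`, consecutive test windows overlap, so the same timestamps are forecast from several cutoffs.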

### Step 5: Shutdown Ray

Always shut down Ray after you finish your tasks to free up resources:

```python
ray.shutdown()
```

## Working with Exogenous Variables

TimeGPT with Ray also supports exogenous variables. Refer to the [Exogenous Variables Tutorial](/forecasting/exogenous-variables/numeric_features) for details. Simply substitute pandas DataFrames with Ray Datasets—the API remains identical.

## Related Resources

Explore more distributed forecasting options:

- [Distributed Computing Overview](/forecasting/forecasting-at-scale/computing_at_scale) - Compare Spark, Dask, and Ray

- [Spark Integration](/forecasting/forecasting-at-scale/spark) - For datasets with 100M+ observations

- [Dask Integration](/forecasting/forecasting-at-scale/dask) - For datasets with 10M-100M observations

- [Fine-tuning TimeGPT](/forecasting/fine-tuning/steps) - Improve accuracy at scale

description: "Scale enterprise time series forecasting with Spark and TimeGPT. Learn to process 100M+ observations across distributed clusters with Python and PySpark."

icon: "server"

---

## Overview

[Spark](https://spark.apache.org/) is an open-source distributed compute framework designed for large-scale data processing. This guide demonstrates how to use TimeGPT with Spark to perform forecasting and cross-validation across distributed clusters.

Spark is ideal for enterprise environments with existing Hadoop infrastructure and datasets exceeding 100 million observations. Its robust distributed architecture handles massive-scale time series forecasting with fault tolerance and high performance.

## Why Use Spark for Time Series Forecasting?

Spark offers unique advantages for enterprise-scale time series forecasting:

- **Enterprise-grade scalability**: Handle datasets with 100M+ observations across distributed clusters

- **Hadoop integration**: Seamlessly integrate with existing HDFS and Hadoop ecosystems

- **Mature ecosystem**: Leverage Spark's rich ecosystem of tools and libraries

- **Multi-language support**: Work with Python (PySpark), Scala, or Java

Choose Spark when you have enterprise infrastructure, datasets exceeding 100 million observations, or need robust fault tolerance for mission-critical forecasting.

**What you'll learn:**

- Install Fugue with Spark support for distributed computing

- Convert pandas DataFrames to Spark DataFrames

- Run TimeGPT forecasting and cross-validation on Spark clusters

## Prerequisites

Before proceeding, make sure you have an [API key from Nixtla](/setup/setting_up_your_api_key).

If executing on a distributed Spark cluster, ensure the `nixtla` library is installed on all worker nodes for consistent execution.

## How to Use TimeGPT with Spark

[Open in Colab](https://colab.research.google.com/github/Nixtla/nixtla/blob/main/nbs/docs/tutorials/16_computing_at_scale_spark_distributed.ipynb)

### Step 1: Install Fugue and Spark

Fugue provides a convenient interface to distribute Python code across frameworks like Spark.

Install Fugue with Spark support:

```bash
pip install "fugue[spark]"
```

To work with TimeGPT, make sure you have the `nixtla` library installed as well.

### Step 2: Load Your Data

Load the dataset into a pandas DataFrame. In this example, we use hourly electricity price data from different markets:

### Step 3: Initialize Spark

Create a Spark session and convert your pandas DataFrame to a Spark DataFrame:

```python
from pyspark.sql import SparkSession

spark = SparkSession.builder.getOrCreate()
spark_df = spark.createDataFrame(df)
spark_df.show(5)
```

### Step 4: Use TimeGPT on Spark

To use TimeGPT with Spark, provide a Spark DataFrame to Nixtla's client methods instead of a pandas DataFrame. This is the main difference from local usage.

Instantiate the `NixtlaClient` class to interact with Nixtla's API:

```python
from nixtla import NixtlaClient

nixtla_client = NixtlaClient(
    api_key='my_api_key_provided_by_nixtla'
)
```

You can use any method from the `NixtlaClient`, such as `forecast` or `cross_validation`.

<Tabs>

<Tab title="Forecast Example">

```python
fcst_df = nixtla_client.forecast(spark_df, h=12)
fcst_df.show(5)
```

When using Azure AI endpoints, specify `model="azureai"`:

```python
nixtla_client.forecast(
    spark_df,
    h=12,
    model="azureai"
)
```

The public API supports two models: `timegpt-1` (default) and `timegpt-1-long-horizon`. For long horizon forecasting, see the [long-horizon model tutorial](/forecasting/model-version/longhorizon_model).

</Tab>

<Tab title="Cross-validation Example">

```python
cv_df = nixtla_client.cross_validation(
    spark_df,
    h=12,
    n_windows=5,
    step_size=2
)
cv_df.show(5)
```

</Tab>

</Tabs>

### Step 5: Stop Spark

After completing your tasks, stop the Spark session to free resources:

```python
spark.stop()
```

## Working with Exogenous Variables

TimeGPT with Spark also supports exogenous variables. Refer to the [Exogenous Variables Tutorial](/forecasting/exogenous-variables/numeric_features) for details. Simply substitute pandas DataFrames with Spark DataFrames—the API remains identical.

## Related Resources

Explore more distributed forecasting options:

- [Distributed Computing Overview](/forecasting/forecasting-at-scale/computing_at_scale) - Compare Spark, Dask, and Ray

- [Dask Integration](/forecasting/forecasting-at-scale/dask) - For datasets with 10M-100M observations

- [Ray Integration](/forecasting/forecasting-at-scale/ray) - For ML pipeline integration

- [Fine-tuning TimeGPT](/forecasting/fine-tuning/steps) - Improve accuracy at scale

description: "Advanced techniques to enhance TimeGPT forecast accuracy for energy and electricity."

icon: "bullseye"

---

[Open in Colab](https://colab.research.google.com/github/Nixtla/nixtla/blob/main/nbs/docs/tutorials/22_how_to_improve_forecast_accuracy.ipynb)

<Frame caption="Hourly electricity price for Germany (training period highlighted).">

</Frame>

description: "Master long-horizon time series forecasting in Python using TimeGPT. Learn to predict 2+ seasonal periods ahead with confidence intervals and uncertainty quantification."

icon: "clock"

---

## What is Long-Horizon Forecasting?

Long-horizon forecasting refers to predictions far into the future, typically exceeding two seasonal periods. For example, forecasting electricity demand 3 months ahead for hourly data, or predicting sales 2 years ahead for monthly data. The exact threshold depends on data frequency. The further you forecast, the more uncertainty you face.

The key challenge with long-horizon forecasting is that these predictions extend so far into the future that they may be influenced by unforeseen factors not present in the initial dataset. This means long-horizon forecasts generally involve greater risk and uncertainty compared to short-term predictions.

To address these unique challenges, Nixtla provides the specialized `timegpt-1-long-horizon` model in TimeGPT. You can access this model by simply specifying `model="timegpt-1-long-horizon"` when calling `nixtla_client.forecast`.

Use the `timegpt-1-long-horizon` model when your forecast horizon exceeds two complete seasonal cycles in your data.
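The rule of thumb above can be captured in a small helper that picks the model name from the horizon and the seasonal period. The helper `choose_model` is hypothetical, and the seasonal period is something you supply (for example, 24 for hourly data with a daily cycle).

```python
def choose_model(h, season_length):
    """Pick the long-horizon model when h exceeds two full seasonal cycles."""
    if h <= 0 or season_length <= 0:
        raise ValueError("h and season_length must be positive")
    return "timegpt-1-long-horizon" if h > 2 * season_length else "timegpt-1"

print(choose_model(h=96, season_length=24))  # four daily cycles of hourly data
print(choose_model(h=24, season_length=24))  # a single daily cycle
```

The returned string can be passed as the `model` argument of `nixtla_client.forecast`.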

## How to Use the Long-Horizon Model

[Open in Colab](https://colab.research.google.com/github/Nixtla/nixtla/blob/main/nbs/docs/tutorials/04_longhorizon.ipynb)

### Step 1: Import Packages

Start by installing and importing the required packages, then initialize the Nixtla client:

```python
from nixtla import NixtlaClient
from datasetsforecast.long_horizon import LongHorizon
from utilsforecast.losses import mae

nixtla_client = NixtlaClient(
    api_key='my_api_key_provided_by_nixtla'  # defaults to os.environ.get("NIXTLA_API_KEY")
)
```

### Step 2: Load the Data

We'll demonstrate long-horizon forecasting using the ETTh1 dataset, which measures oil temperatures and load variations on an electricity transformer in China. Here, we only forecast oil temperatures (`y`):

### Step 3: Forecasting with the Long-Horizon Model

TimeGPT's `timegpt-1-long-horizon` model is optimized for predictions far into the future. Specify it like so:

```python
fcst_df = nixtla_client.forecast(
    df=input_seq,
    h=96,
    level=[90],
    finetune_steps=10,
    finetune_loss='mae',
    model='timegpt-1-long-horizon',
    time_col='ds',
    target_col='y'
)
```

Next, plot the forecast along with 90% confidence intervals:

```python
nixtla_client.plot(
    Y_df[-168:],
    fcst_df,
    models=['TimeGPT'],
    level=[90],
    time_col='ds',
    target_col='y'
)
```

<Frame caption="TimeGPT Long-Horizon Forecast with 90% Confidence Intervals">

</Frame>

### Step 4: Evaluation

Finally, assess forecast performance using Mean Absolute Error (MAE):

The model achieves an MAE of approximately 0.146, indicating strong performance for these longer-range forecasts.

## Frequently Asked Questions

**Q: What's the difference between timegpt-1 and timegpt-1-long-horizon?**

The `timegpt-1-long-horizon` model is specifically trained for extended forecast horizons (2+ seasonal periods), providing better accuracy for long-range predictions.

**Q: How far ahead can I forecast with the long-horizon model?**

The optimal horizon depends on your data frequency and patterns. Generally, the model performs well up to 4-6 seasonal cycles ahead.

**Q: Can I use exogenous variables with long-horizon forecasting?**

Yes, TimeGPT supports exogenous variables for improved long-horizon accuracy. See our [exogenous variables guide](/forecasting/exogenous-variables/numeric_features) for details.

## Related Resources

Learn more about TimeGPT capabilities:

- [Fine-tuning TimeGPT](/forecasting/fine-tuning/steps) - Improve accuracy for your specific dataset

description: "Learn how to generate quantile forecasts and prediction intervals to capture uncertainty in your forecasts."

icon: "question"

---

In time series forecasting, it is important to consider the full probability distribution of the predictions rather than a single point estimate. This provides a more accurate representation of the uncertainty around the forecasts and allows better decision-making.

**TimeGPT** supports uncertainty quantification through quantile forecasts and prediction intervals.

## Why Consider the Full Probability Distribution?

When you focus on a single point prediction, you lose valuable information about the range of possible outcomes. By quantifying uncertainty, you can:

- Identify best-case and worst-case scenarios

- Improve risk management and contingency planning

- Gain confidence in decisions that rely on forecast accuracy
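To connect prediction intervals and quantiles, the sketch below maps an interval level (such as 90) to its lower and upper quantiles and reads an interval off a set of simulated outcomes. The simulated values are synthetic, the helper names are hypothetical, and this shows only the relationship between levels and quantiles, not how TimeGPT computes its intervals internally.

```python
import math
import random

def level_to_quantiles(level):
    """Map a central interval level in percent (e.g. 90) to its lower and upper quantiles."""
    if not 0 < level < 100:
        raise ValueError("level must be between 0 and 100")
    return (100 - level) / 200, (100 + level) / 200

def nearest_rank_quantile(sorted_values, q):
    """Smallest value with at least a fraction q of the sample at or below it."""
    rank = max(1, math.ceil(q * len(sorted_values)))
    return sorted_values[rank - 1]

def prediction_interval(samples, level):
    """Empirical central interval that covers `level` percent of the samples."""
    lower_q, upper_q = level_to_quantiles(level)
    ordered = sorted(samples)
    return nearest_rank_quantile(ordered, lower_q), nearest_rank_quantile(ordered, upper_q)

random.seed(0)
# Synthetic simulated outcomes for one future time step.
simulated = [random.gauss(100.0, 10.0) for _ in range(1000)]
lower, upper = prediction_interval(simulated, level=90)
print(level_to_quantiles(90), lower, upper)
```

A 90% interval therefore corresponds to the 5% and 95% quantiles: the lower bound is a plausible worst case and the upper bound a plausible best case, or the reverse, depending on what you are forecasting.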