TextMonkey (#75)

* textmonkey

* textmonkey code

* Delete README_cn.md

---------

Co-authored-by:  Yuliang Liu <34134635+Yuliang-Liu@users.noreply.github.com>

Yuliang Liu <34134635+Yuliang-Liu@users.noreply.github.com>

Showing

384 KB

images/logo_vlr.png

deleted

100644 → 0

13.8 KB

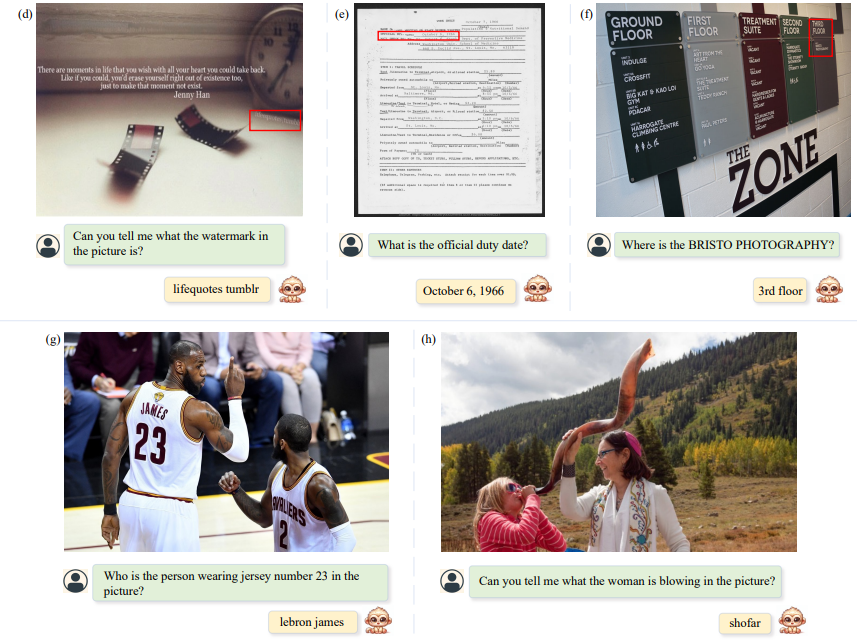

images/qa_1.png

deleted

100644 → 0

742 KB

8.65 MB

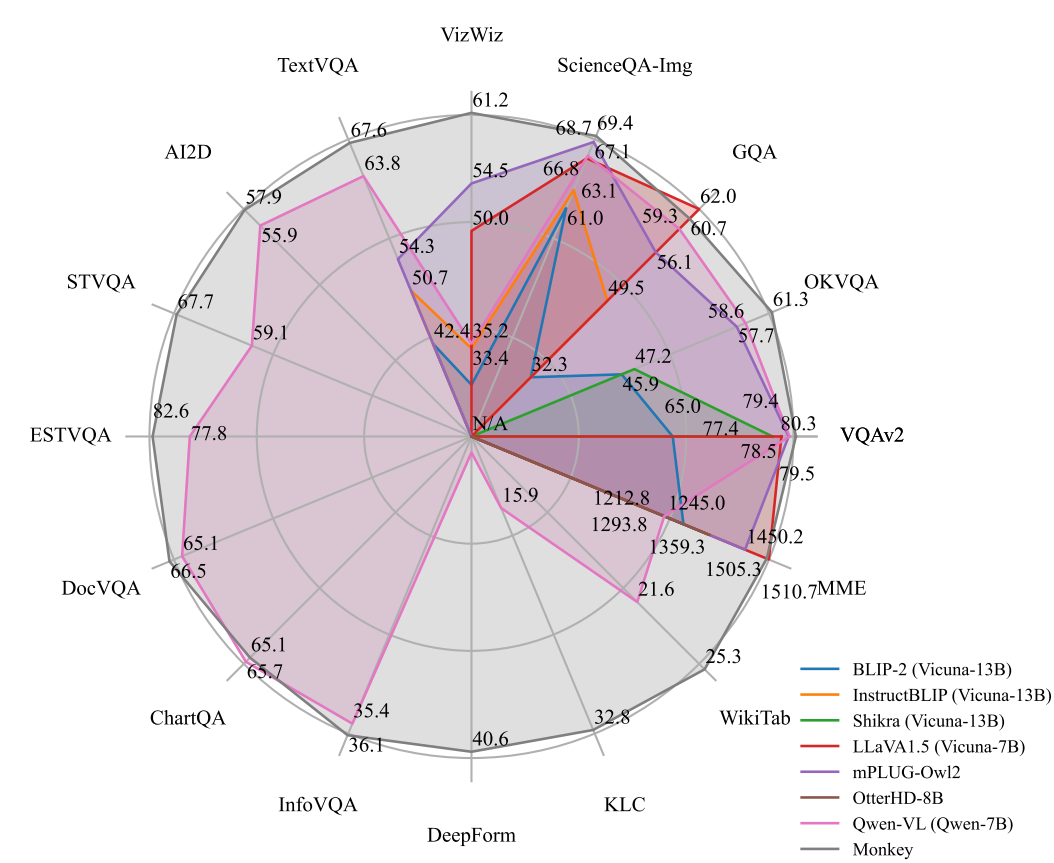

images/radar.pdf

deleted

100644 → 0

File deleted

images/radar.png

deleted

100644 → 0

205 KB

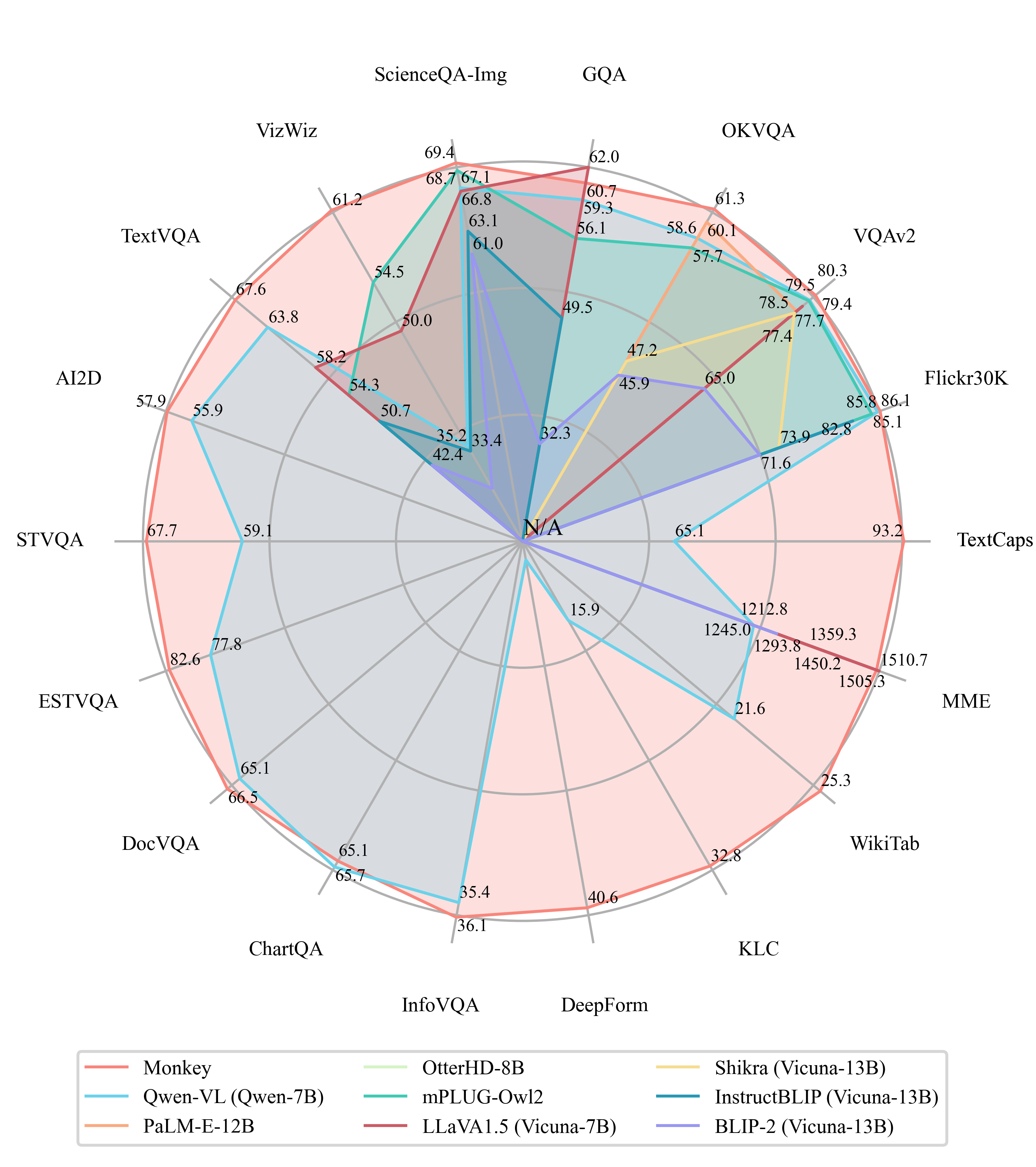

images/radar_1.png

deleted

100644 → 0

1.01 MB

inference.py

0 → 100644