Merge branch 'master' of github.com:open-mmlab/mmdetection

Conflicts: tools/train.py

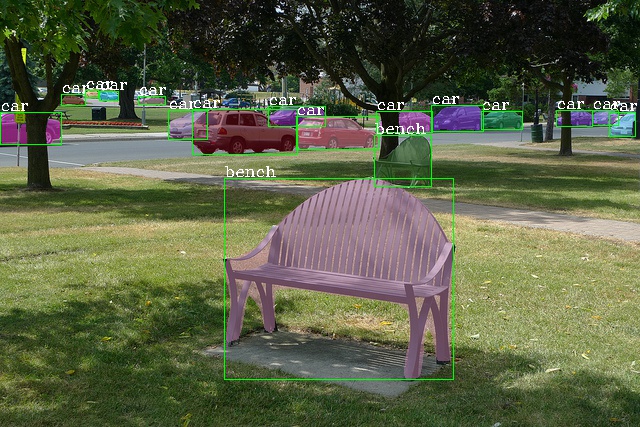

demo/coco_test_12510.jpg (new file, mode 0 → 100644, 179 KB)