init


README.md (new file, mode 0 → 100644)

assets/qwen2.5.png (new file, mode 0 → 100644; 112 KB, 32.7 KB)

assets/results.png (new file, mode 0 → 100644; 25.6 KB, 39.3 KB)

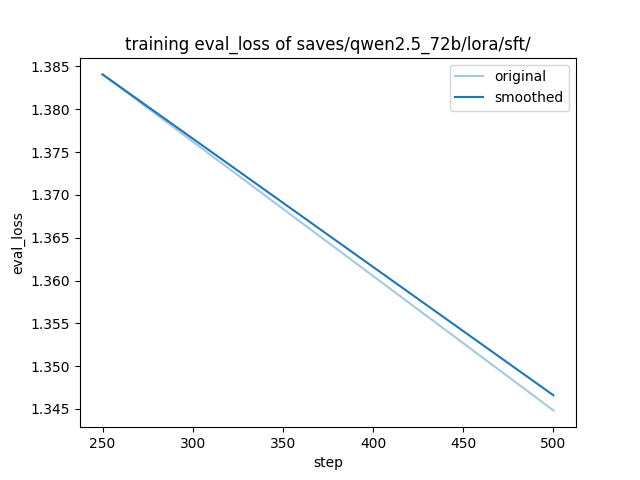

assets/training_loss.png (new file, mode 0 → 100644; 48 KB)

icon.png (new file, mode 0 → 100644; 53.8 KB)