"sgl-kernel/vscode:/vscode.git/clone" did not exist on "1bd5316873ee0ce327a5e92c0dc6bc799ff0d59c"

Initial commit

Showing

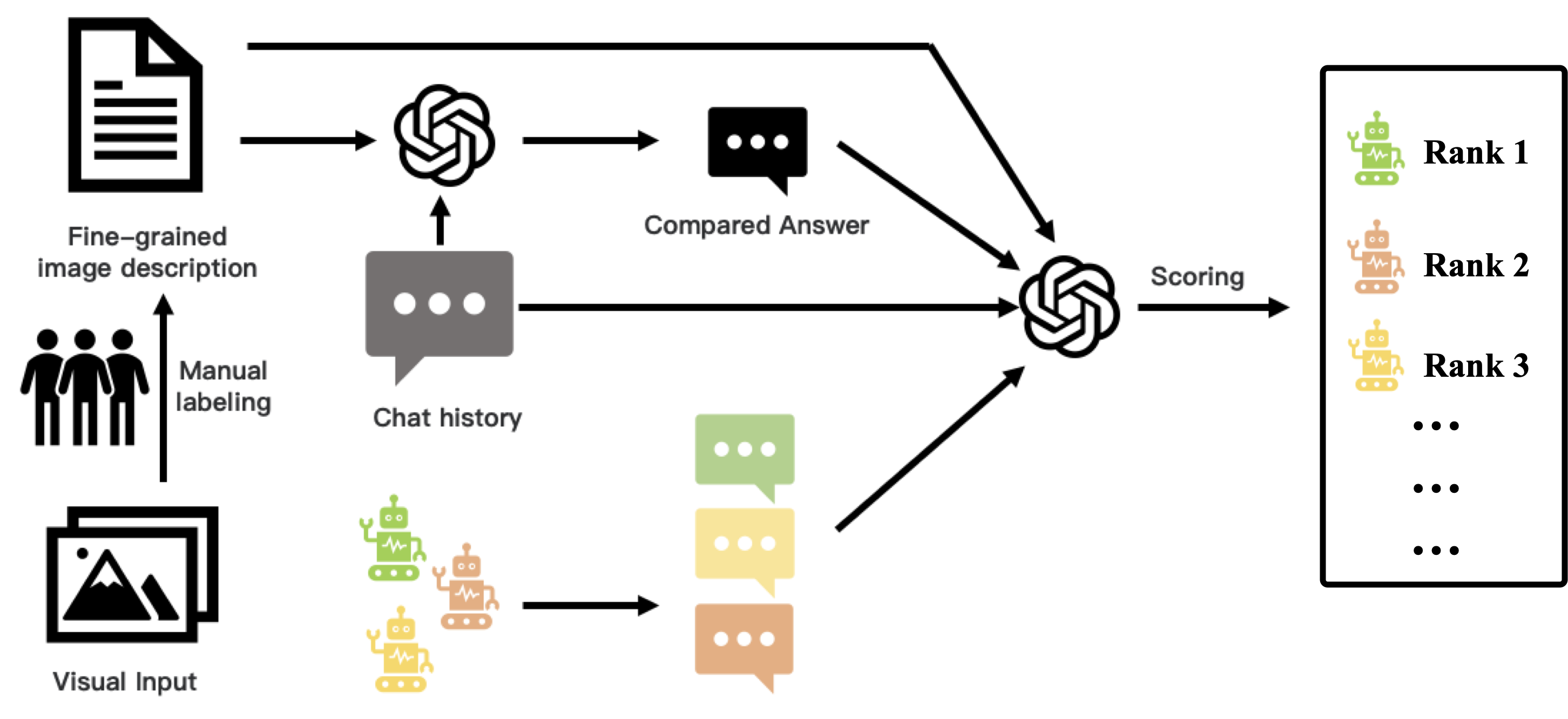

assets/touchstone_eval.png

0 → 100644

467 KB

assets/touchstone_logo.png

0 → 100644

1.01 MB

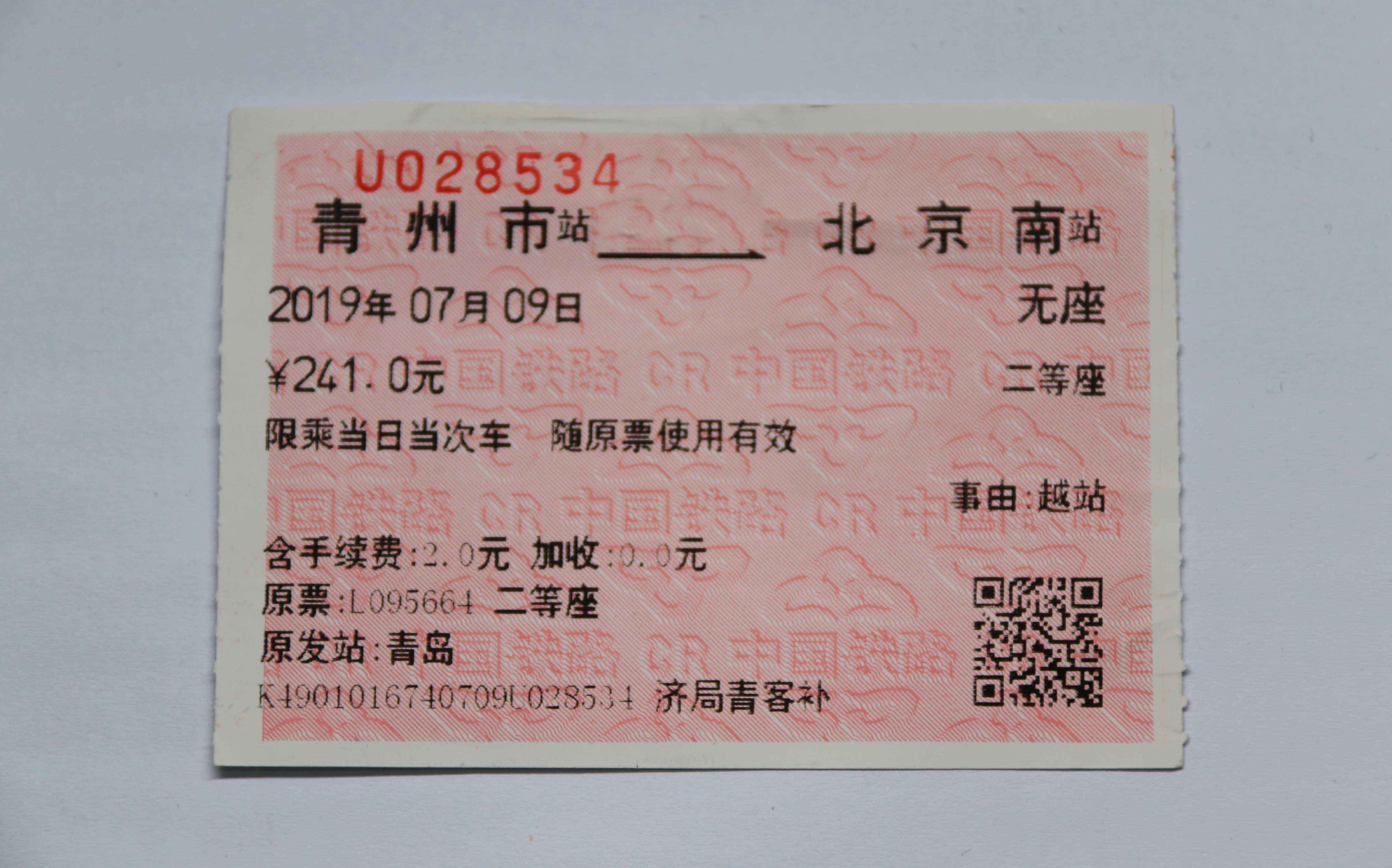

assets/train_ticket.jpg

0 → 100644

474 KB

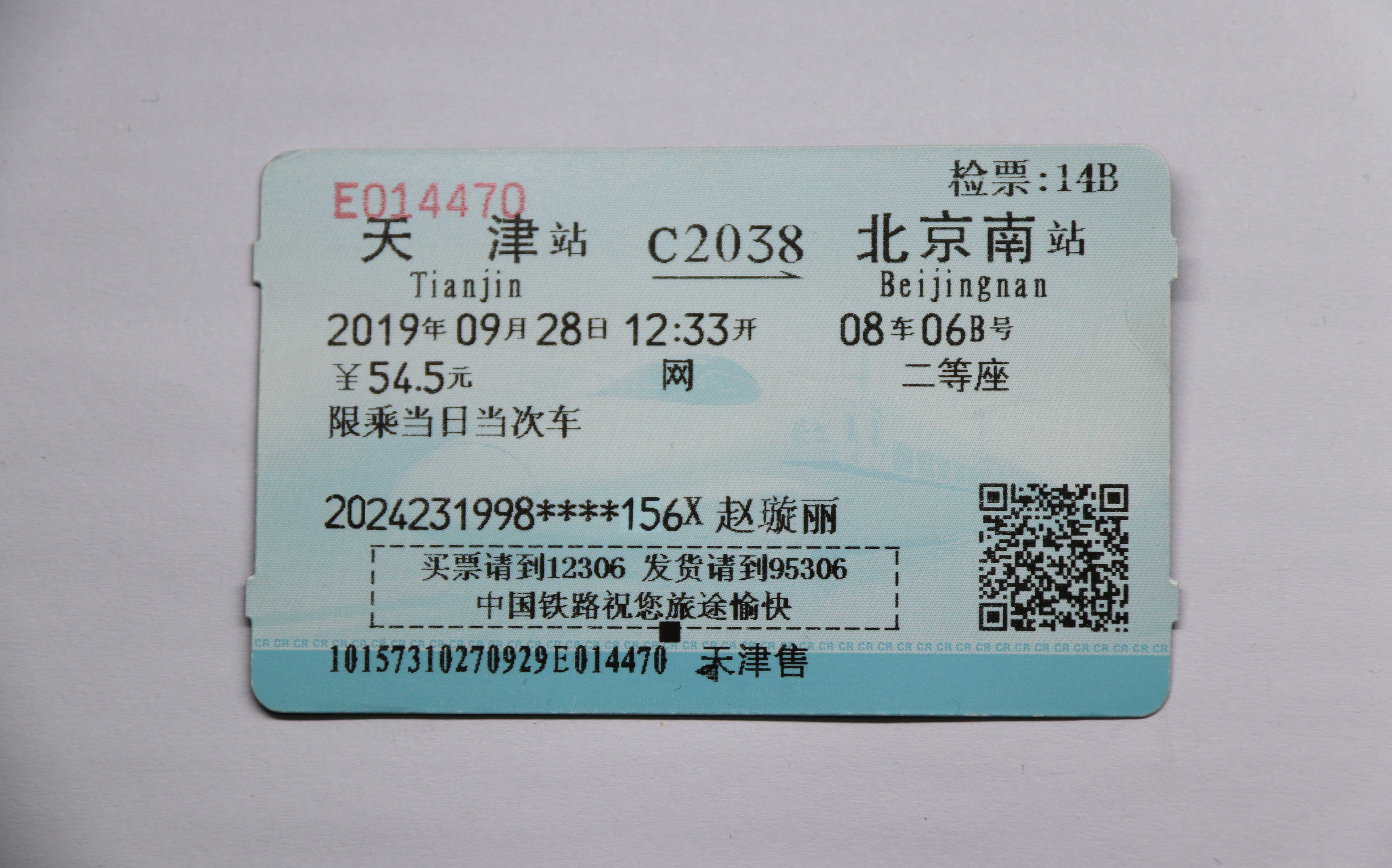

assets/train_ticket2.jpg

0 → 100644

755 KB

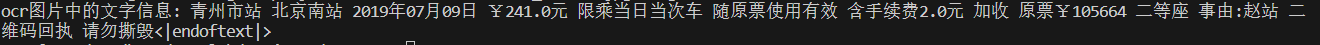

assets/train_ticket_info.png

0 → 100644

10.4 KB

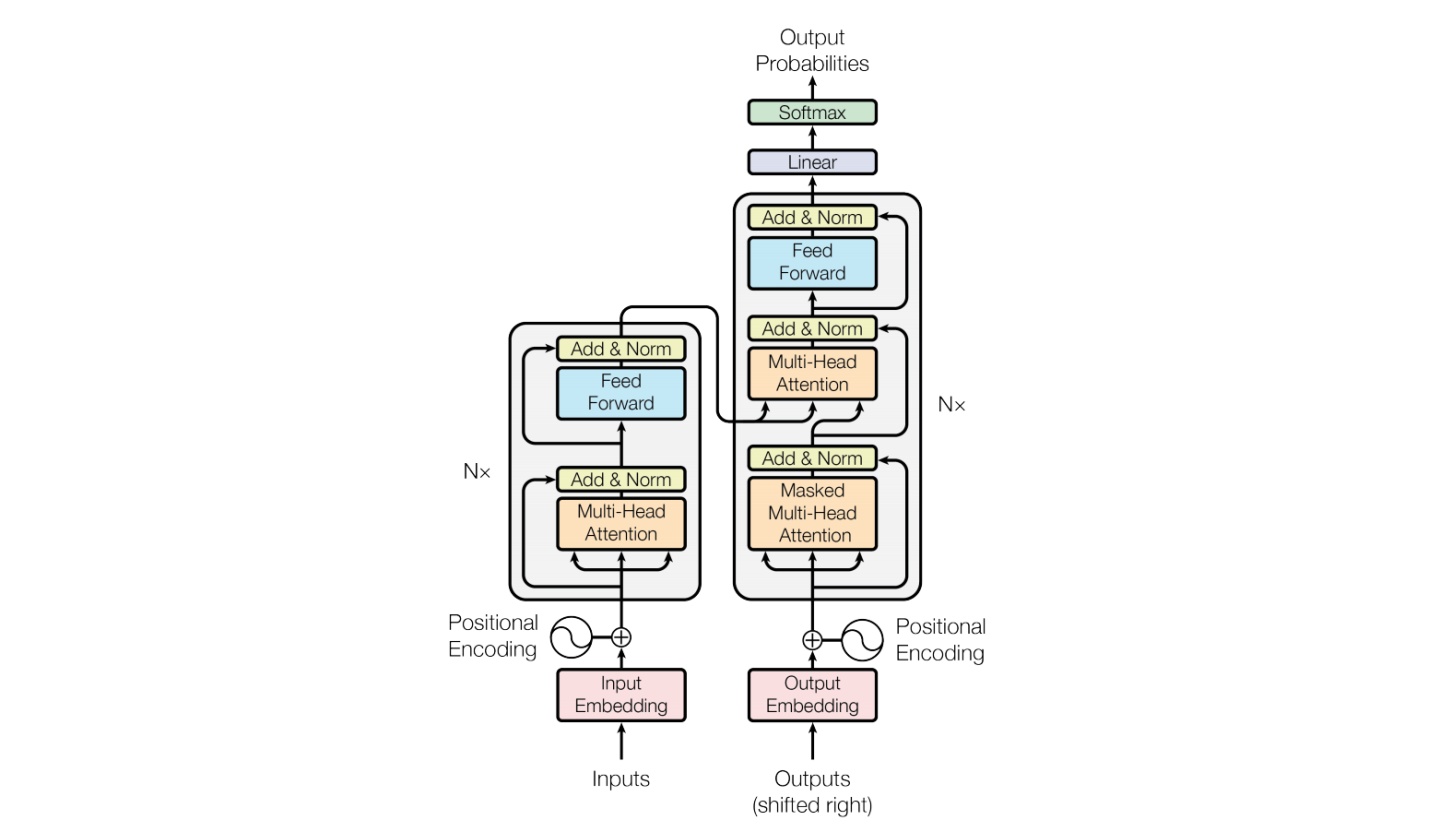

assets/transformer.jpg

0 → 100644

87.9 KB

assets/transformer.png

0 → 100644

112 KB

assets/wechat.png

0 → 100644

173 KB

docker/Dockerfile

0 → 100644

docker/requirements.txt

0 → 100644

eval_mm/EVALUATION.md

0 → 100644

eval_mm/data

0 → 100644

eval_mm/evaluate_caption.py

0 → 100644

eval_mm/evaluate_vqa.py

0 → 100644

eval_mm/mmbench/MMBENCH.md

0 → 100644