Initial commit

finetune/finetune_lora_ds.sh

0 → 100644

label.py

0 → 100644

merge.py

0 → 100644

model.properties

0 → 100644

openai_api.py

0 → 100644

qwen_vl_chat_inference.py

0 → 100644

qwen_vl_inference.py

0 → 100644

requirements.txt

0 → 100644

transformers
accelerate
tiktoken
einops
transformers_stream_generator
scipy
torchvision
pillow
tensorboard
matplotlib
tk
shutilwhich
deepspeed
\ No newline at end of file
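The requirements.txt above pins no versions, so what gets installed depends on the environment. As a minimal sketch (not part of this commit), the script below checks which of the listed packages are already installed. The helper names `parse_requirements` and `find_missing` are hypothetical, and the sketch assumes each line is a bare distribution name, as in the file shown here.

```python
from importlib import metadata

# Package names copied from the requirements.txt added in this commit.
REQUIREMENTS = """\
transformers
accelerate
tiktoken
einops
transformers_stream_generator
scipy
torchvision
pillow
tensorboard
matplotlib
tk
shutilwhich
deepspeed
"""


def parse_requirements(text):
    """Return the package names in `text`, skipping blanks and comments."""
    names = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop trailing comments
        if line:
            names.append(line)
    return names


def find_missing(names):
    """Return the names that have no installed distribution metadata."""
    missing = []
    for name in names:
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    missing = find_missing(parse_requirements(REQUIREMENTS))
    if missing:
        print("Not installed:", ", ".join(missing))
    else:
        print("All listed packages are installed.")
```

Anything reported as missing can then be installed with `pip install -r requirements.txt`.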

requirements_openai_api.txt

0 → 100644

requirements_web_demo.txt

0 → 100644

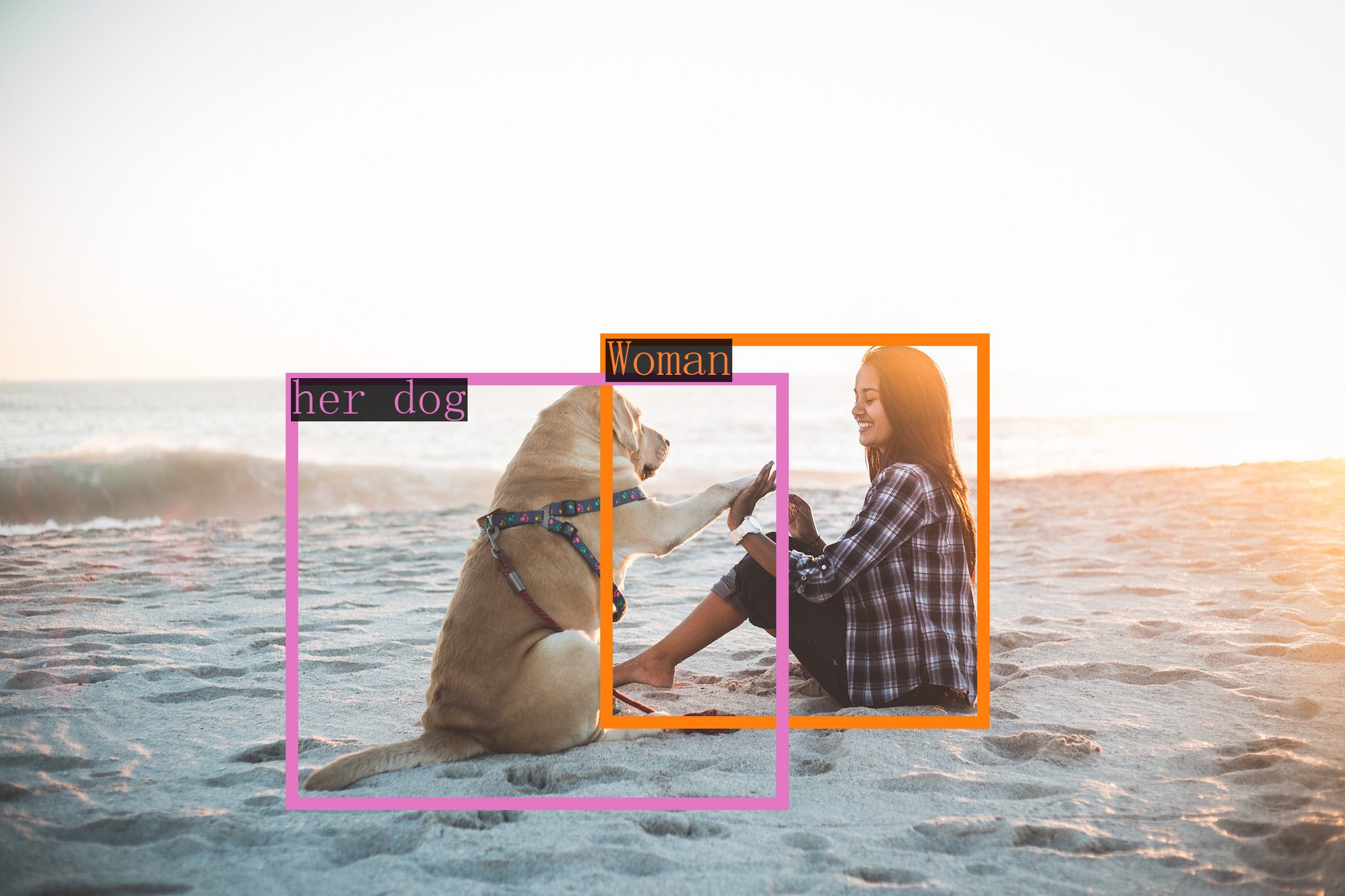

saves/2.jpg

0 → 100644

242 KB

saves/dcu_result.txt

0 → 100644

saves/gpu_result.txt

0 → 100644

touchstone/README.md

0 → 100644

touchstone/README_CN.md

0 → 100644

touchstone/README_JA.md

0 → 100644

touchstone/README_KO.md

0 → 100644