Initial commit

eval_mm/mme/EVAL_MME.md

0 → 100644

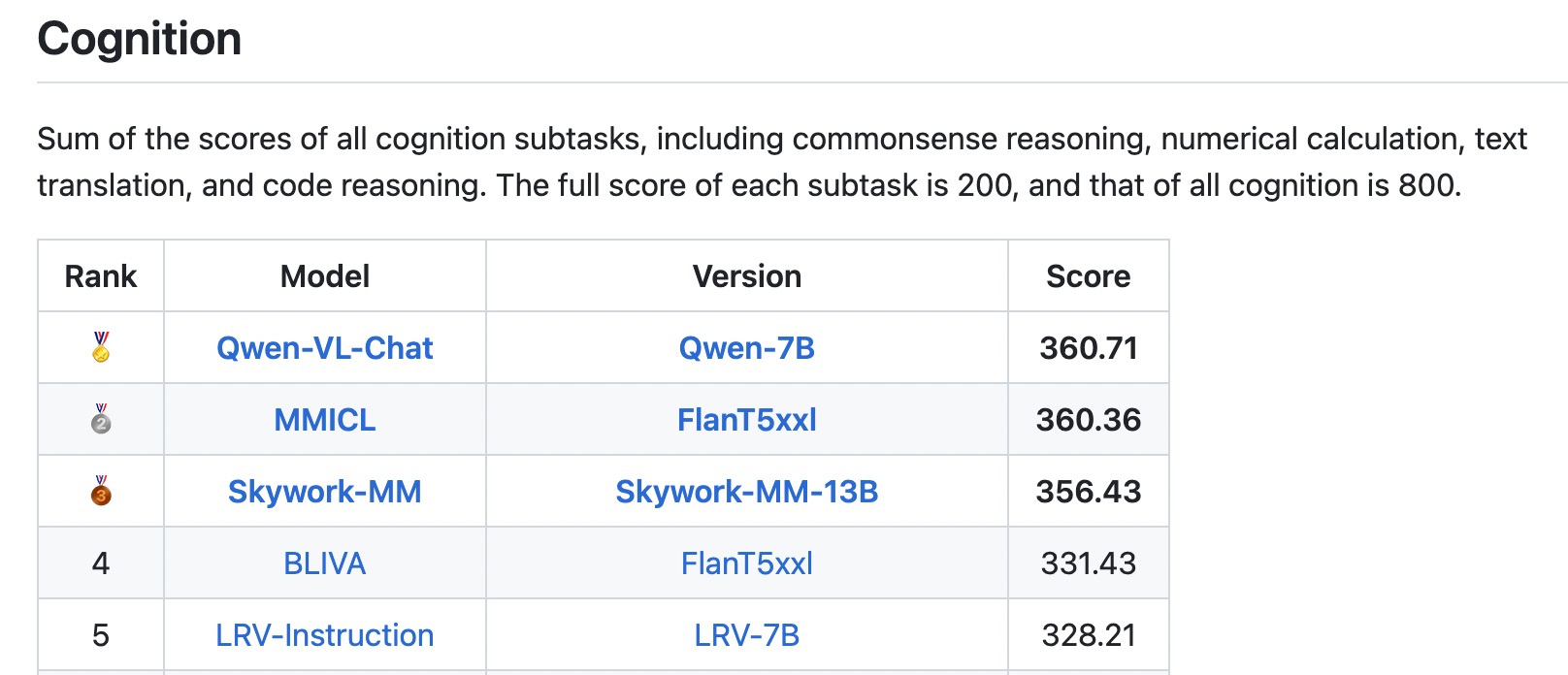

eval_mm/mme/cognition.jpg

0 → 100644

152 KB

eval_mm/mme/eval.py

0 → 100644

eval_mm/mme/get_images.py

0 → 100644

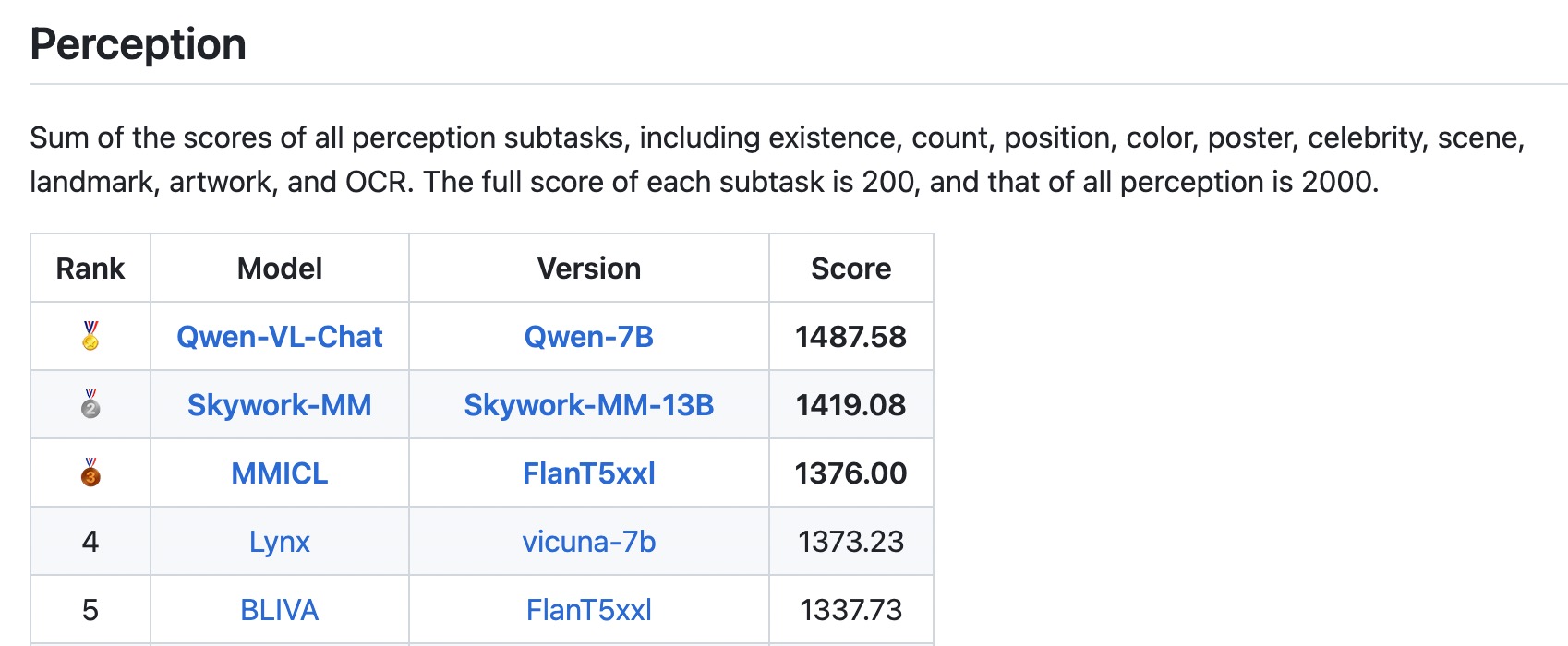

eval_mm/mme/perception.jpg

0 → 100644

147 KB

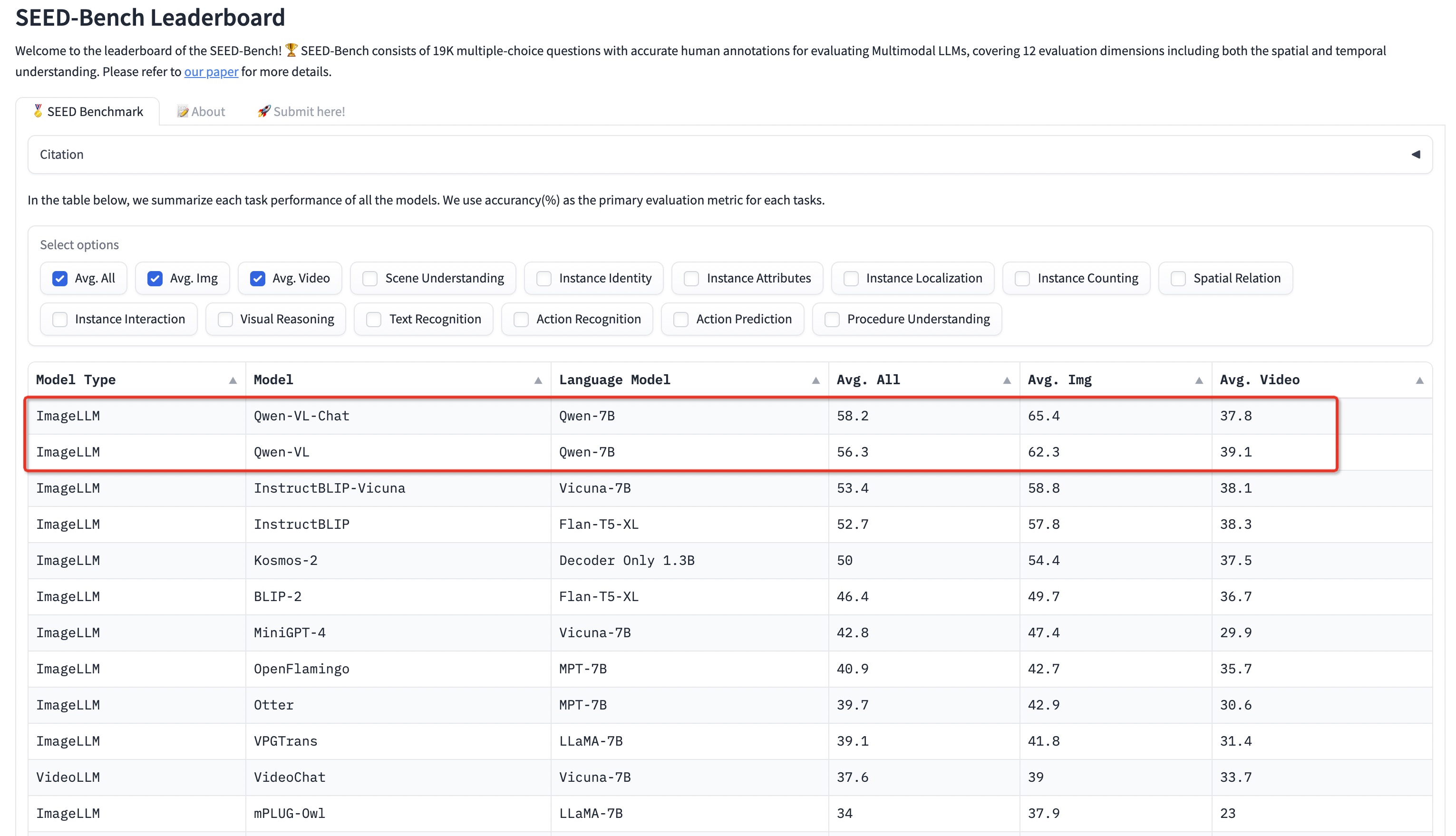

eval_mm/seed_bench/eval.py

0 → 100644

528 KB

eval_mm/seed_bench/trans.py

0 → 100644

eval_mm/vqa.py

0 → 100644

eval_mm/vqa_eval.py

0 → 100644

finetune.py

0 → 100644

finetune/finetune_ds.sh

0 → 100644