Initial commit

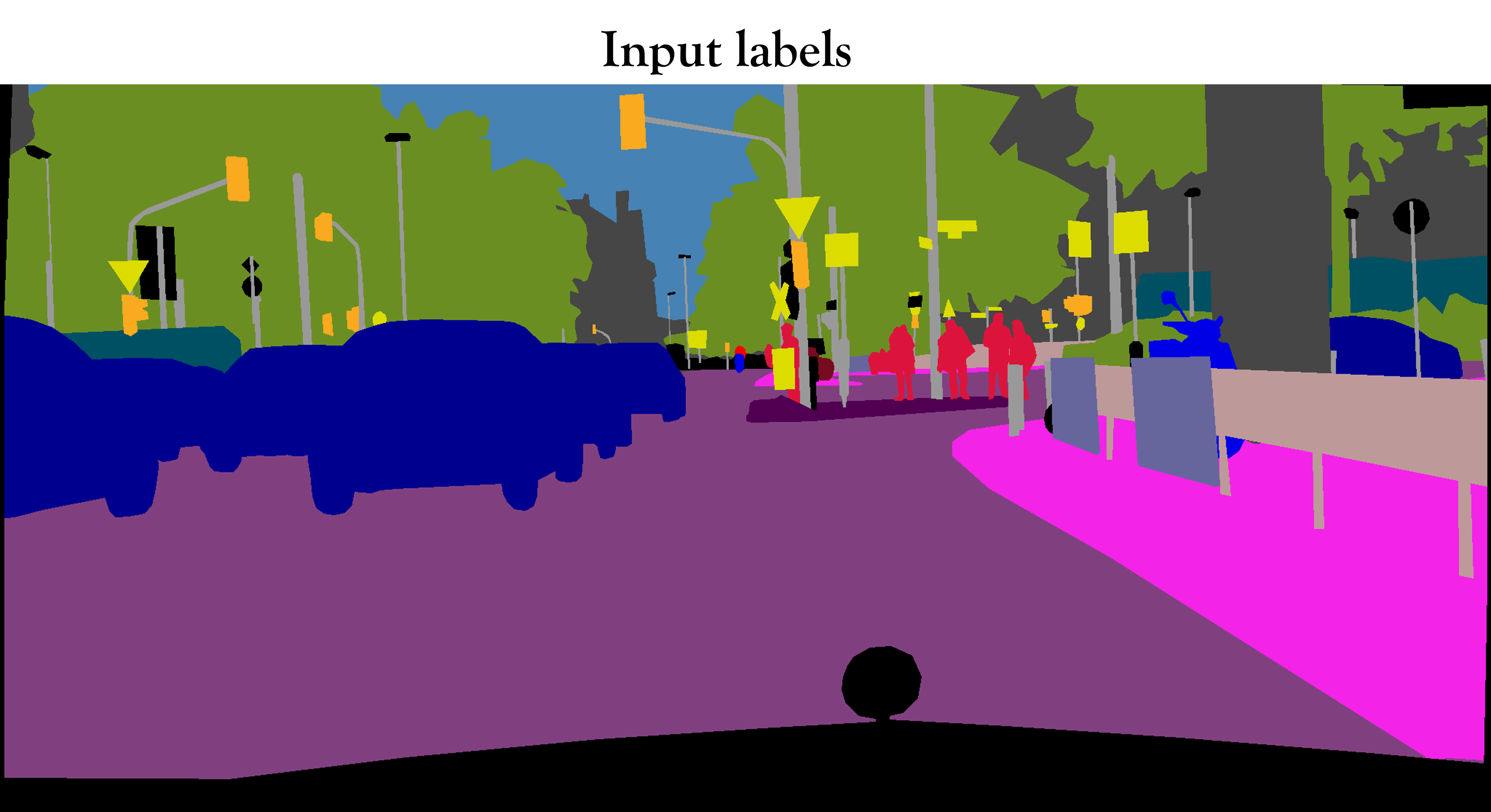

imgs/teaser_label.png

0 → 100644

465 KB

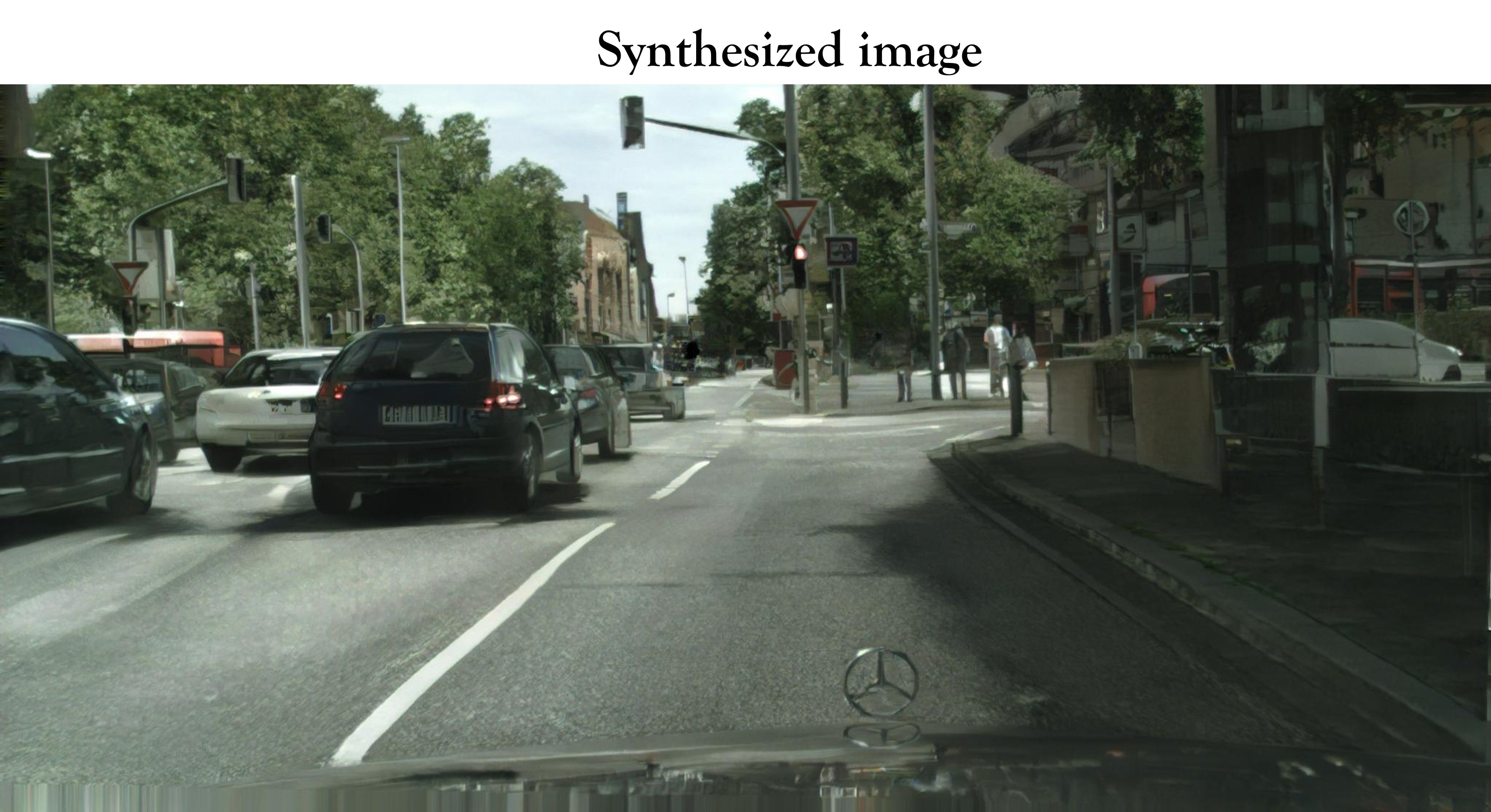

imgs/teaser_ours.jpg

0 → 100644

657 KB

imgs/teaser_style.gif

0 → 100644

(Image diff not shown: the file is too large to display.)

models/__init__.py

0 → 100644

models/base_model.py

0 → 100644

models/models.py

0 → 100644

models/networks.py

0 → 100644

models/pix2pixHD_model.py

0 → 100644

models/ui_model.py

0 → 100644

options/__init__.py

0 → 100644

options/base_options.py

0 → 100644

options/test_options.py

0 → 100644

options/train_options.py

0 → 100644

pix2pixHD_README.md

0 → 100644

precompute_feature_maps.py

0 → 100644

run_engine.py

0 → 100644

scripts/test_1024p.sh

0 → 100644

scripts/test_1024p_feat.sh

0 → 100644

scripts/test_512p.sh

0 → 100644

scripts/test_512p_feat.sh

0 → 100644