First commit

docs/CONTRIBUTING.md

0 → 100644

docs/DATA.md

0 → 100644

docs/EVAL.md

0 → 100644

docs/INSTALL.md

0 → 100644

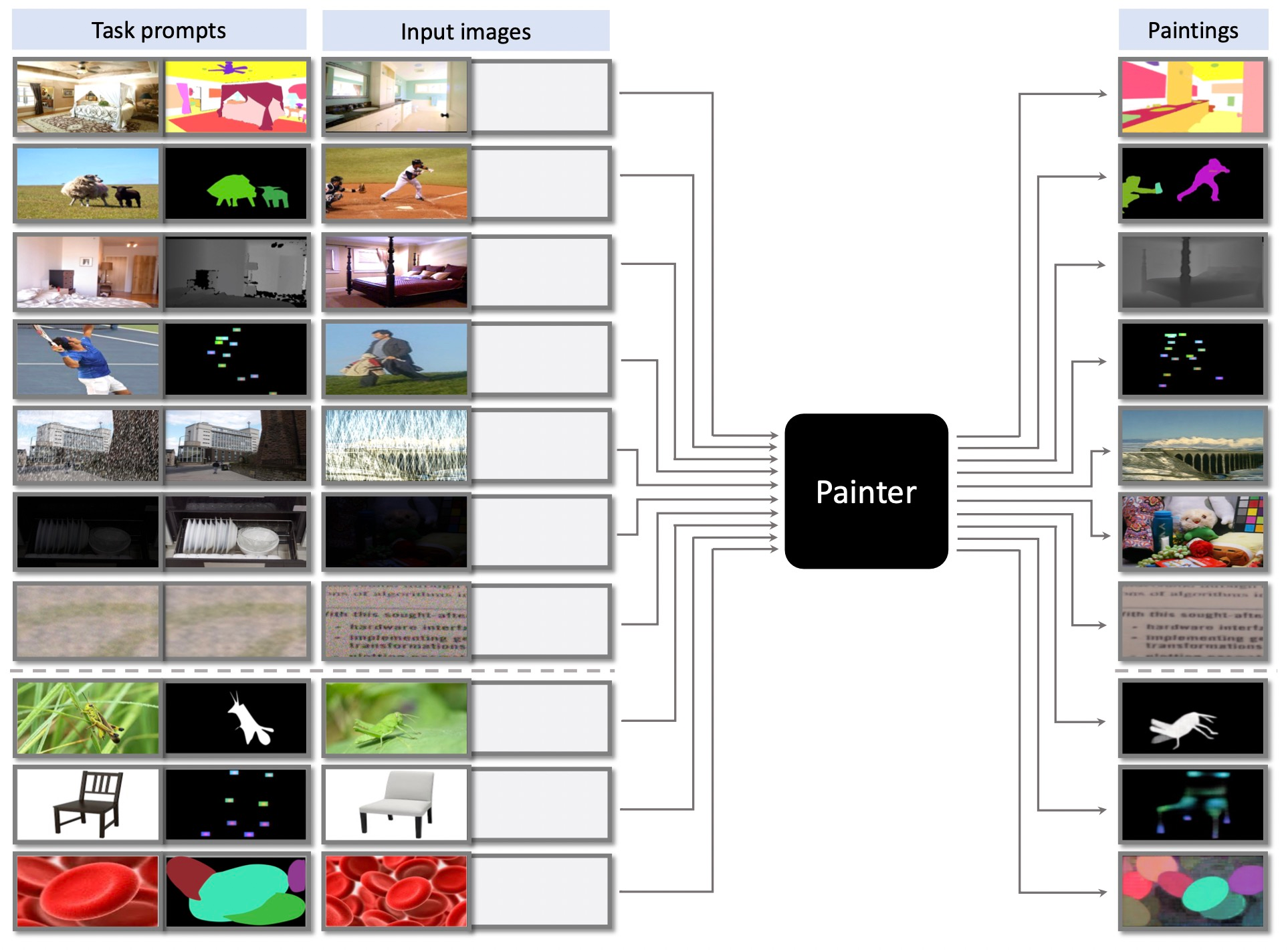

docs/teaser.jpg

0 → 100644

1.93 MB

engine_train.py

0 → 100644

eval/ade20k_semantic/eval.sh

0 → 100644

eval/coco_panoptic/eval.sh

0 → 100644