init

Showing

assets/license/QWEN_LICENSE

0 → 100644

assets/ovis-illustration.png

0 → 100644

879 KB

542 KB

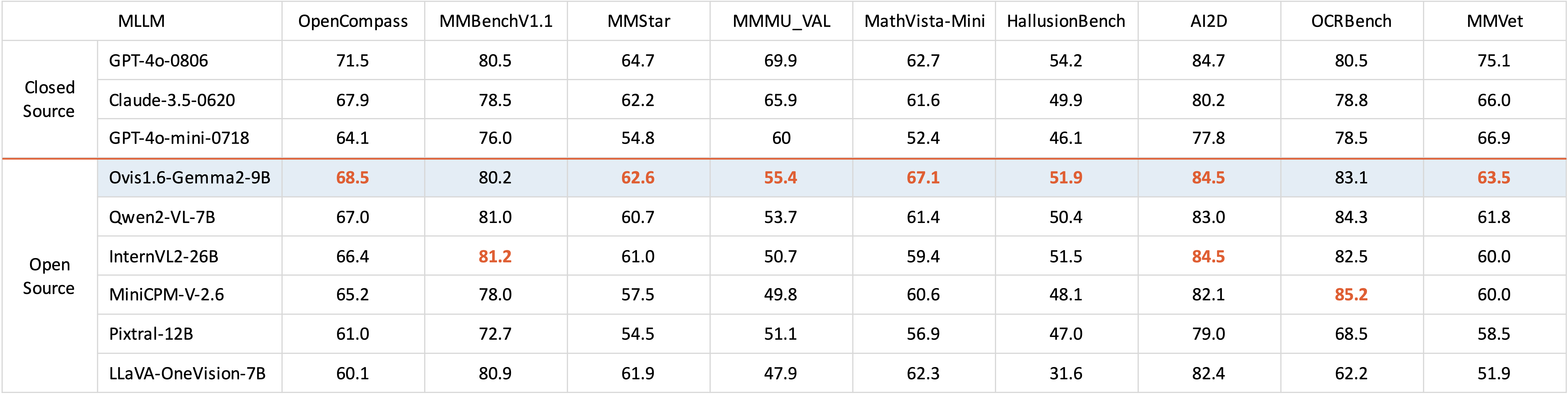

assets/result.png

0 → 100644

38.4 KB

icon.png

0 → 100644

53.8 KB

ovis/__init__.py

0 → 100644