open_clip

Showing

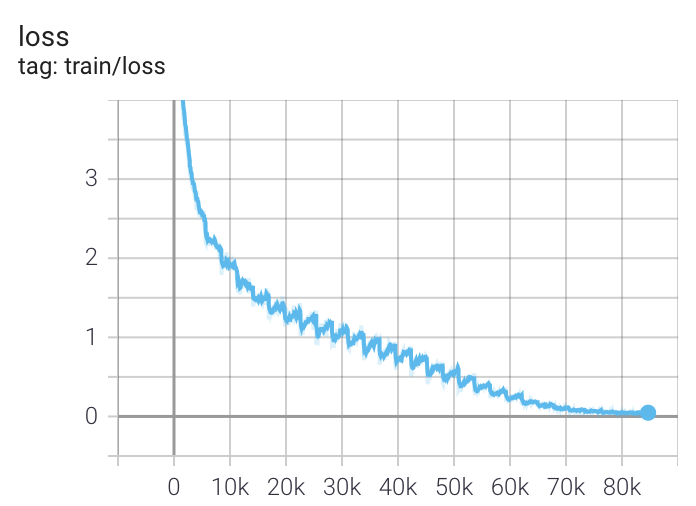

docs/clip_loss.png

0 → 100755

41.9 KB

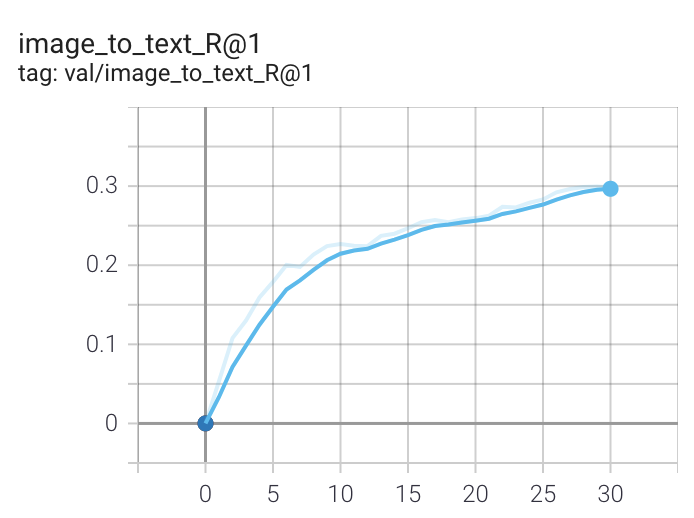

docs/clip_recall.png

0 → 100755

49.6 KB

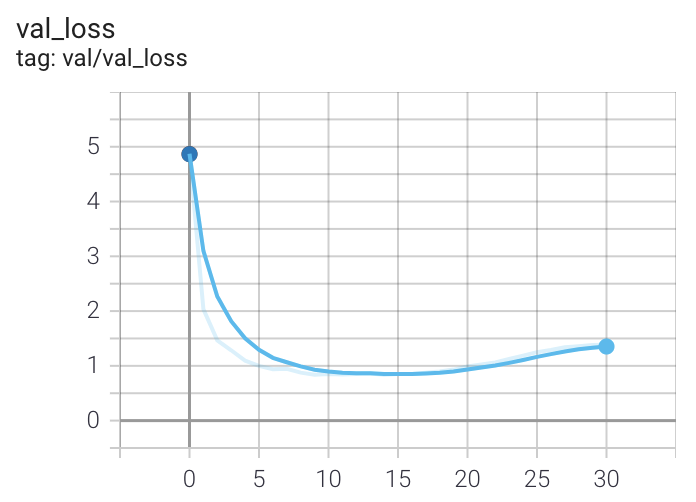

docs/clip_val_loss.png

0 → 100755

42.8 KB

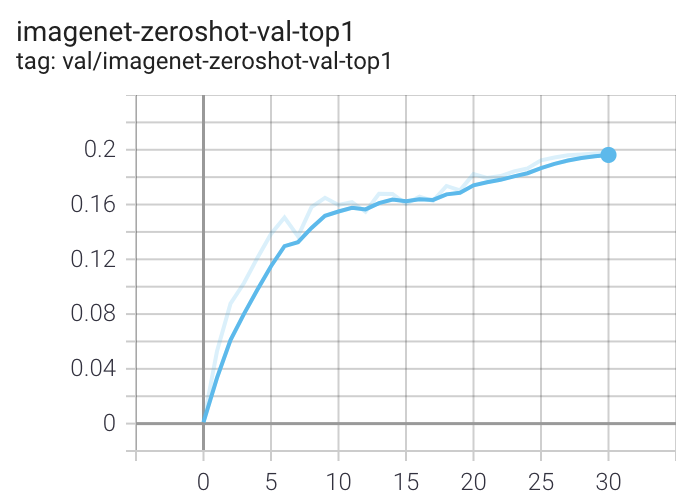

docs/clip_zeroshot.png

0 → 100755

57.1 KB

docs/clipa.md

0 → 100755

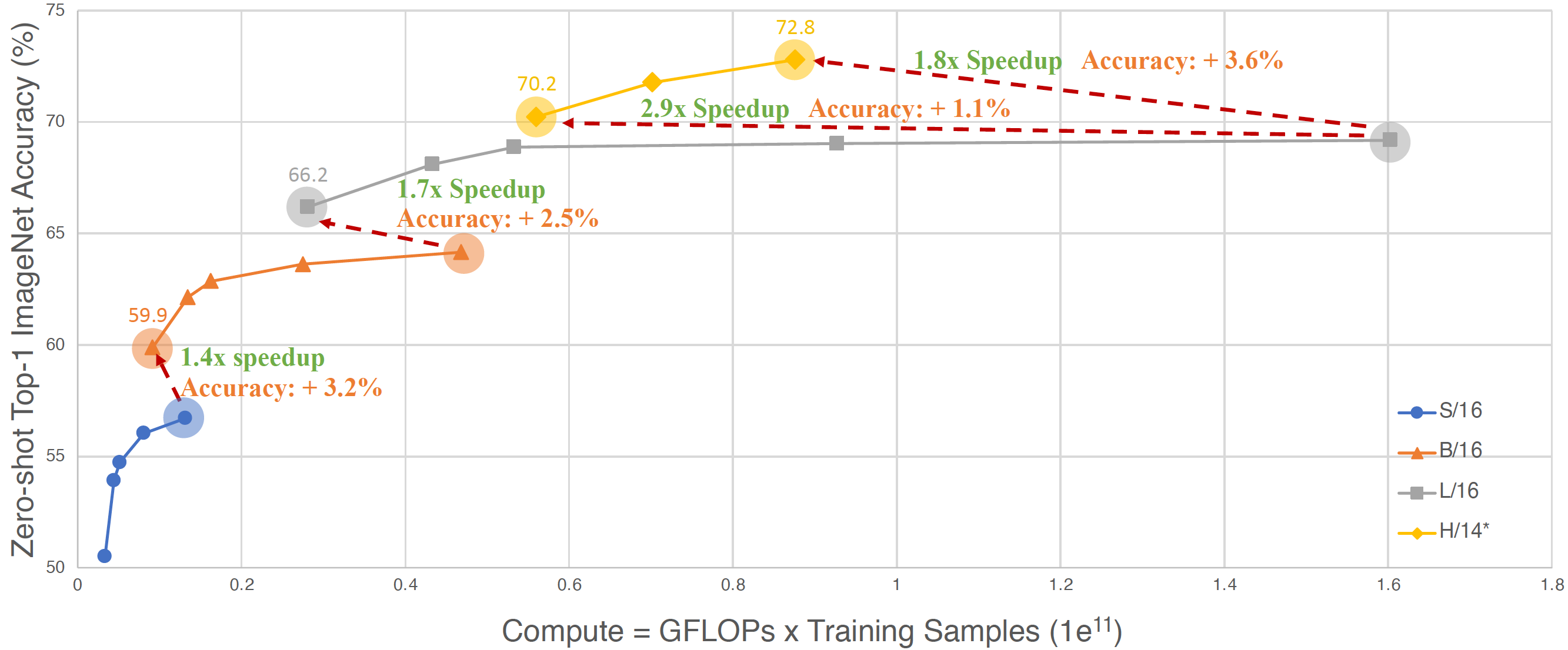

docs/clipa_acc_compute.png

0 → 100755

255 KB

1.04 MB

15.7 KB

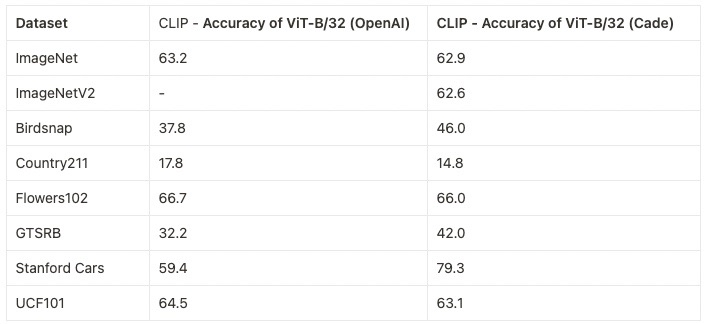

docs/datacomp_models.md

0 → 100755

995 KB

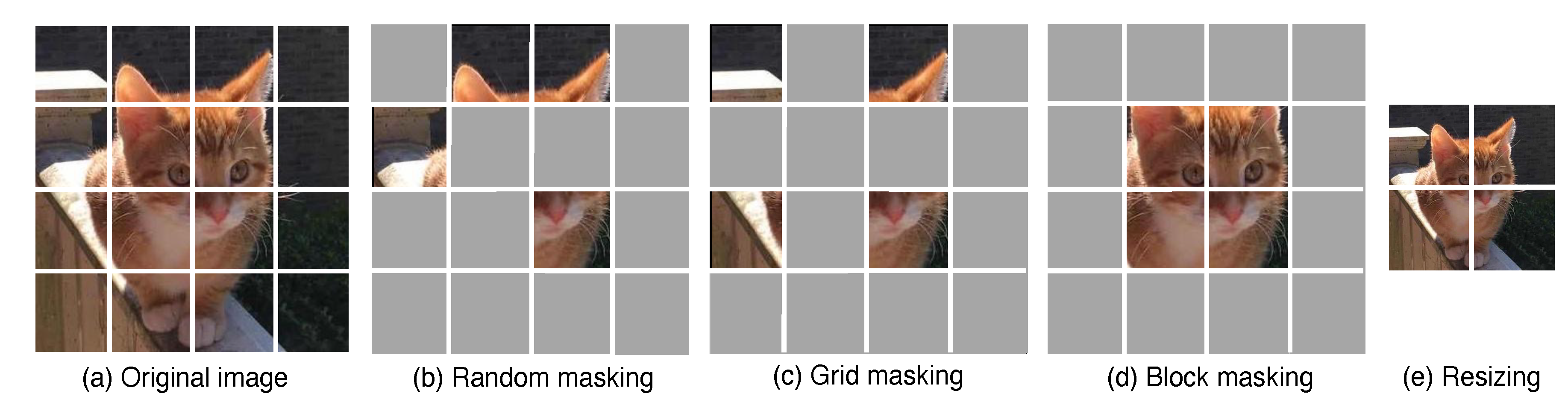

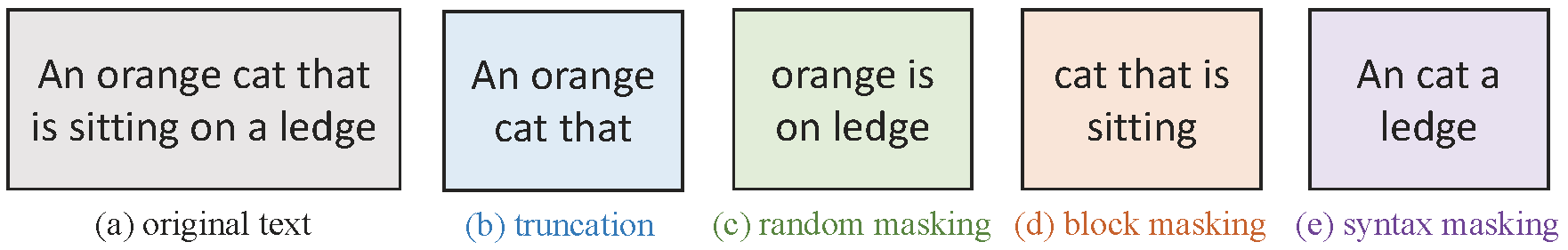

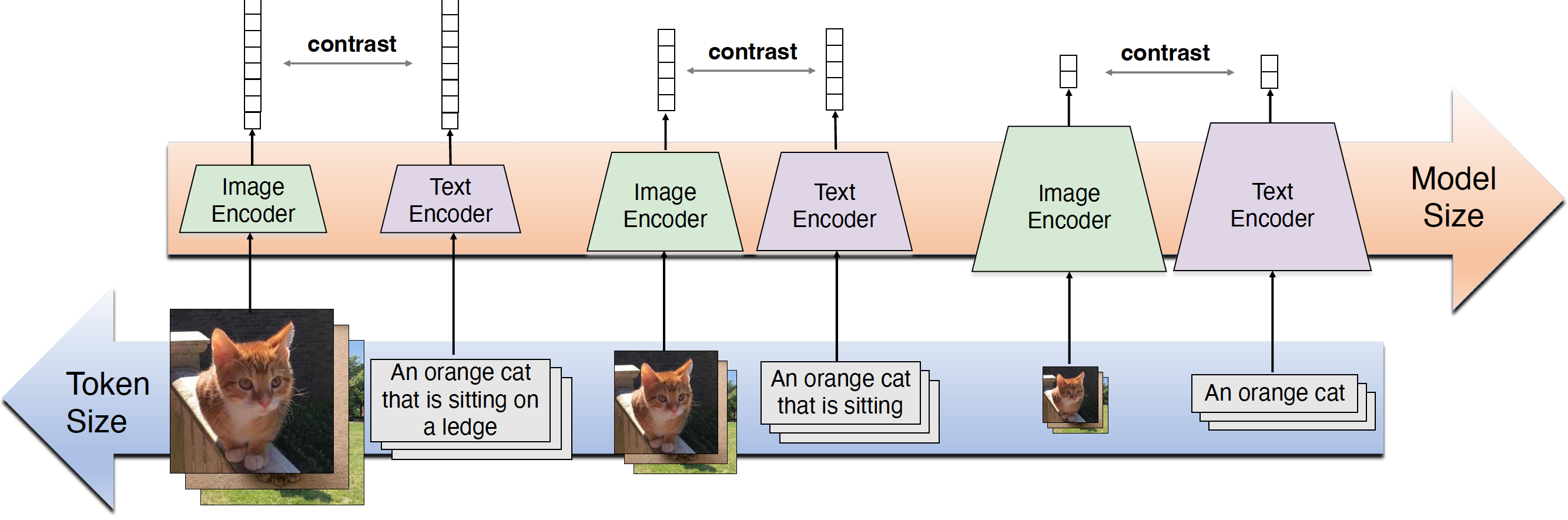

docs/inverse_scaling_law.png

0 → 100755

583 KB

240 KB

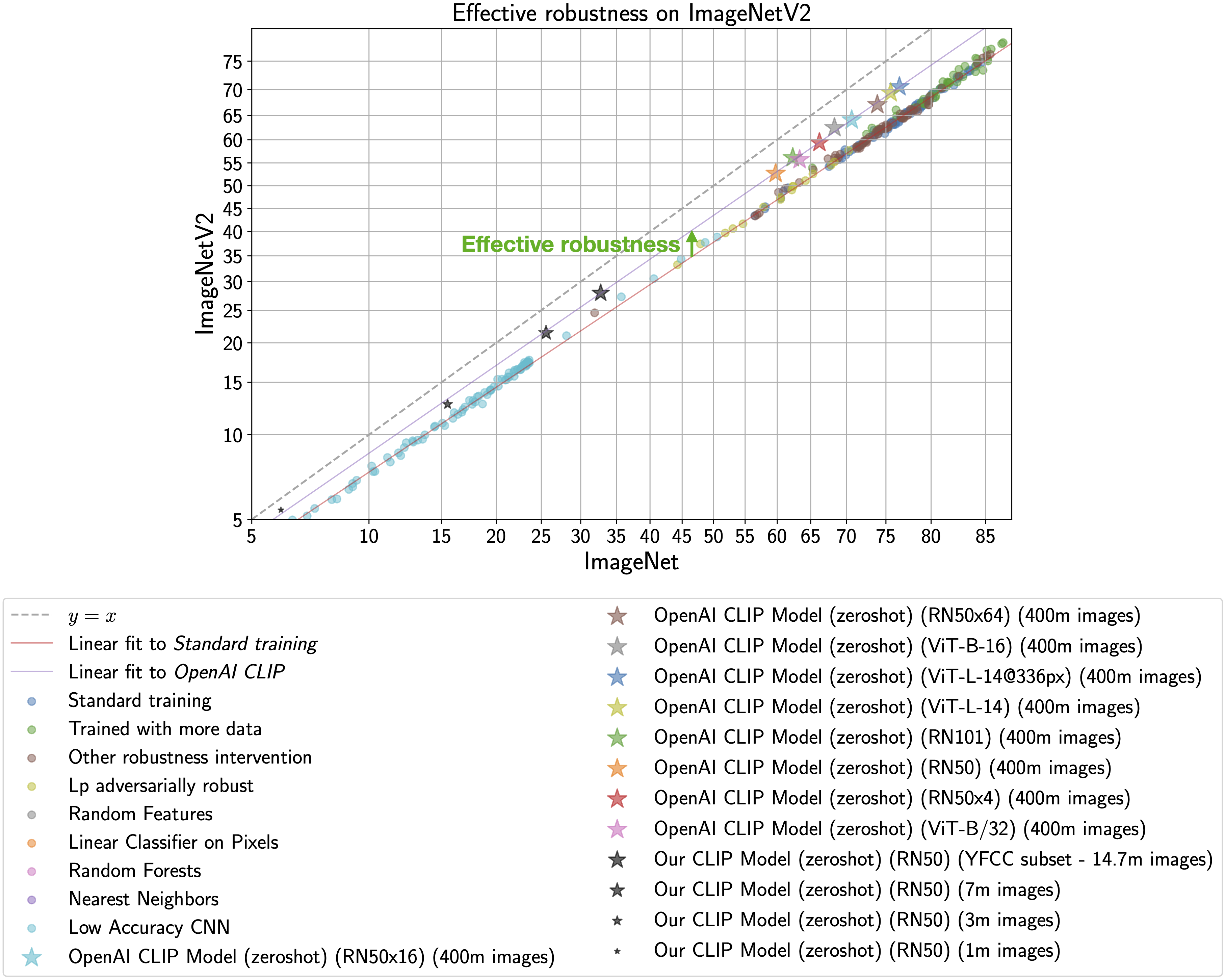

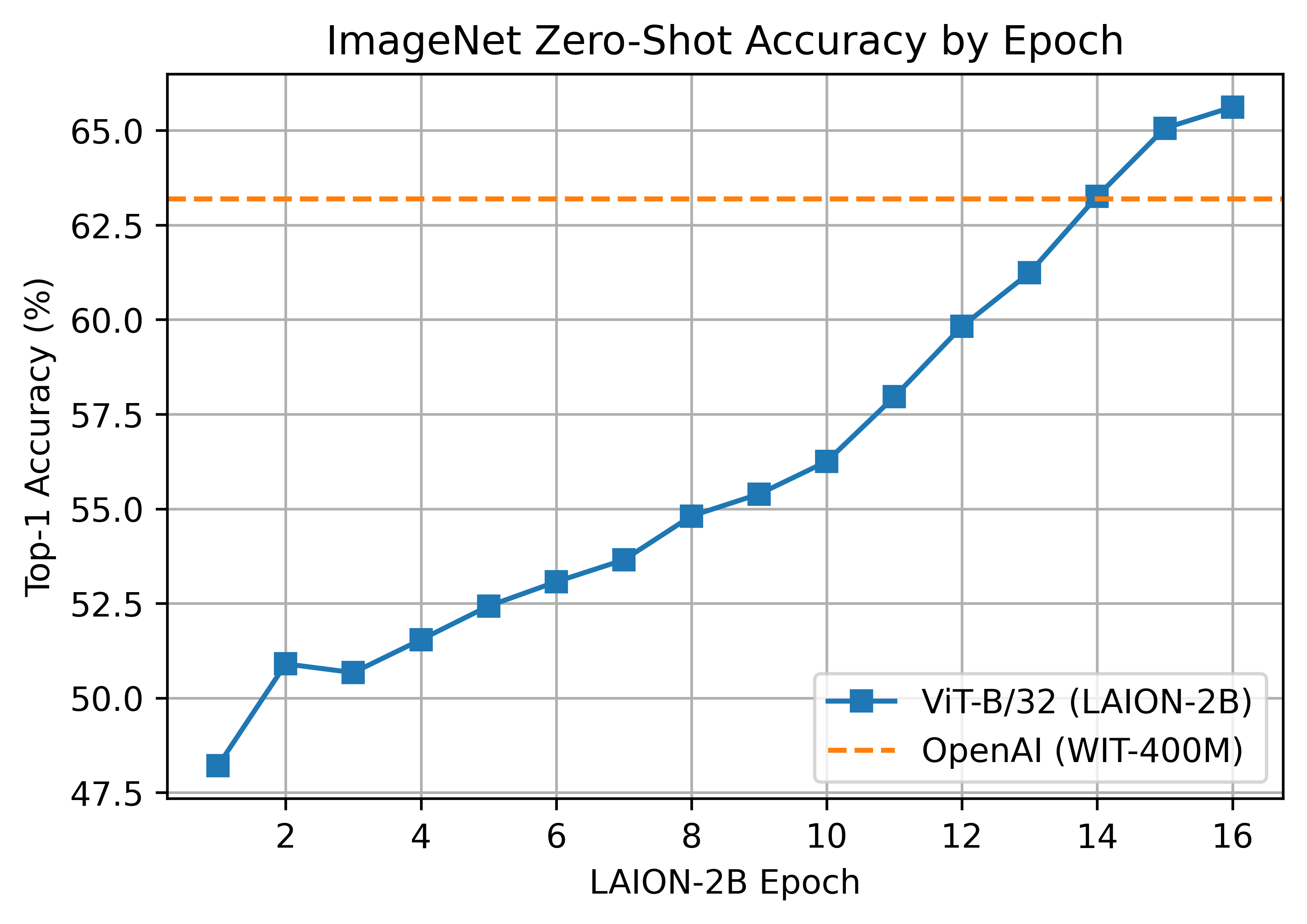

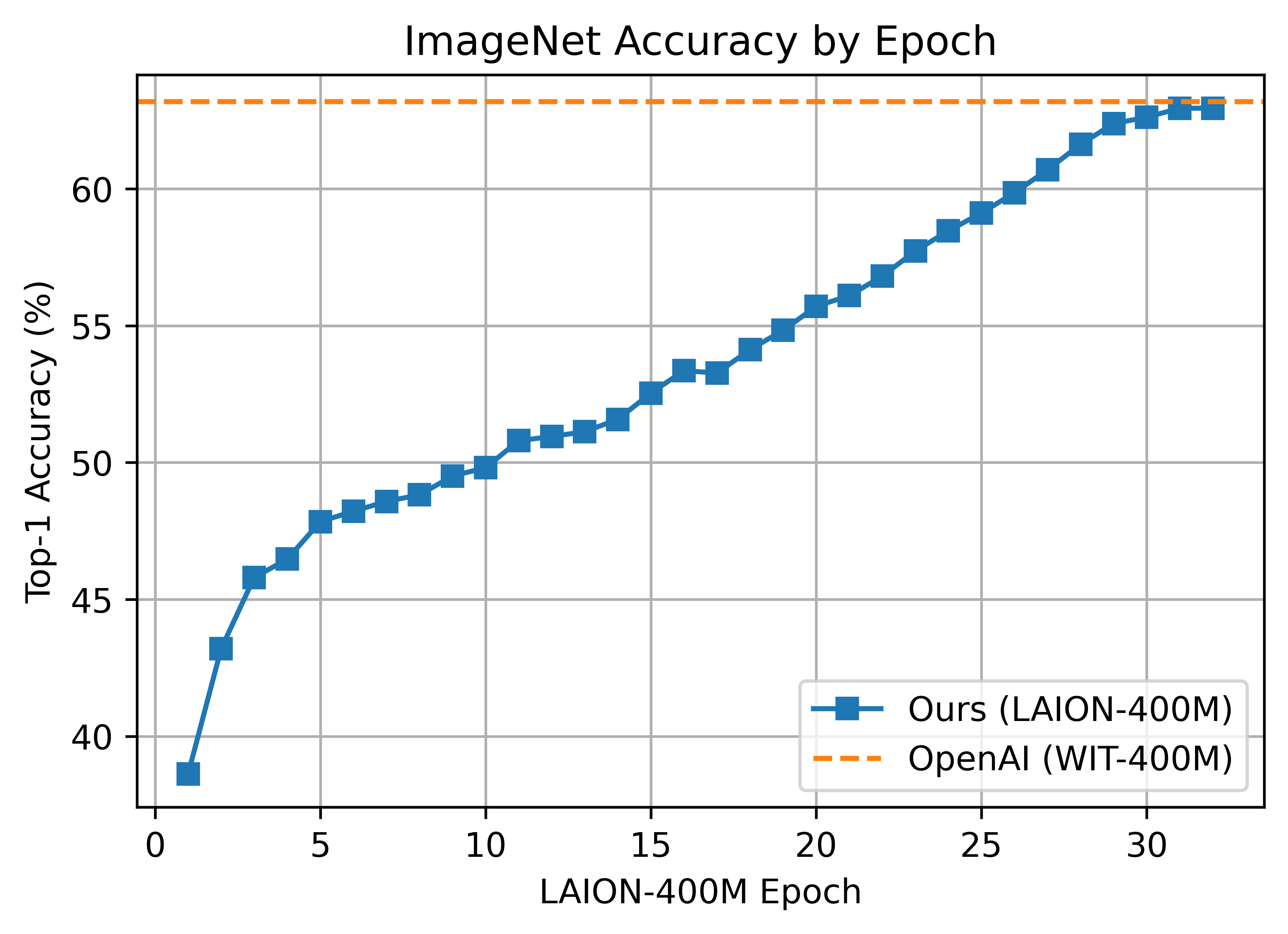

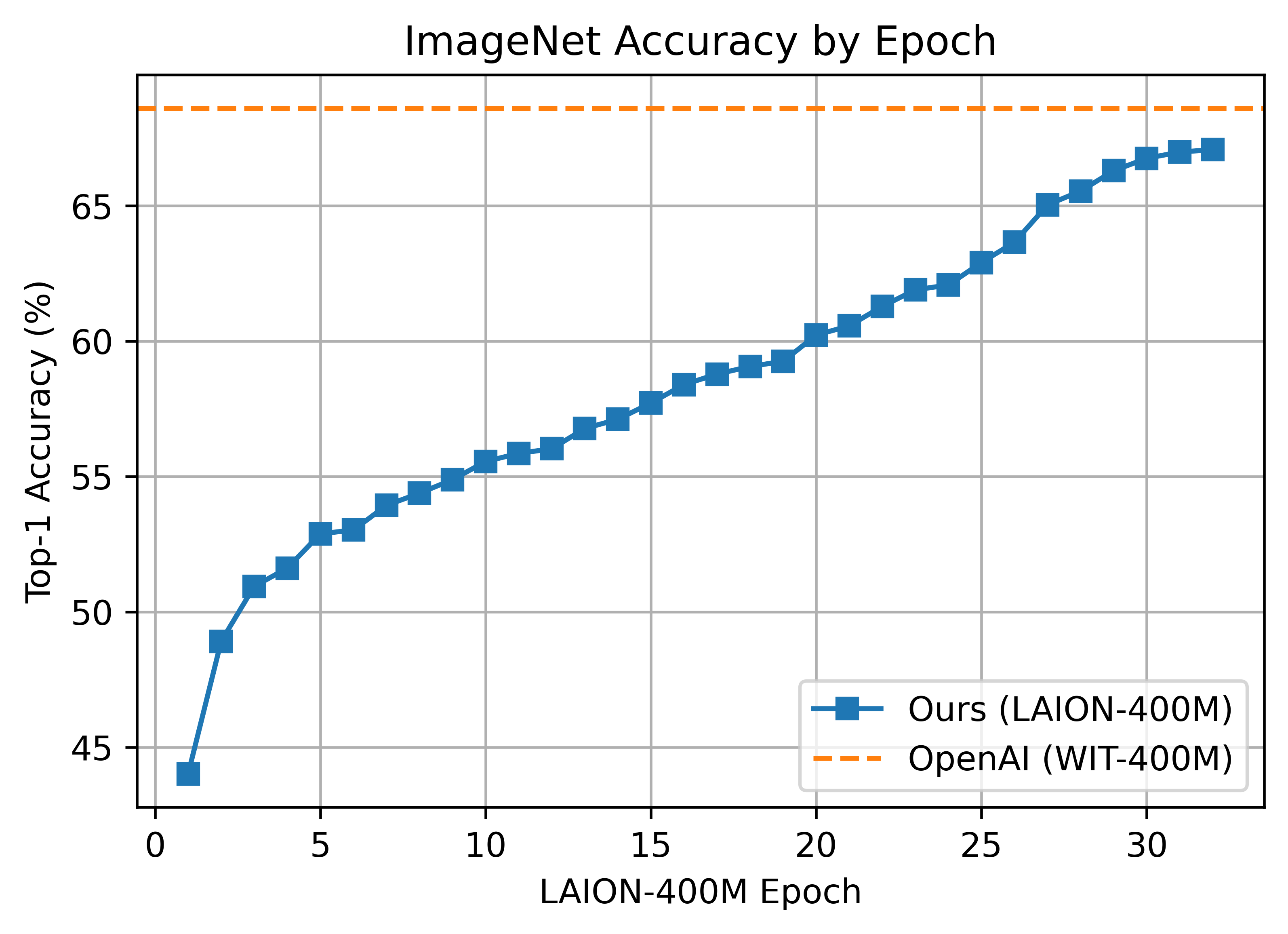

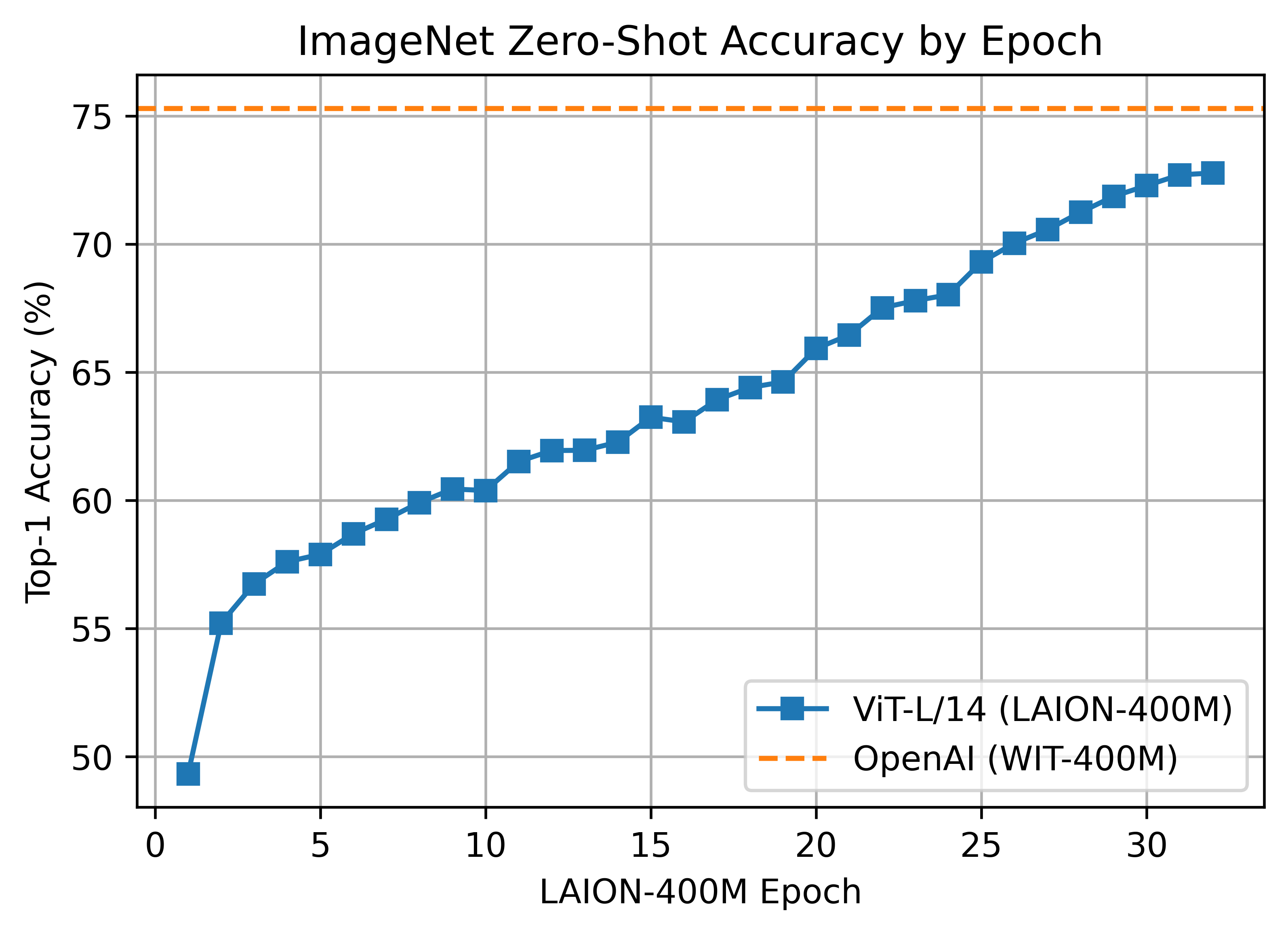

docs/laion_clip_zeroshot.png

0 → 100755

191 KB

191 KB

249 KB

199 KB

58.1 KB

docs/model_profile.csv

0 → 100755