omnisql

Showing

examples/example_4.txt

0 → 100644

inferences/tf_inference.py

0 → 100644

inferences/vllm_inference.py

0 → 100644

inferences/vllm_inference.sh

0 → 100644

model.properties

0 → 100644

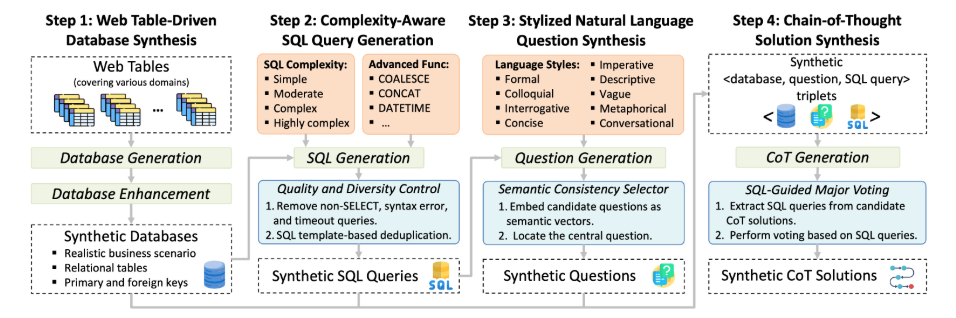

readme_imgs/alg.png

0 → 100644

14.2 KB

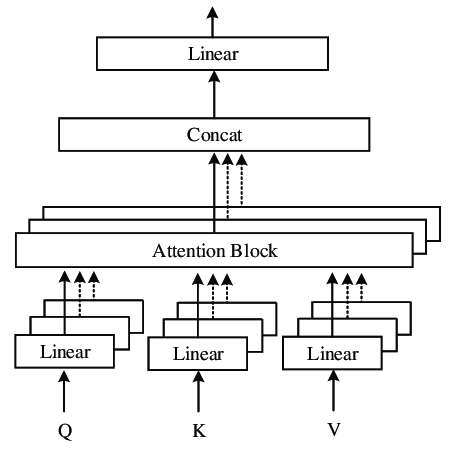

readme_imgs/arch.png

0 → 100644

216 KB

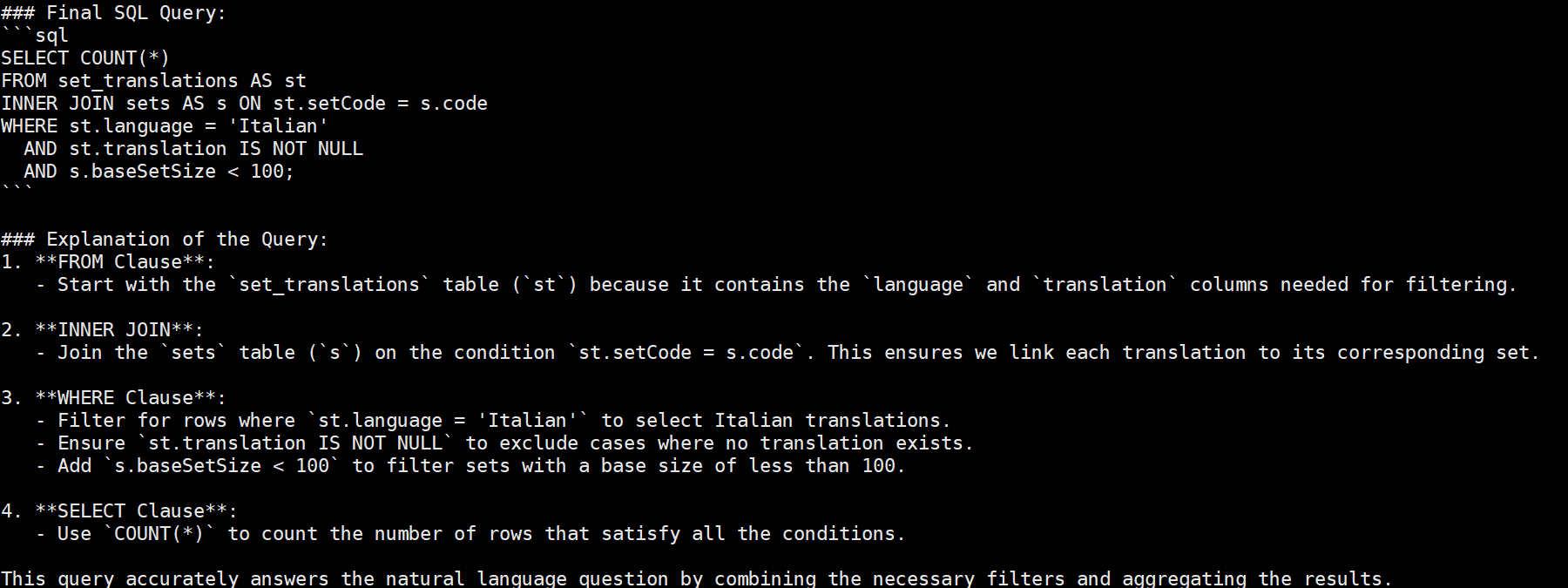

readme_imgs/result.png

0 → 100644

60.8 KB

requirements.txt

0 → 100644

| func_timeout | |||

| tqdm | |||

| matplotlib | |||

| nltk==3.8.1 | |||

| sqlparse | |||

| tensorboard | |||

| peft | |||

| transformers | |||

| accelerate | |||

| click | |||

| datasets | |||

| \ No newline at end of file |

train_and_evaluate/README.md

0 → 100644

This source diff could not be displayed because it is too large. You can view the blob instead.