v1.0

Too many changes to show.

To preserve performance only 428 of 428+ files are displayed.

examples/OFA_logo_tp.svg

0 → 100644

examples/case1.png

0 → 100644

This image diff could not be displayed because it is too large.

examples/demo.gif

0 → 100644

This image diff could not be displayed because it is too large.

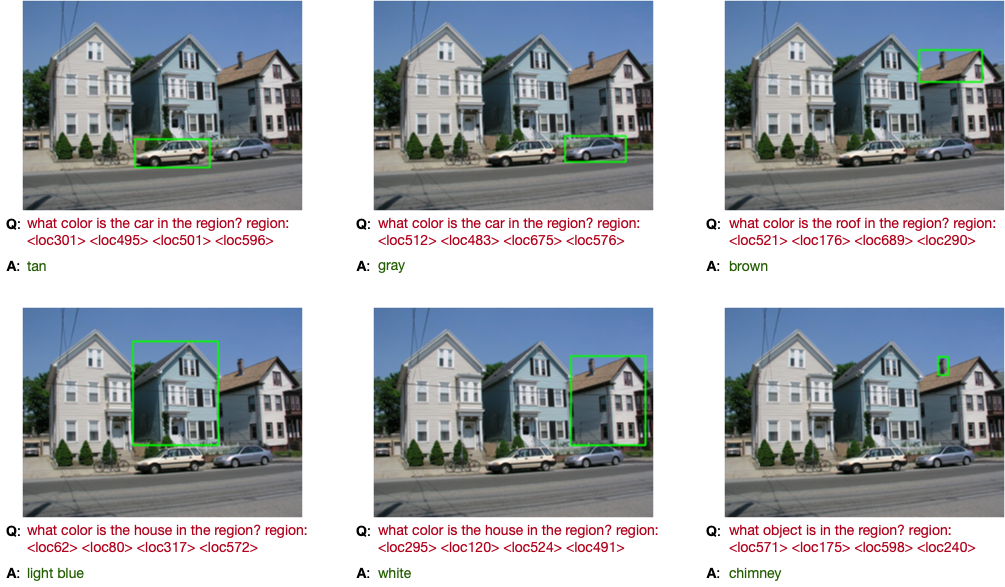

examples/grounded_qa.png

0 → 100644

311 KB

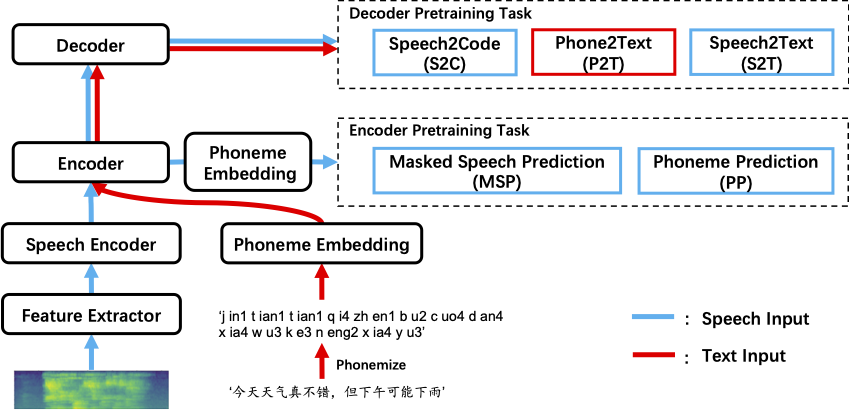

examples/mmspeech.png

0 → 100644

77.8 KB

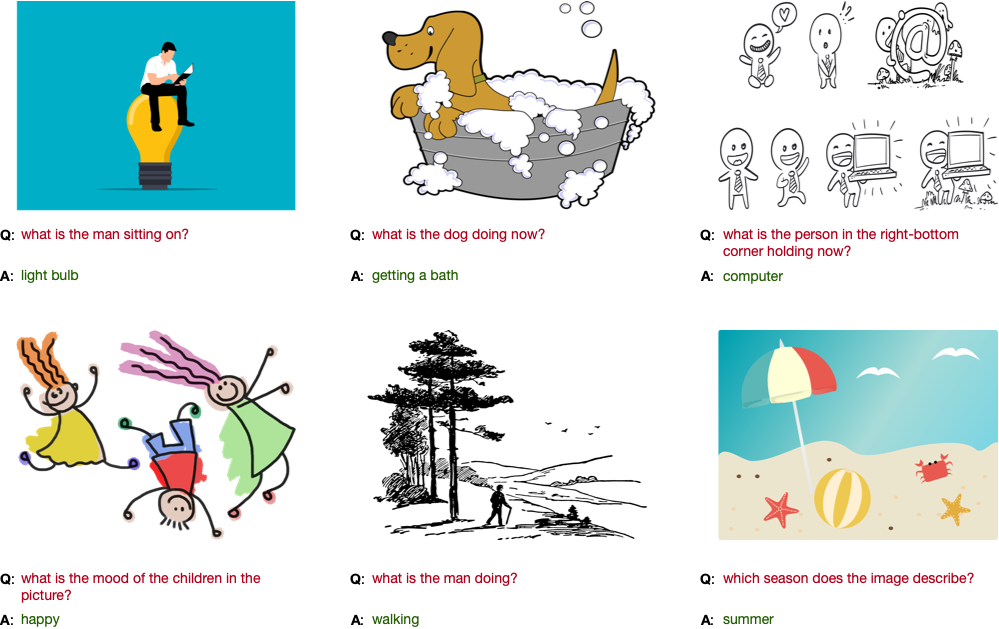

examples/open_vqa.png

0 → 100644

258 KB

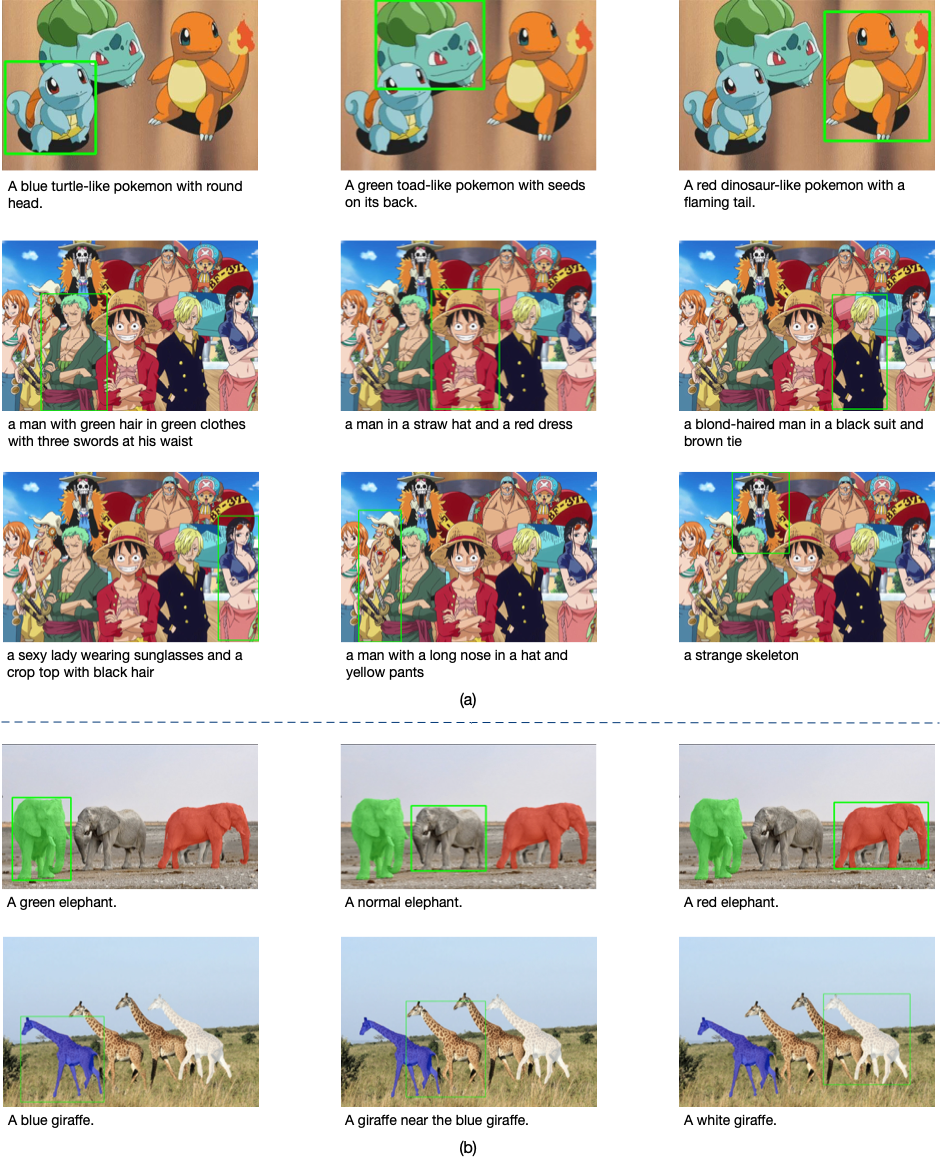

876 KB

fairseq/.github/stale.yml

0 → 100644

fairseq/.gitignore

0 → 100644

fairseq/.gitmodules

0 → 100644

fairseq/CODE_OF_CONDUCT.md

0 → 100644