"git@developer.sourcefind.cn:xdb4_94051/vllm.git" did not exist on "c267b1a02c952b68a897c96201f32ad57e0b955e"

v1.0

Showing

Too many changes to show.

To preserve performance only 428 of 428+ files are displayed.

data/nlu_data/qqp_dataset.py

0 → 100644

data/nlu_data/rte_dataset.py

0 → 100644

data/ofa_dataset.py

0 → 100644

File added

File added

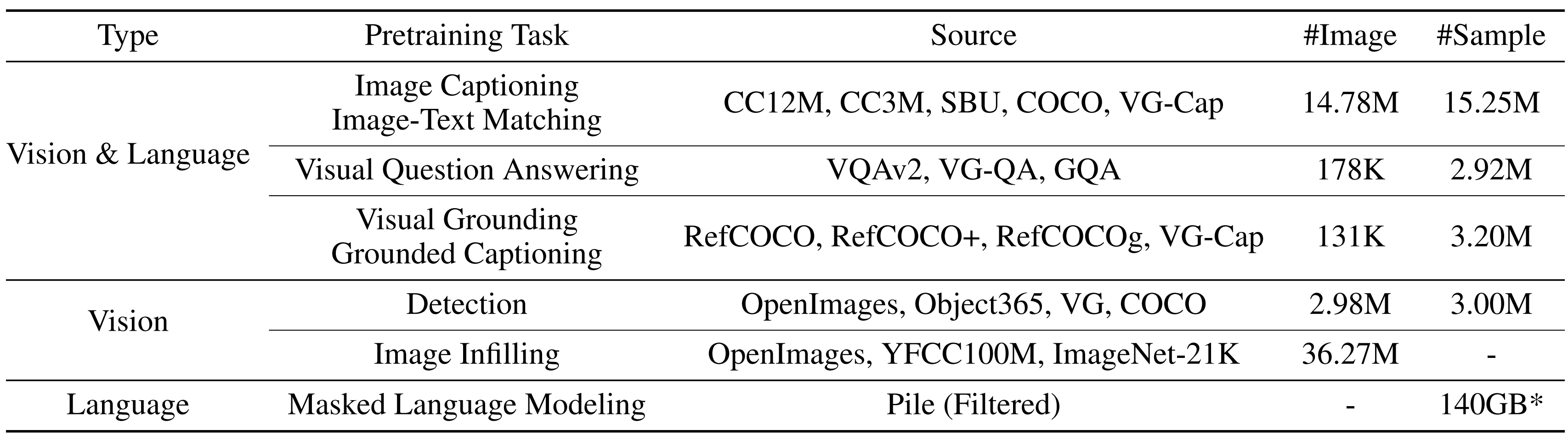

datasets.md

0 → 100644

doc/datasets.png

0 → 100644

325 KB

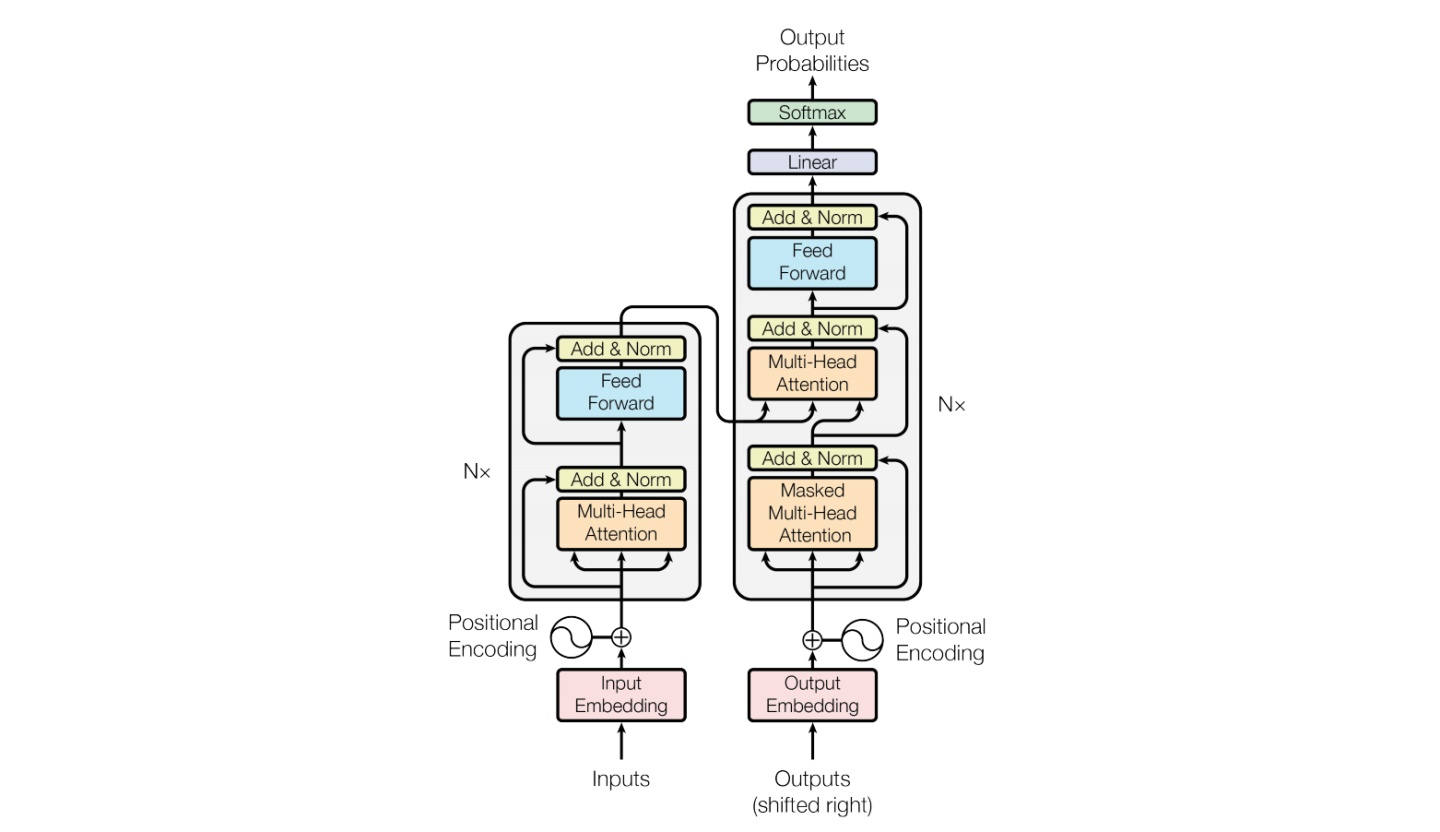

doc/structure.png

0 → 100644

233 KB

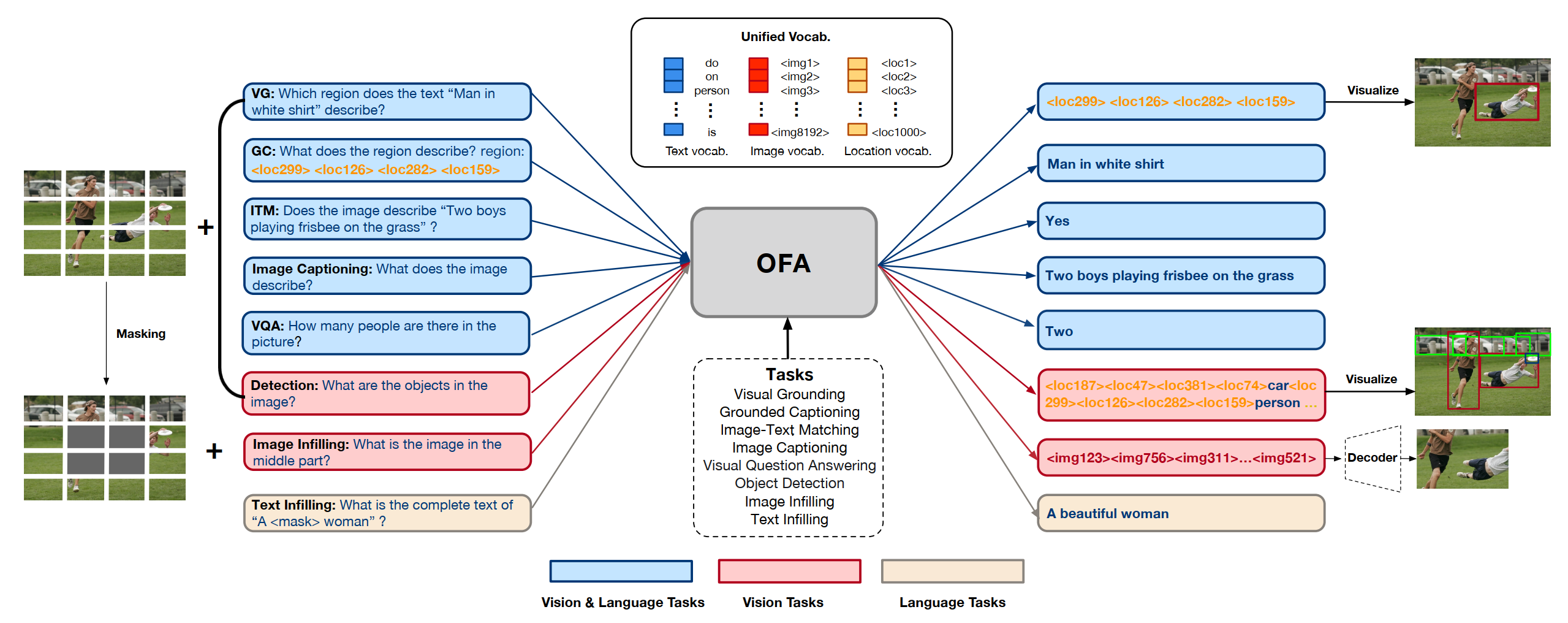

doc/test.png

0 → 100644

678 KB

doc/theory.png

0 → 100644

746 KB

docker/Dockerfile

0 → 100644

docker/requirements.txt

0 → 100644

docker_start.sh

0 → 100644

evaluate.py

0 → 100644