v1.0.8

examples/mamba/mamba.py

0 → 100644

examples/mamba/trainer.py

0 → 100644

examples/moe/README.md

0 → 100644

examples/moe/llamoe.py

0 → 100644

examples/moe/moe.py

0 → 100644

examples/moe/train_moe.py

0 → 100644

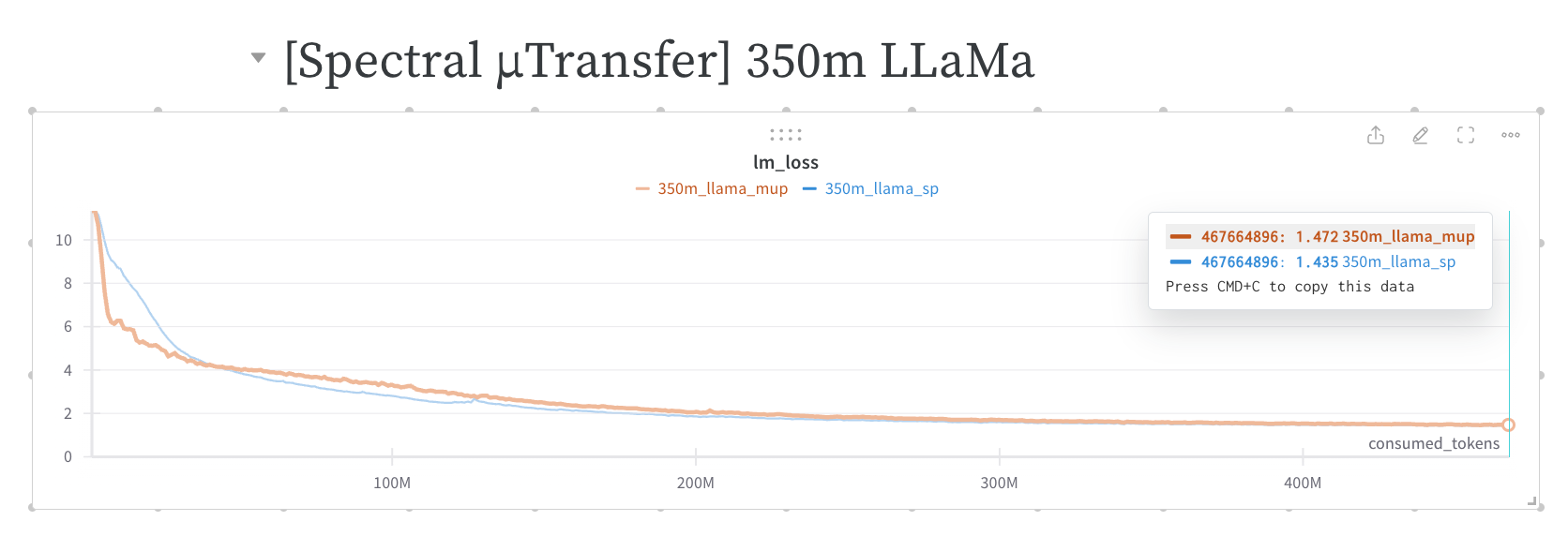

examples/mup/README.md

0 → 100644

105 KB