v1.0

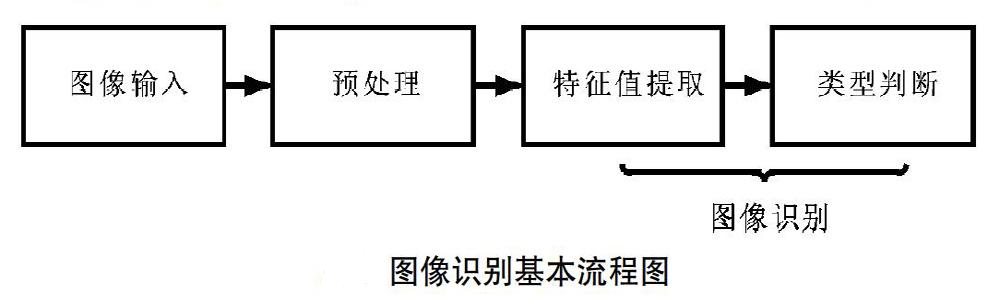

doc/algorithm.png

0 → 100644

76.7 KB

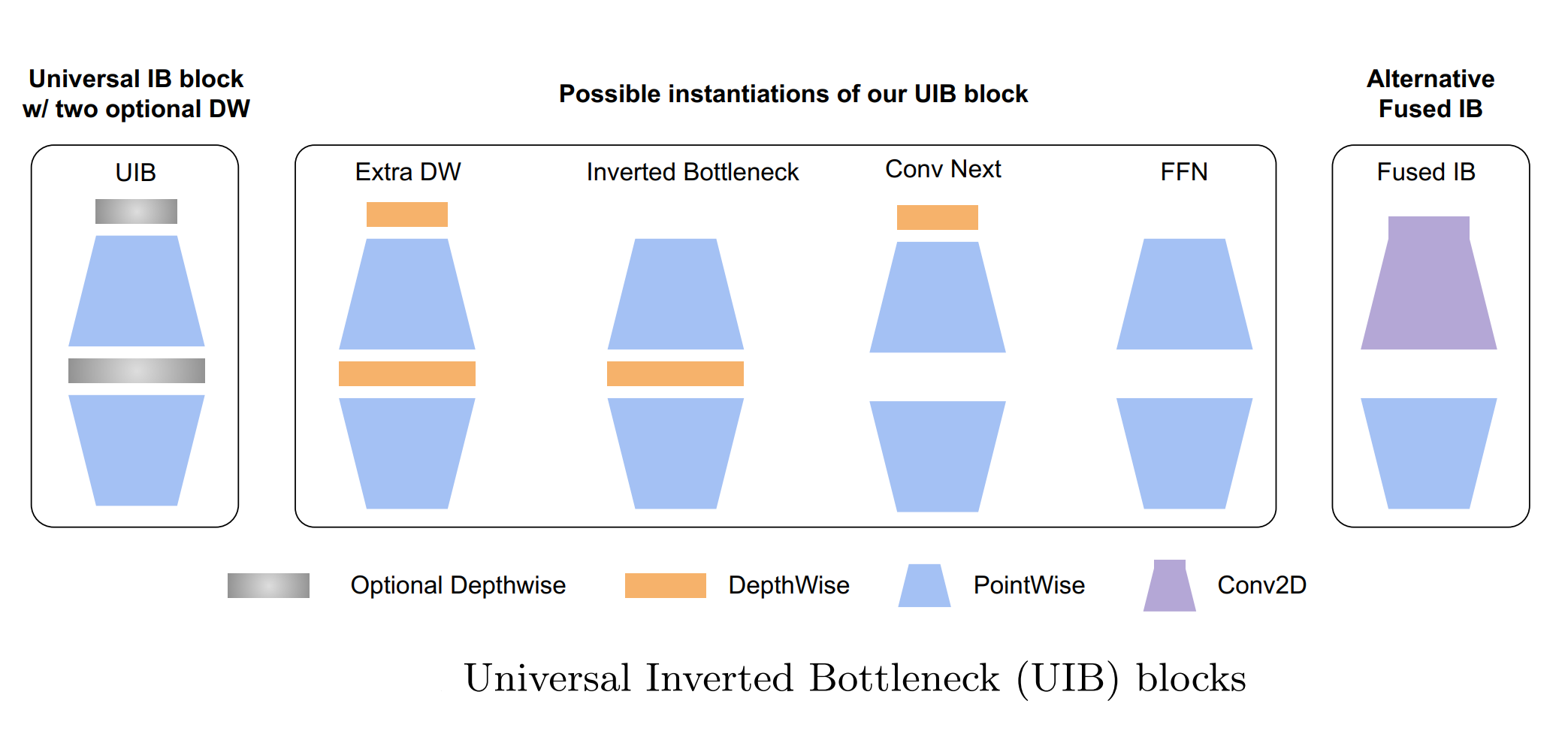

doc/structure.png

0 → 100644

137 KB

docker/Dockerfile

0 → 100644

docker/requirements.txt

0 → 100644

model.properties

0 → 100644

model_config.py

0 → 100644

predict.py

0 → 100644

readme_origin.md

0 → 100644

requirements.txt

0 → 100644

train.py

0 → 100644

train.sh

0 → 100644