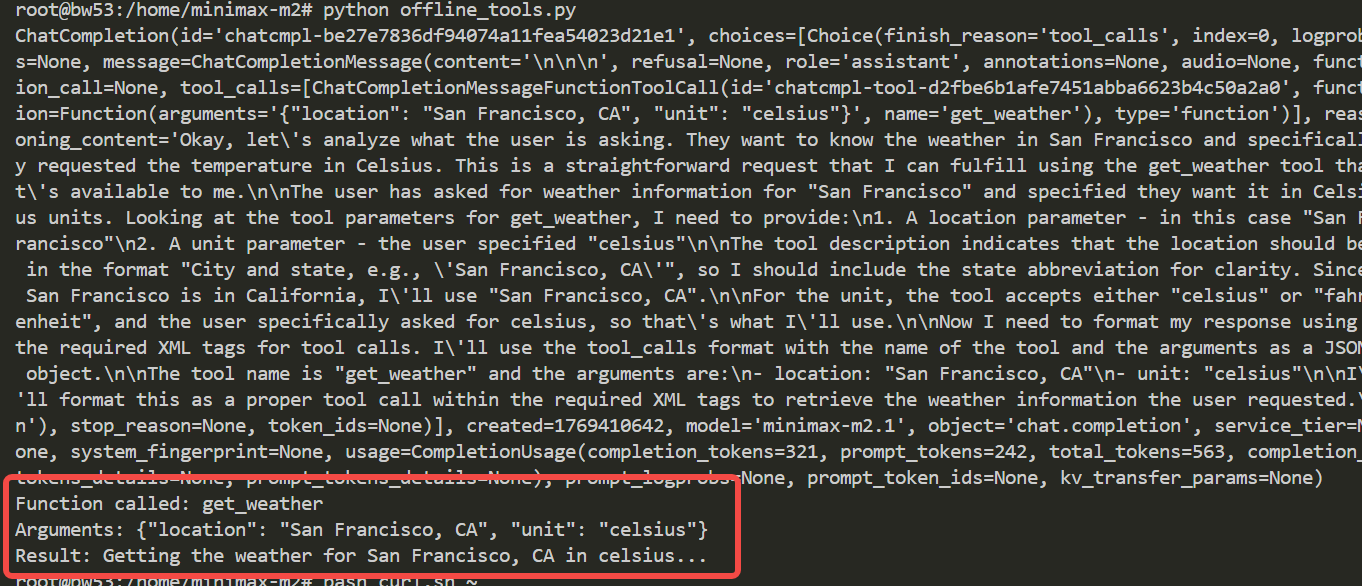

Update minimax-m2.1 tool call

Showing

This diff is collapsed.

config.json

deleted

100644 → 0

| W: | H:

| W: | H:

129 KB

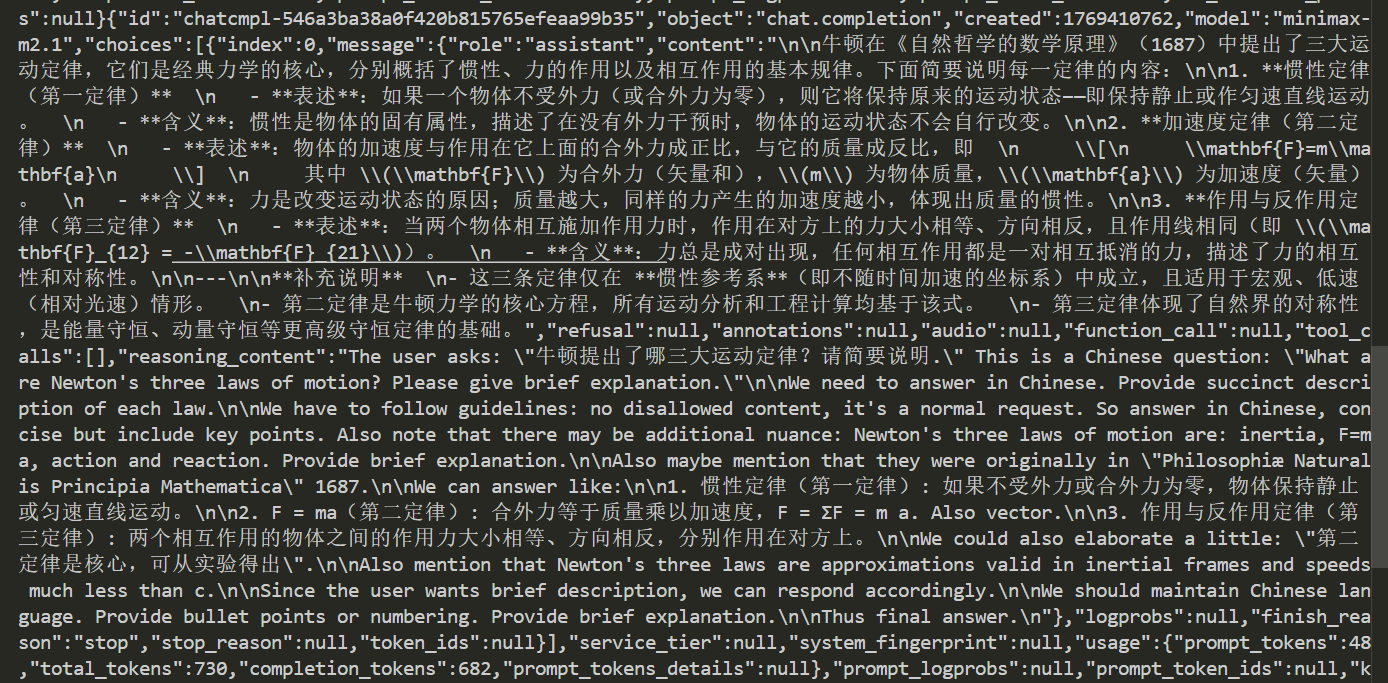

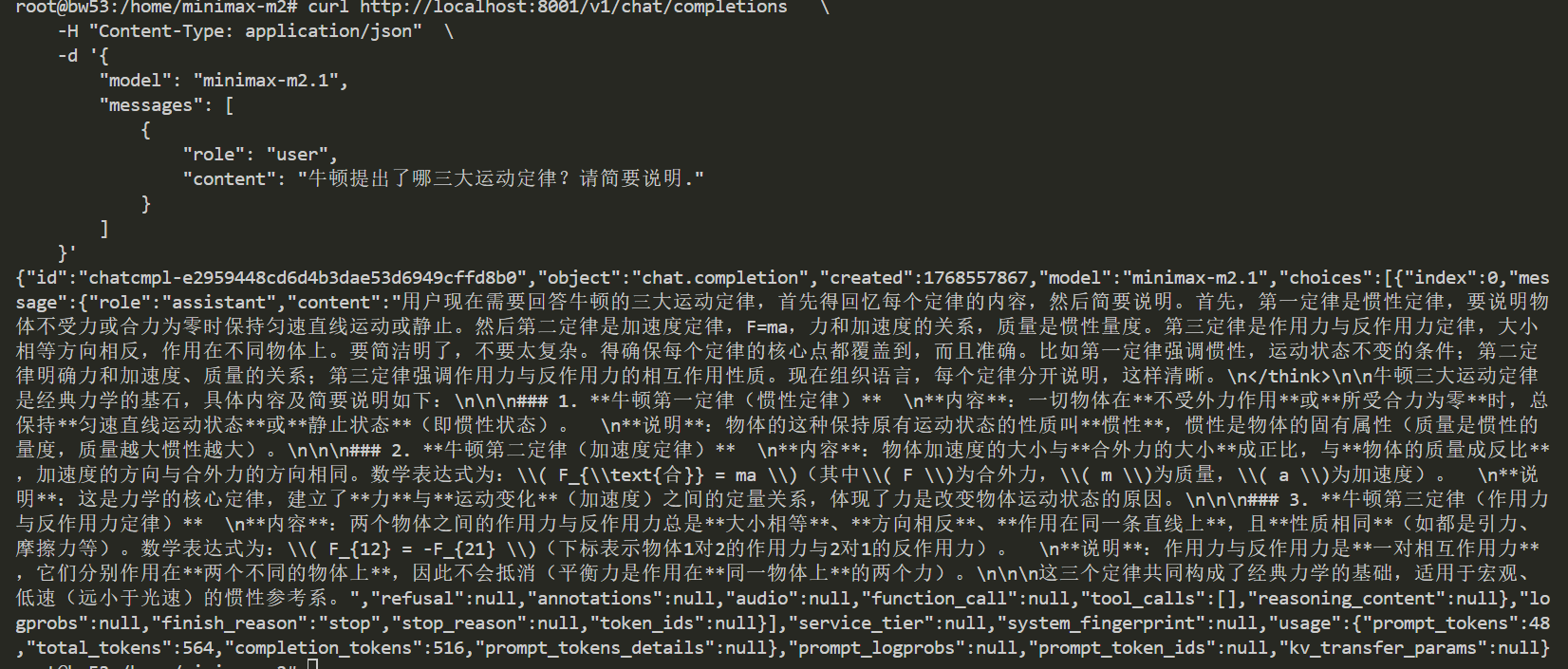

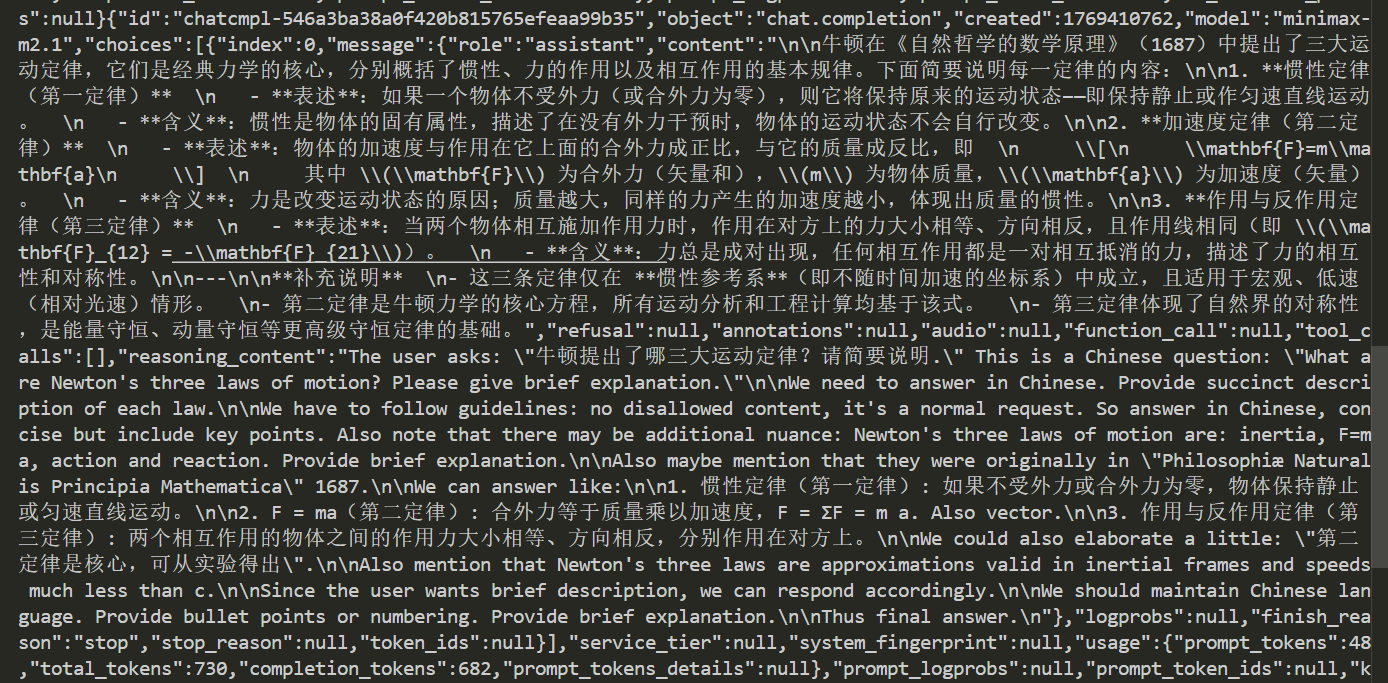

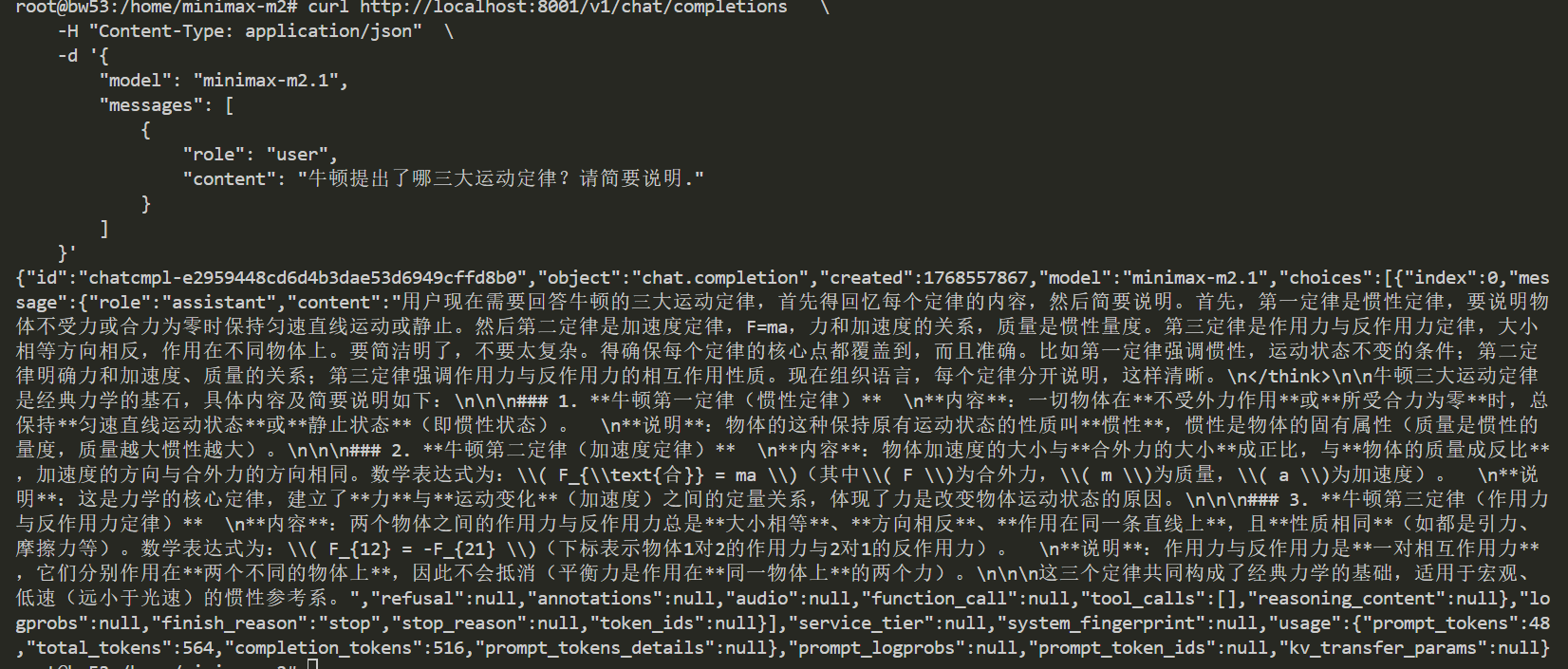

offline_tools.py

0 → 100644

232 KB | W: | H:

257 KB | W: | H:

129 KB