MiniGemini_pytorch

parents

Showing

Too many changes to show.

To preserve performance only 193 of 193+ files are displayed.

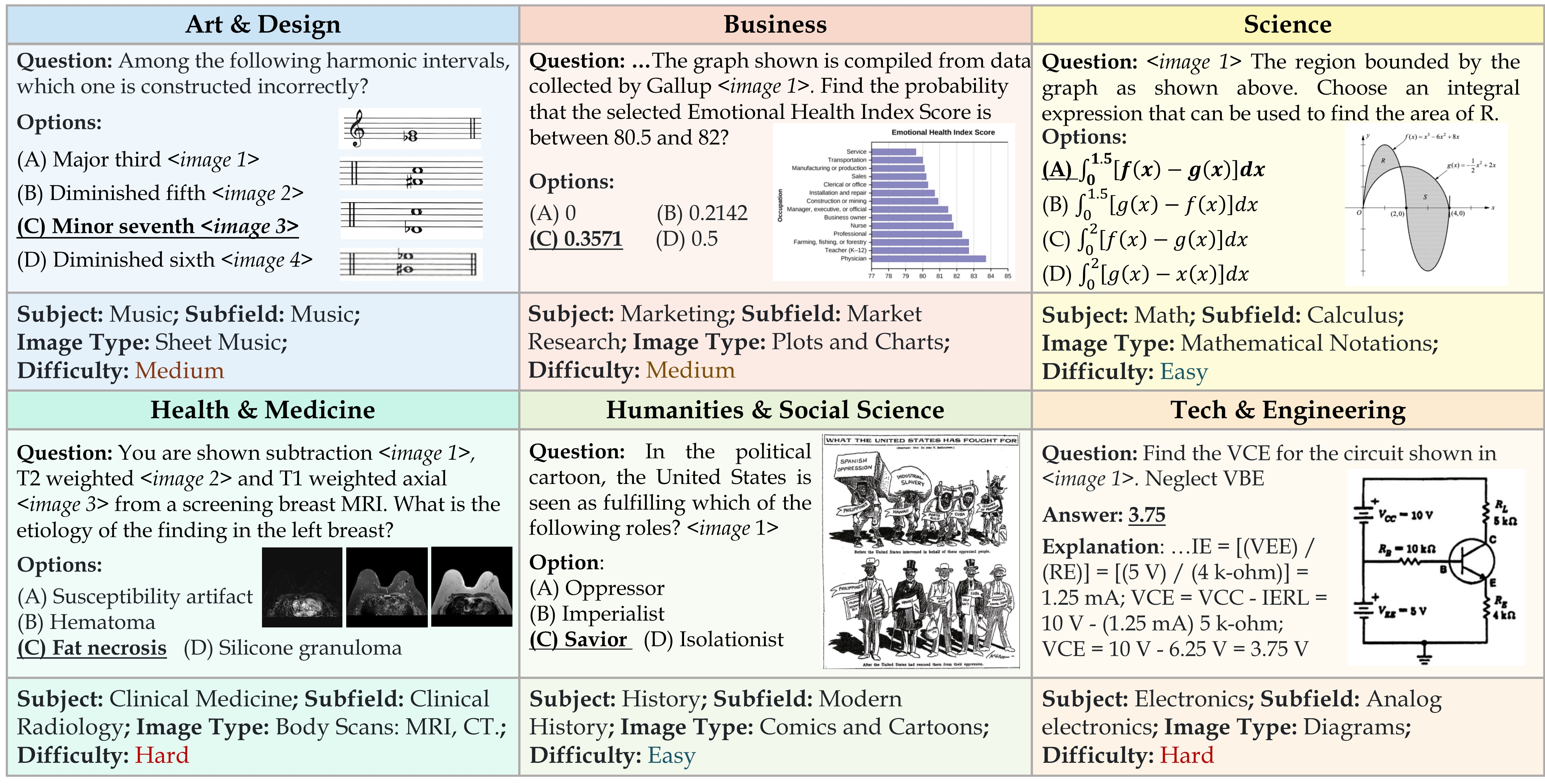

mgm/eval/MMMU/image.png

0 → 100644

3.2 MB

File added

File added

File added

File added

mgm/eval/eval_gpt_review.py

0 → 100644

mgm/eval/eval_pope.py

0 → 100644

mgm/eval/eval_science_qa.py

0 → 100644

mgm/eval/eval_textvqa.py

0 → 100644