init

assets/teaser.gif

0 → 100644

719 KB

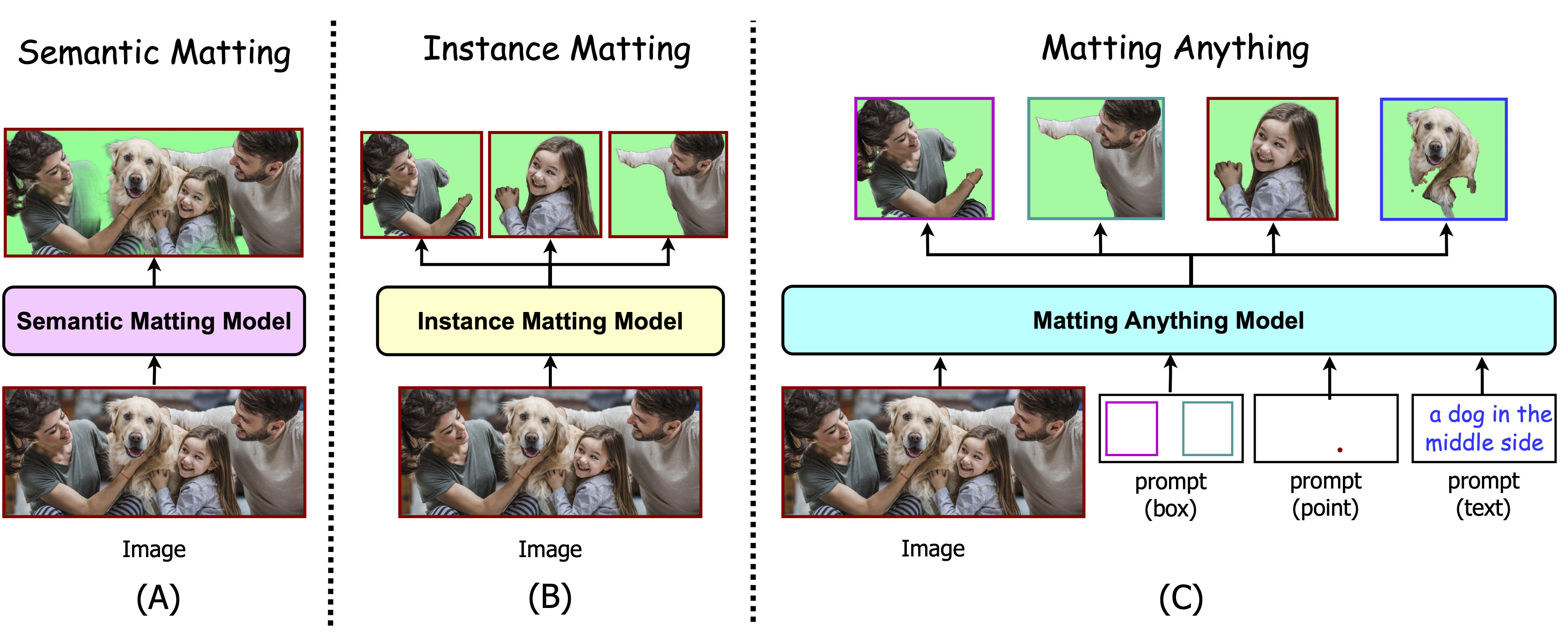

assets/teaser_arxiv_v2.png

0 → 100644

3.55 MB

config/MAM-ViTB-8gpu.toml

0 → 100644

config/MAM-ViTH-8gpu.toml

0 → 100644

config/MAM-ViTL-8gpu.toml

0 → 100644

dataloader/__init__.py

0 → 100644

dataloader/data_generator.py

0 → 100644

dataloader/image_file.py

0 → 100644

dataloader/prefetcher.py

0 → 100644

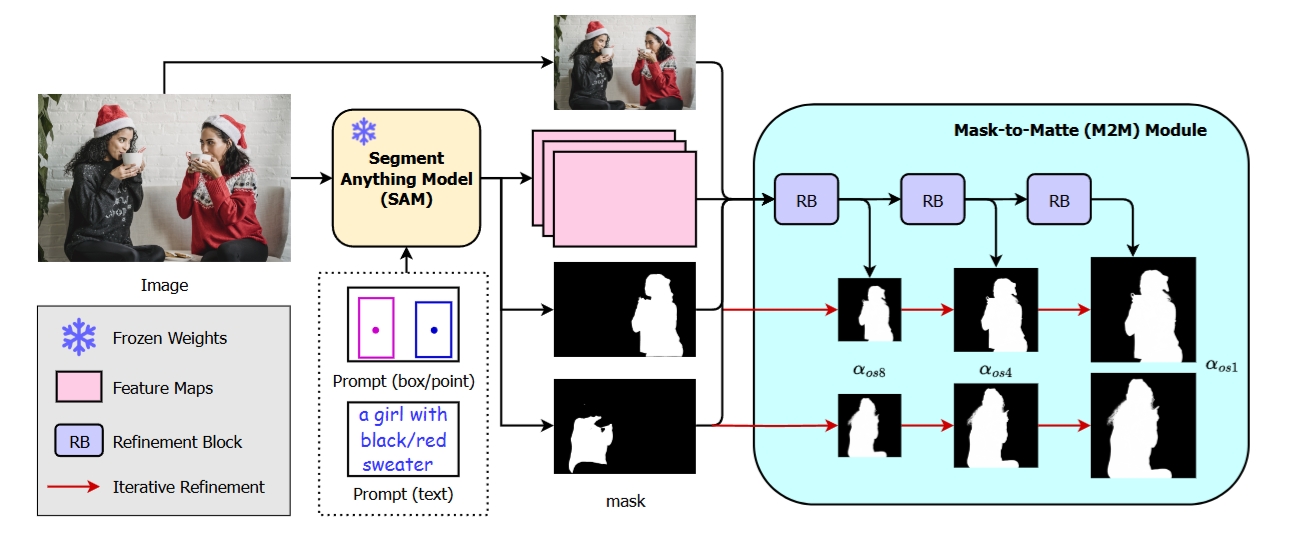

doc/Architecture.png

0 → 100644

206 KB

doc/demo.jpg

0 → 100644

174 KB

doc/matte.png

0 → 100644

542 KB

doc/result.png

0 → 100644

1.03 MB

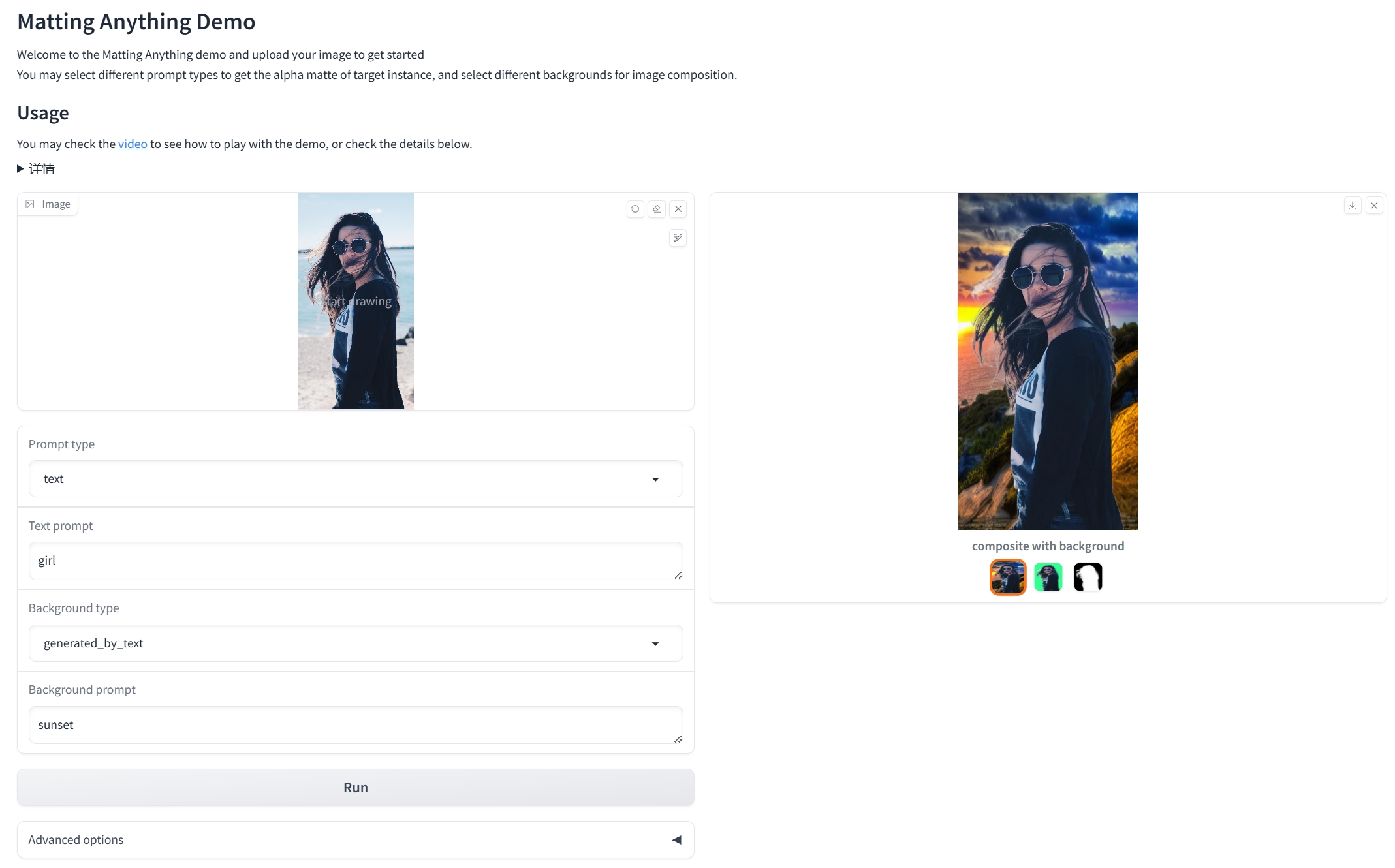

doc/webui.png

0 → 100644

250 KB

doc/webui_result.png

0 → 100644

384 KB

docker/Dockerfile

0 → 100644

evaluation/IMQ.py

0 → 100755

evaluation/IMQ_quick_rwp.py

0 → 100755