init

GroundingDINO/setup.py (new file, mode 0 → 100644)
INSTALL.md (new file, mode 0 → 100644)
LICENSE (new file, mode 0 → 100644)
README.md (new file, mode 0 → 100644)
README_origin.md (new file, mode 0 → 100644)
assets/arxiv_fix.png (new file, mode 0 → 100644, 722 KB)
assets/backgrounds/rw_01.jpg (new file, mode 0 → 100644, 786 KB)
assets/backgrounds/rw_02.jpg (new file, mode 0 → 100644, 673 KB)
assets/backgrounds/rw_03.jpg (new file, mode 0 → 100644, 629 KB)
assets/backgrounds/rw_04.jpg (new file, mode 0 → 100644, 876 KB)
assets/backgrounds/rw_05.jpg (new file, mode 0 → 100644, 707 KB)
assets/demo.jpg (new file, mode 0 → 100644, 174 KB)
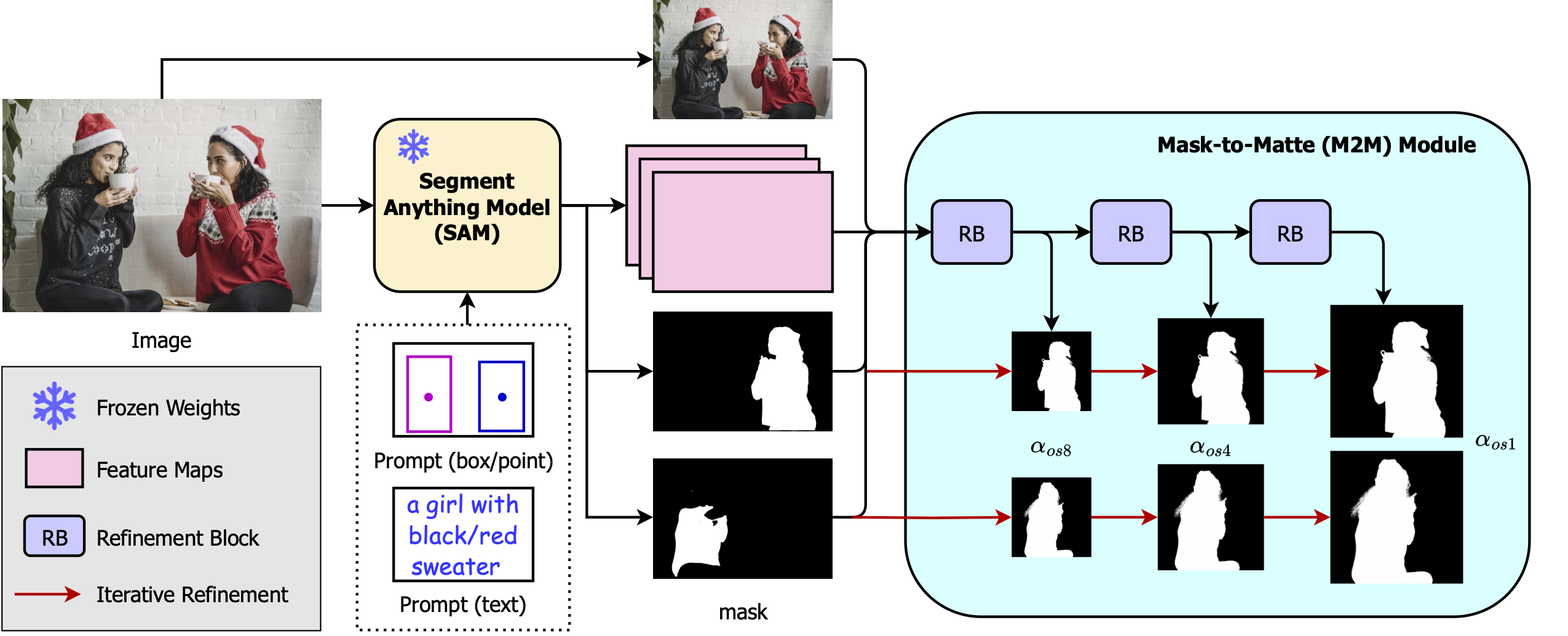

assets/mam_vis_v2.png (new file, mode 0 → 100644, 2.25 MB)
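The diff view records each file's mode as an `old → new` pair of octal git modes, where 0 means the path did not exist before the commit, so "0 → 100644" marks a newly added regular, non-executable file. The sketch below decodes such a pair with Python's standard `stat` module. The helper name `describe_mode_change` and the assumption that the input uses the `old → new` text format are hypothetical, for illustration only.

```python
import stat


def describe_mode_change(change: str) -> str:
    """Interpret a diff-view mode transition such as "0 → 100644".

    Hypothetical helper: both sides are assumed to be octal git file
    modes, and 0 means the path is absent on that side of the commit.
    """
    old_text, new_text = (part.strip() for part in change.split("→"))
    old_mode, new_mode = int(old_text, 8), int(new_text, 8)
    if new_mode == 0:
        return "deleted"
    # stat.filemode renders a numeric mode the way `ls -l` does
    perms = stat.filemode(new_mode)
    if old_mode == 0:
        return f"added ({perms})"
    if old_mode != new_mode:
        return f"mode changed ({stat.filemode(old_mode)} -> {perms})"
    return f"unchanged ({perms})"


print(describe_mode_change("0 → 100644"))  # added (-rw-r--r--)
```

Every file in this commit has the same "0 → 100644" transition, so each decodes to an added regular file readable by everyone and writable only by its owner.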