First commit

model.properties

0 → 100644

model_zoo/baseline.json

0 → 100644

model_zoo/baseline.pth

0 → 100644

File added

models/basicblock.py

0 → 100644

models/common.py

0 → 100644

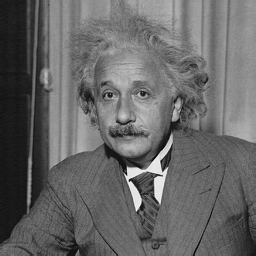

models/einstein.png

0 → 100644

51.9 KB

models/loss.py

0 → 100644

models/loss_ssim.py

0 → 100644

models/model_base.py

0 → 100644

models/model_gan.py

0 → 100644

models/model_plain.py

0 → 100644

models/model_plain2.py

0 → 100644

models/model_plain4.py

0 → 100644

models/model_vrt.py

0 → 100644

models/network_cnn.py

0 → 100644

models/network_dncnn.py

0 → 100644

models/network_dpsr.py

0 → 100644